Netinfo Security, 2025, Vol. 25, Issue (12): 1878-1888. doi: 10.3969/j.issn.1671-1122.2025.12.004

Detecting Poisoned Samples for Untargeted Backdoor Attacks

PANG Shuchao1, LI Zhengxiao1, QU Junyi1, MA Ruhao1, CHEN Hechang2, DU Anan3

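1. School of Cyber Science and Engineering, Nanjing University of Science and Technology, Nanjing 210094, China
2. School of Artificial Intelligence, Jilin University, Changchun 130012, China
3. School of Computer and Software, Nanjing University of Industry Technology, Nanjing 210023, China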

Received: 2025-10-05; Online: 2025-12-10; Published: 2026-01-06

Corresponding author: DU Anan, E-mail: anan.du@niit.edu.cn

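About the authors:
- PANG Shuchao (b. 1988), male, from Shandong; professor, Ph.D., CCF member. Research interests: security of AI applications, data security and privacy protection.
- LI Zhengxiao (b. 2002), male, from Jiangsu; master's student. Research interests: cyberspace security, data-free distillation, model robustness.
- QU Junyi (b. 2002), male, from Shandong; undergraduate student. Research interests: AI applications and their security.
- MA Ruhao (b. 2000), male, from Shandong; master's student. Research interests: data security and privacy protection, AI applications.
- CHEN Hechang (b. 1988), male, from Jilin; research professor, Ph.D. Research interests: machine learning, data mining, intelligent game playing, knowledge engineering, complex systems.
- DU Anan (b. 1989), female, from Shandong; associate professor, Ph.D. Research interests: weakly supervised learning, intelligent perception, security of AI models.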

Funding: National Natural Science Foundation of China (62206128); National Key Research and Development Program of China (2023YFB2703900)


Abstract:

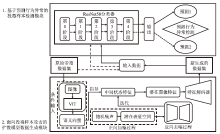

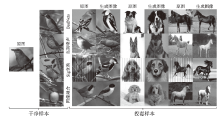

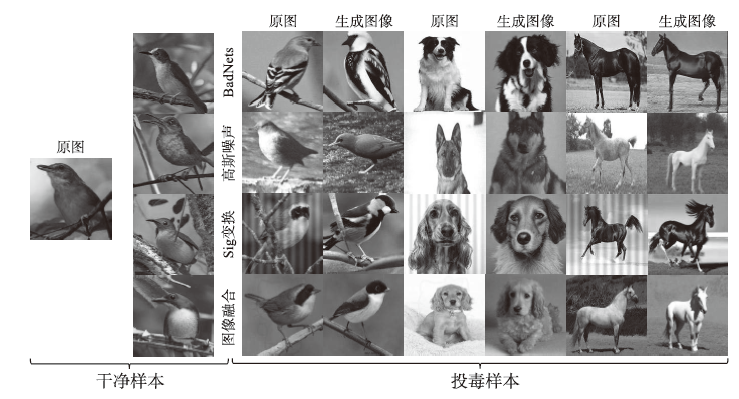

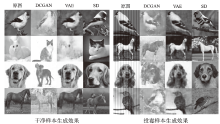

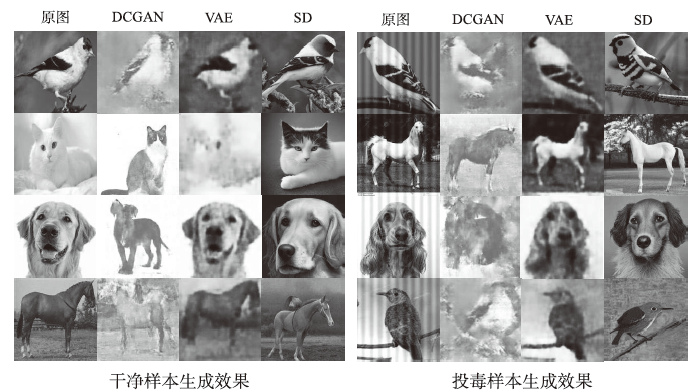

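Backdoor attacks are an important form of data poisoning and pose a serious threat to dataset reliability and to the security of model training. Most mainstream defenses are designed for targeted backdoor attacks, and untargeted backdoor attacks have received little attention. This paper therefore proposes a poisoned-sample detection method for untargeted backdoor attacks. The method is a black-box approach that detects potential untargeted backdoor samples from anomalies in prediction behavior. It consists of two modules. The first is a poisoned-sample detection module based on prediction-behavior anomalies, which flags suspicious samples according to how two successive predictions for the same sample differ. The second is a diffusion-model data-generation module for poisoned-sample attacks, which generates a new dataset that resembles the original one but contains no triggers. Experiments with several types of untargeted backdoor attacks and with different generative models demonstrate the feasibility of the method. They also show the considerable potential and practical value of generative models, and of diffusion models in particular, for backdoor attack detection.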
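The abstract describes the detection module only as comparing two predictions for the same sample. The following is a minimal sketch of how such a black-box comparison could be scored; it is not the authors' implementation. It assumes that each sample yields two class-probability vectors, for example from a model queried before and after the trigger-free generated data is introduced. It flags a sample when its top-1 label changes or when the total variation distance between the two vectors exceeds a `threshold`. The pairing of predictions, the scoring rule, the threshold, and the names `top1`, `total_variation`, and `flag_suspicious` are all hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical helpers illustrating a prediction-disagreement check.
# The names and the scoring rule are assumptions, not the paper's method.


def top1(probs: Sequence[float]) -> int:
    """Return the index of the most probable class."""
    return max(range(len(probs)), key=lambda i: probs[i])


def total_variation(p: Sequence[float], q: Sequence[float]) -> float:
    """Total variation distance between two probability vectors (0 to 1)."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def flag_suspicious(
    first: Sequence[Sequence[float]],
    second: Sequence[Sequence[float]],
    threshold: float = 0.5,
) -> List[Tuple[bool, float, bool]]:
    """Compare two black-box predictions per sample.

    Returns one (label_changed, score, flagged) tuple per sample.
    """
    if len(first) != len(second):
        raise ValueError("both prediction lists must cover the same samples")
    results = []
    for p, q in zip(first, second):
        if len(p) != len(q):
            raise ValueError("probability vectors must have equal length")
        label_changed = top1(p) != top1(q)  # hard-label disagreement
        score = total_variation(p, q)  # soft disagreement
        results.append((label_changed, score, label_changed or score > threshold))
    return results


if __name__ == "__main__":
    before = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]
    after = [[0.85, 0.15], [0.7, 0.3], [0.55, 0.45]]
    for i, (changed, score, flagged) in enumerate(flag_suspicious(before, after)):
        print(i, changed, round(score, 2), flagged)
```

In this toy input, only the second sample is flagged, because its top-1 class flips from 1 to 0. The other two samples shift by only about 0.05 in probability mass. The hard-label test alone fits a setting where the black box returns only labels. The soft score is an optional extra that assumes probabilities are exposed.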


Cite this article:

PANG Shuchao, LI Zhengxiao, QU Junyi, MA Ruhao, CHEN Hechang, DU Anan. Detecting Poisoned Samples for Untargeted Backdoor Attacks[J]. Netinfo Security, 2025, 25(12): 1878-1888.


