Netinfo Security, 2025, Vol. 25, Issue (9): 1367-1376. doi: 10.3969/j.issn.1671-1122.2025.09.005

Research on Transformer-Based Super-Resolution Network Adversarial Sample Defense Method

XU Ruzhi, WU Xiaoxin, LYU Changran

School of Control and Computer Engineering, North China Electric Power University, Beijing 102206, China

Received: 2024-12-09 Online: 2025-09-10 Published: 2025-09-18

Corresponding author: WU Xiaoxin, 120232227082@ncepu.edu.cn

About the authors: XU Ruzhi (b. 1966), female, from Jiangxi, professor, Ph.D.; main research interests: smart grid and AI security | WU Xiaoxin (b. 2000), female, from Hebei, master's student; main research interests: network security and AI security | LYU Changran (b. 1998), female, from Hebei, master's student; main research interests: network security and AI security

Funding: National Natural Science Foundation of China (62372173)

Abstract:

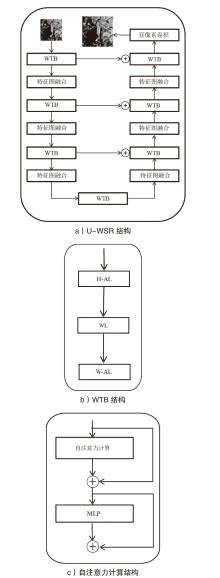

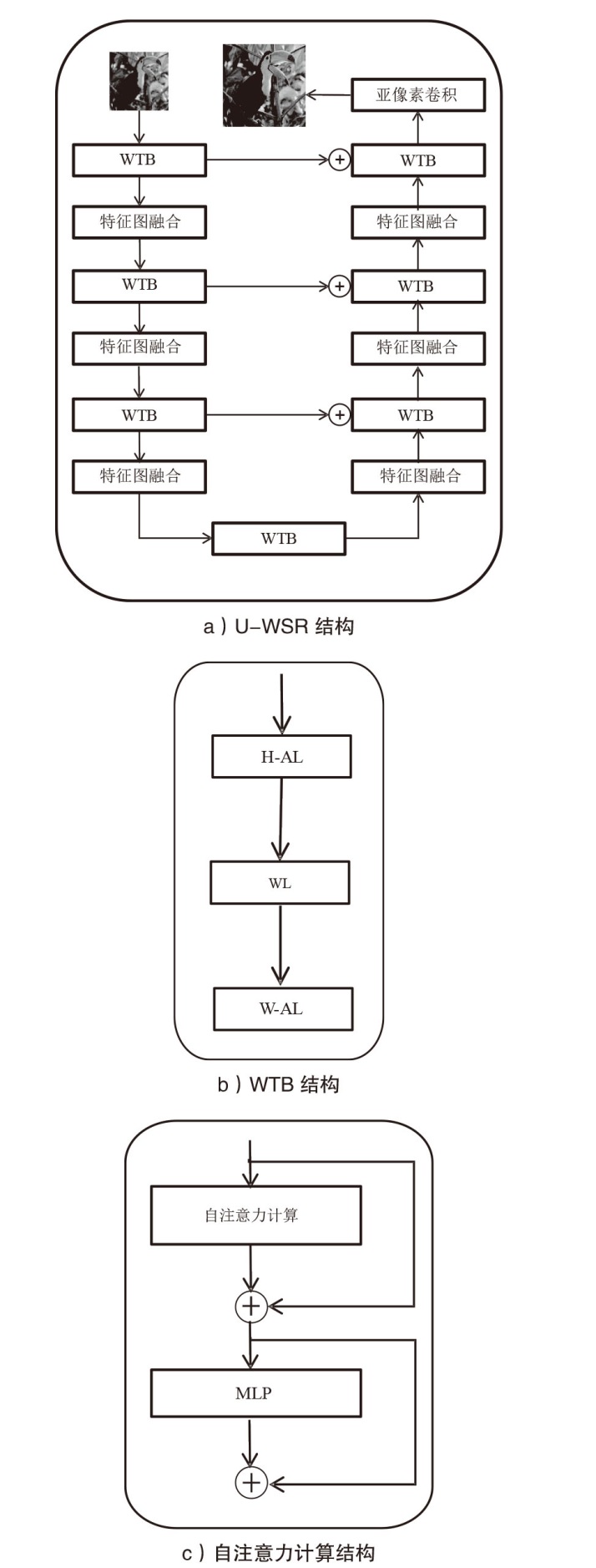

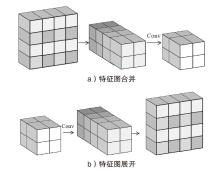

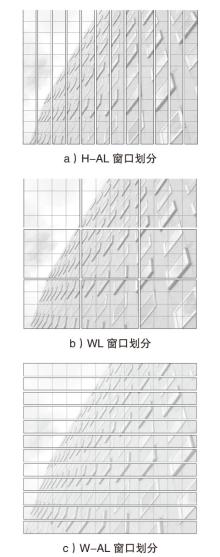

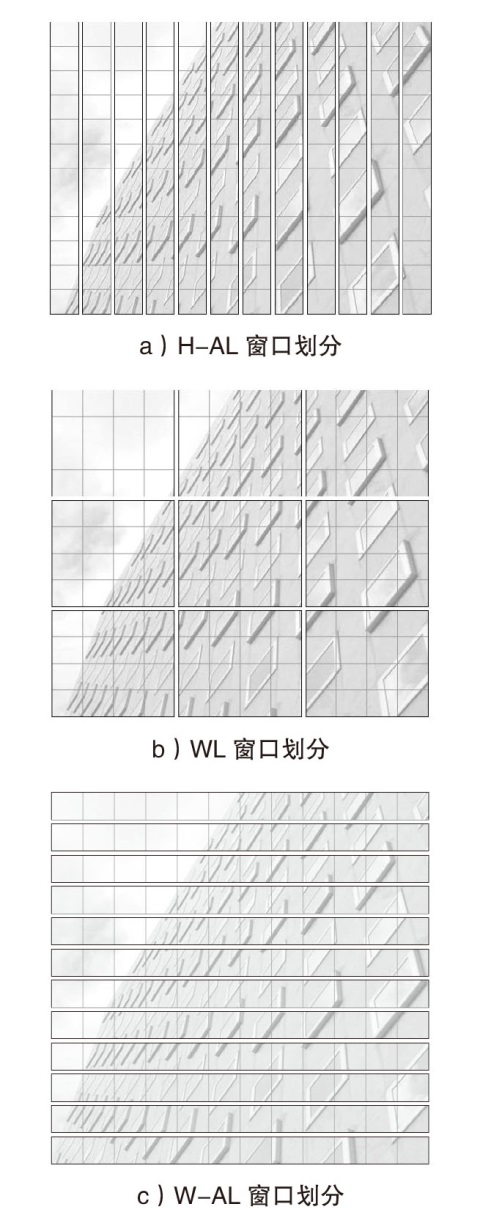

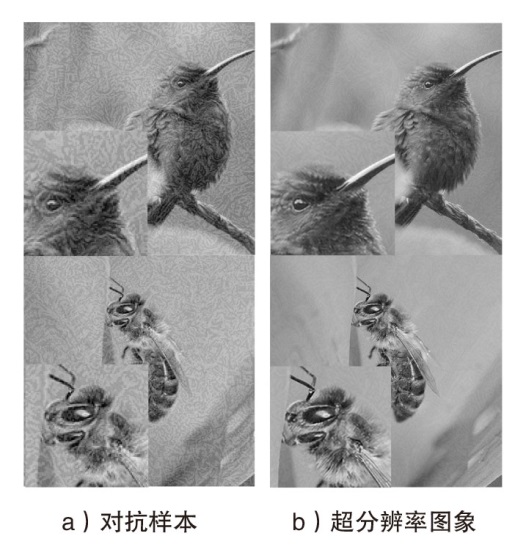

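The vulnerability of deep learning models to adversarial examples carefully crafted by attackers has attracted widespread attention. Existing defenses against adversarial attacks on deep learning achieve some success but lack generality: they work well against specific attack types yet offer limited or no protection against others. This paper proposes a general defense method based on a super-resolution network with a Transformer architecture. First, a self-attention mechanism dynamically enhances information in high-frequency image regions to improve image quality. Second, multi-scale feature fusion is used to suppress adversarial perturbations. Finally, a diversified window partitioning strategy is introduced, which substantially reduces the model's computational complexity while preserving long-range pixel dependencies. Experimental results show that the method reaches an average defense success rate of 90% across multiple attack types, outperforming existing baseline methods and showing stronger robustness.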
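The trade-off behind window partitioning can be illustrated with a small counting sketch. The abstract does not specify the window shapes, so the layouts below (full-width horizontal stripes, full-height vertical stripes, and square windows on a 32 x 32 map) are assumptions chosen only for illustration, and the function names are hypothetical. The sketch counts how many query-key pairs attention must score under each layout and checks which distant pixels still share a window.

```python
def partition(height, width, win_h, win_w):
    """Group pixel coordinates of a height x width map into non-overlapping windows."""
    if height % win_h or width % win_w:
        raise ValueError("window size must tile the feature map exactly")
    windows = []
    for top in range(0, height, win_h):
        for left in range(0, width, win_w):
            windows.append([(r, c)
                            for r in range(top, top + win_h)
                            for c in range(left, left + win_w)])
    return windows


def attention_pairs(windows):
    """Query-key pairs scored when attention is restricted to each window."""
    return sum(len(w) ** 2 for w in windows)


def share_window(windows, p, q):
    """True if pixels p and q fall in the same window and can attend directly."""
    return any(p in w and q in w for w in windows)


H = W = 32  # assumed feature-map size, not taken from the paper
layouts = {
    "global": partition(H, W, H, W),             # one window: full attention
    "horizontal stripes": partition(H, W, 4, W),  # each stripe spans the full width
    "vertical stripes": partition(H, W, H, 4),    # each stripe spans the full height
    "square": partition(H, W, 8, 8),              # purely local windows
}
costs = {name: attention_pairs(ws) for name, ws in layouts.items()}
```

In this toy setting the stripe layouts score one eighth of the pairs that global attention does, yet a pixel can still attend to the far end of its row or column. Square windows are cheaper still but lose that reach, which is one plausible reason to mix window shapes.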


Cite this article

XU Ruzhi, WU Xiaoxin, LYU Changran. Research on Transformer-Based Super-Resolution Network Adversarial Sample Defense Method[J]. Netinfo Security, 2025, 25(9): 1367-1376.

Table 1

Comparison of defense performance across defense methods

| Network model | Benign samples | FGSM-2 | FGSM-10 | I-FGSM | MI-FGSM | PGD | L-BFGS | C&W | JSMA | DeepFool | ZOO | DI2FGSM | MDI2FGSM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| No defense | | | | | | | | | | | | | |
| Inc-v3 | 100.0% | 31.7% | 30.5% | 11.4% | 1.7% | 1.1% | 0.3% | 0.8% | 0.8% | 0.4% | 1.0% | 1.4% | 0.6% |
| Res-50 | 100.0% | 12.2% | 6.1% | 3.4% | 0.4% | 0.2% | 0.1% | 0.1% | 0.2% | 1.0% | 0.8% | 0.3% | 0.2% |
| IncRes-v2 | 100.0% | 59.4% | 53.6% | 21.6% | 0.5% | 0.3% | 0.1% | 0.3% | 0.1% | 0.1% | 0.8% | 1.5% | 0.6% |
| JPEG compression | | | | | | | | | | | | | |
| Inc-v3 | 96.0% | 62.3% | 48.8% | 77.5% | 69.4% | 68.1% | 76.5% | 80.5% | 78.8% | 81.2% | 80.2% | 2.1% | 1.3% |
| Res-50 | 92.8% | 57.6% | 42.9% | 74.8% | 70.8% | 65.3% | 77.9% | 81.3% | 76.3% | 77.3% | 76.9% | 0.7% | 0.4% |
| IncRes-v2 | 95.5% | 67.0% | 53.7% | 81.3% | 72.8% | 66.2% | 79.4% | 83.1% | 81.6% | 83.9% | 82.9% | 1.6% | 1.1% |
| R&P | | | | | | | | | | | | | |
| Inc-v3 | 97.3% | 69.2% | 53.2% | 90.6% | 89.5% | 88.5% | 89.1% | 89.5% | 88.2% | 88.9% | 87.3% | 7.0% | 5.8% |
| Res-50 | 92.5% | 66.8% | 48.8% | 88.2% | 88.0% | 87.2% | 86.6% | 87.5% | 86.3% | 90.9% | 88.9% | 6.6% | 4.2% |
| IncRes-v2 | 98.7% | 70.7% | 55.8% | 87.5% | 88.3% | 86.8% | 85.1% | 88.0% | 85.6% | 89.7% | 87.9% | 7.5% | 5.3% |
| TVM + Image Quilting | | | | | | | | | | | | | |
| Inc-v3 | 96.2% | 70.2% | 54.6% | 85.7% | 84.5% | 83.6% | 84.3% | 85.3% | 84.2% | 85.9% | 84.3% | 4.1% | 1.7% |
| Res-50 | 93.1% | 69.7% | 53.3% | 85.4% | 83.8% | 83.9% | 83.2% | 84.6% | 83.3% | 85.0% | 83.2% | 3.6% | 1.1% |
| IncRes-v2 | 95.6% | 74.6% | 59.0% | 86.5% | 84.8% | 82.6% | 84.3% | 85.3% | 84.1% | 86.2% | 85.9% | 4.5% | 1.2% |
| Pixel Deflection | | | | | | | | | | | | | |
| Inc-v3 | 91.9% | 71.1% | 58.9% | 90.9% | 90.1% | 90.0% | 90.2% | 90.4% | 87.9% | 88.1% | 87.1% | 57.6% | 21.9% |
| Res-50 | 92.7% | 84.6% | 66.8% | 91.2% | 89.6% | 88.5% | 89.9% | 91.7% | 88.6% | 90.3% | 89.9% | 57.0% | 29.5% |
| IncRes-v2 | 92.1% | 78.2% | 71.6% | 91.3% | 89.8% | 89.2% | 88.4% | 89.7% | 86.1% | 88.9% | 86.2% | 57.9% | 24.6% |
| SR-ResNet | | | | | | | | | | | | | |
| Inc-v3 | 94.0% | 89.5% | 69.9% | 93.4% | 92.6% | 91.5% | 92.1% | 93.3% | 90.4% | 93.2% | 90.8% | 54.3% | 24.9% |
| Res-50 | 92.8% | 85.5% | 62.3% | 90.8% | 90.6% | 91.1% | 90.8% | 92.1% | 89.9% | 90.5% | 89.8% | 60.1% | 29.8% |
| IncRes-v2 | 95.2% | 92.6% | 79.9% | 94.2% | 93.1% | 92.9% | 93.5% | 94.2% | 92.3% | 94.1% | 94.3% | 65.2% | 36.6% |
| Wavelet denoising + EDSR | | | | | | | | | | | | | |
| Inc-v3 | 97.0% | 94.2% | 79.7% | 96.2% | 95.9% | 95.1% | 95.2% | 96.0% | 95.1% | 96.1% | 95.6% | 67.9% | 31.7% |
| Res-50 | 93.9% | 86.1% | 64.9% | 92.3% | 92.0% | 92.3% | 92.6% | 93.1% | 92.1% | 91.5% | 90.1% | 60.7% | 31.9% |
| IncRes-v2 | 98.2% | 95.3% | 82.3% | 95.8% | 95.0% | 94.3% | 95.6% | 95.6% | 94.8% | 96.0% | 95.7% | 69.8% | 35.6% |
| U-WSR | | | | | | | | | | | | | |
| Inc-v3 | 99.3% | 94.3% | 85.3% | 98.1% | 97.3% | 96.3% | 97.1% | 96.6% | 96.9% | 97.3% | 96.6% | 74.5% | 36.5% |
| Res-50 | 95.6% | 90.1% | 70.3% | 95.6% | 95.4% | 93.6% | 94.3% | 94.5% | 94.3% | 93.5% | 92.6% | 66.8% | 37.9% |
| IncRes-v2 | 98.9% | 96.5% | 92.3% | 96.9% | 96.7% | 96.8% | 96.9% | 97.6% | 95.5% | 97.0% | 95.8% | 75.3% | 42.3% |
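To relate the per-attack figures to a single average, the U-WSR rows of Table 1 can be aggregated directly. This section does not say how the paper computes its average, so the sketch below assumes a plain unweighted mean over the twelve attack columns, with the benign-sample column excluded. The variable names are illustrative.

```python
from statistics import mean

ATTACKS = ["FGSM-2", "FGSM-10", "I-FGSM", "MI-FGSM", "PGD", "L-BFGS",
           "C&W", "JSMA", "DeepFool", "ZOO", "DI2FGSM", "MDI2FGSM"]

# U-WSR rows of Table 1, attack columns only, in percent.
U_WSR = {
    "Inc-v3":    [94.3, 85.3, 98.1, 97.3, 96.3, 97.1, 96.6, 96.9, 97.3, 96.6, 74.5, 36.5],
    "Res-50":    [90.1, 70.3, 95.6, 95.4, 93.6, 94.3, 94.5, 94.3, 93.5, 92.6, 66.8, 37.9],
    "IncRes-v2": [96.5, 92.3, 96.9, 96.7, 96.8, 96.9, 97.6, 95.5, 97.0, 95.8, 75.3, 42.3],
}

# Unweighted mean per classifier and over all 36 entries (an assumed aggregation).
per_model = {model: mean(vals) for model, vals in U_WSR.items()}
overall = mean(v for vals in U_WSR.values() for v in vals)

# Attack against which the defense is weakest for each classifier.
weakest = {model: ATTACKS[vals.index(min(vals))] for model, vals in U_WSR.items()}
```

Under this assumption the overall mean comes out near 88%, and MDI2FGSM is the weakest case for all three classifiers, so the two input-diversity attacks pull the average down noticeably.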

Table 2

Recognition accuracy on benign samples after processing by different defense methods

| Dataset | Network model | No defense | JPEG compression | R&P | TVM + Image Quilting | Pixel Deflection | SR-ResNet | Wavelet denoising + EDSR | U-WSR |
|---|---|---|---|---|---|---|---|---|---|
| ILSVRC | Inc-v3 | 100.0% | 96.0% | 97.3% | 96.2% | 91.9% | 94.0% | 97.0% | 99.3% |
| ILSVRC | Res-50 | 100.0% | 92.8% | 92.5% | 93.1% | 92.7% | 92.8% | 93.9% | 95.6% |
| ILSVRC | IncRes-v2 | 100.0% | 95.5% | 98.7% | 95.6% | 92.1% | 95.2% | 98.2% | 98.9% |
| NIPS-DEV | Inc-v3 | 95.9% | 89.7% | 92.0% | 88.8% | 86.5% | 88.3% | 90.9% | 92.6% |
| NIPS-DEV | Res-50 | 98.9% | 86.9% | 90.6% | 85.6% | 87.8% | 86.8% | 86.9% | 96.9% |
| NIPS-DEV | IncRes-v2 | 99.4% | 94.5% | 98.9% | 87.9% | 88.9% | 90.3% | 92.9% | 99.5% |
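A defense that preprocesses every input also changes clean images, so Table 2 is easiest to read as an accuracy cost relative to the undefended classifier. The sketch below computes that cost for each defense as the mean drop, in percentage points, over the six dataset and model combinations. Averaging the six rows with equal weight is an assumption made here, not something stated in the paper.

```python
from statistics import mean

DEFENSES = ["JPEG compression", "R&P", "TVM + Image Quilting", "Pixel Deflection",
            "SR-ResNet", "Wavelet denoising + EDSR", "U-WSR"]

# (dataset, model): (no-defense accuracy, [accuracy after each defense]), in percent.
TABLE2 = {
    ("ILSVRC", "Inc-v3"):      (100.0, [96.0, 97.3, 96.2, 91.9, 94.0, 97.0, 99.3]),
    ("ILSVRC", "Res-50"):      (100.0, [92.8, 92.5, 93.1, 92.7, 92.8, 93.9, 95.6]),
    ("ILSVRC", "IncRes-v2"):   (100.0, [95.5, 98.7, 95.6, 92.1, 95.2, 98.2, 98.9]),
    ("NIPS-DEV", "Inc-v3"):    (95.9, [89.7, 92.0, 88.8, 86.5, 88.3, 90.9, 92.6]),
    ("NIPS-DEV", "Res-50"):    (98.9, [86.9, 90.6, 85.6, 87.8, 86.8, 86.9, 96.9]),
    ("NIPS-DEV", "IncRes-v2"): (99.4, [94.5, 98.9, 87.9, 88.9, 90.3, 92.9, 99.5]),
}


def mean_drop(defense):
    """Mean benign-accuracy loss (percentage points) caused by one defense."""
    i = DEFENSES.index(defense)
    return mean(base - defended[i] for base, defended in TABLE2.values())


drops = {d: round(mean_drop(d), 2) for d in DEFENSES}
least_harmful = min(drops, key=drops.get)  # defense with the smallest mean drop
```

With equal weighting, U-WSR has the smallest mean drop of the seven defenses, at roughly 1.9 percentage points.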

Table 3

Performance comparison of super-resolution networks on the Inc-v3 network

| Attack method / Optimization method | No defense | SR-ResNet | EDSR | U-WSR |
|---|---|---|---|---|
| Benign samples | 100.0% | 94.0% | 96.2% | 99.3% |
| FGSM-2 | 31.7% | 89.5% | 92.6% | 95.3% |
| FGSM-10 | 30.5% | 69.9% | 73.3% | 76.4% |
| I-FGSM | 11.4% | 93.4% | 95.9% | 97.3% |
| MI-FGSM | 1.7% | 92.6% | 95.2% | 97.6% |
| PGD | 1.1% | 91.5% | 93.4% | 95.4% |
| L-BFGS | 0.3% | 92.1% | 94.4% | 96.8% |
| C&W | 0.8% | 93.3% | 95.6% | 98.1% |
| JSMA | 0.8% | 90.4% | 93.6% | 95.1% |
| DeepFool | 0.4% | 93.2% | 95.5% | 97.6% |
| ZOO | 1.0% | 90.8% | 93.5% | 95.8% |
| DI2FGSM | 1.4% | 54.3% | 57.2% | 60.8% |
| MDI2FGSM | 0.6% | 24.9% | 27.1% | 30.6% |

Table 4

Performance comparison of super-resolution networks on the Res-50 network

| Attack method / Optimization method | No defense | SR-ResNet | EDSR | U-WSR |
|---|---|---|---|---|
| Benign samples | 100.0% | 92.8% | 93.9% | 95.6% |
| FGSM-2 | 12.2% | 85.5% | 86.1% | 90.1% |
| FGSM-10 | 6.1% | 62.3% | 64.9% | 70.3% |
| I-FGSM | 3.4% | 90.8% | 92.3% | 95.6% |
| MI-FGSM | 0.4% | 90.6% | 92.0% | 95.4% |
| PGD | 0.2% | 91.1% | 92.3% | 93.6% |
| L-BFGS | 0.1% | 90.8% | 92.6% | 94.3% |
| C&W | 0.1% | 92.1% | 93.1% | 94.5% |
| JSMA | 0.2% | 89.9% | 92.1% | 94.3% |
| DeepFool | 1.0% | 90.5% | 91.5% | 93.5% |
| ZOO | 0.8% | 89.8% | 90.1% | 92.6% |
| DI2FGSM | 0.3% | 60.1% | 60.7% | 66.8% |
| MDI2FGSM | 0.2% | 29.8% | 31.9% | 37.9% |

Table 5

Performance comparison of super-resolution networks on the IncRes-v2 network

| Attack method / Optimization method | No defense | SR-ResNet | EDSR | U-WSR |
|---|---|---|---|---|
| Benign samples | 100.0% | 95.2% | 98.2% | 98.9% |
| FGSM-2 | 59.4% | 92.6% | 95.3% | 96.5% |
| FGSM-10 | 53.6% | 79.9% | 82.3% | 92.3% |
| I-FGSM | 21.6% | 94.2% | 95.8% | 96.9% |
| MI-FGSM | 0.5% | 93.1% | 95.0% | 96.7% |
| PGD | 0.3% | 92.9% | 94.3% | 96.8% |
| L-BFGS | 0.1% | 93.5% | 95.6% | 96.9% |
| C&W | 0.3% | 94.2% | 95.6% | 97.6% |
| JSMA | 0.1% | 92.3% | 94.8% | 95.5% |
| DeepFool | 0.1% | 94.1% | 96.0% | 97.0% |
| ZOO | 0.8% | 94.3% | 95.7% | 95.8% |
| DI2FGSM | 1.5% | 65.2% | 69.8% | 75.3% |
| MDI2FGSM | 0.6% | 36.6% | 35.6% | 42.3% |

