Netinfo Security ›› 2023, Vol. 23 ›› Issue (7): 22-30. doi: 10.3969/j.issn.1671-1122.2023.07.003

Efficient Neural Network Inference Protocol Based on Secure Two-Party Computation

XU Chungen1, XUE Shaokang2, XU Lei1, ZHANG Pan3

1. School of Mathematics and Statistics, Nanjing University of Science and Technology, Nanjing 210094, China

2. School of Computer Science and Engineering, Nanjing University of Science and Technology, Nanjing 210094, China

3. School of Cyber Science and Engineering, Nanjing University of Science and Technology, Nanjing 210094, China

Received: 2023-02-20 Online: 2023-07-10 Published: 2023-07-14

Corresponding author: XUE Shaokang, xueshaok@njust.edu.cn

About the authors: XU Chungen (born 1969), male, from Anhui, professor, Ph.D., CCF member; research interests: cryptography and cyberspace security | XUE Shaokang (born 1999), male, from Henan, master's student; research interest: secure multi-party computation | XU Lei (born 1990), male, from Anhui, associate professor, Ph.D., CCF member; research interests: applied cryptography and information security | ZHANG Pan (born 1997), male, from Anhui, Ph.D. student; research interests: federated learning and differential privacy

Supported by: National Natural Science Foundation of China (62072240); National Natural Science Foundation of China (62202228); Natural Science Foundation of Jiangsu Province (BK20210330)

Abstract:

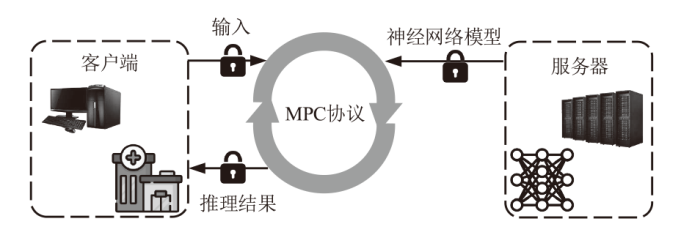

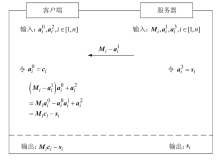

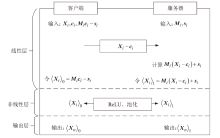

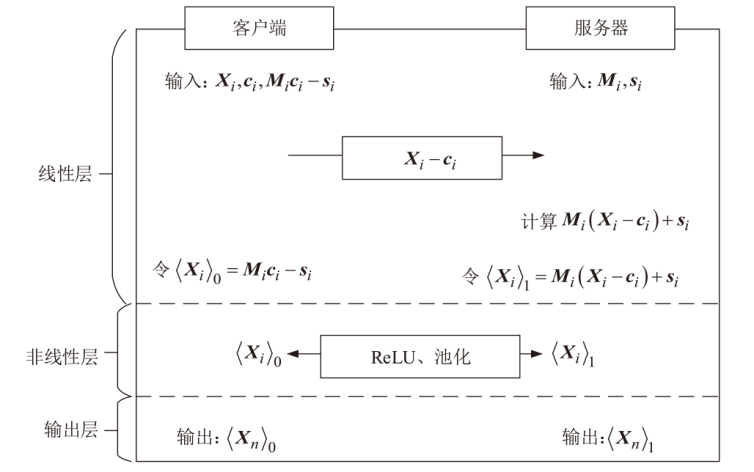

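In recent years, machine learning as a service (MLaaS) has developed rapidly, but in practice its performance faces significant bottlenecks, and it risks leaking both user data and the parameters of enterprises' neural network models. Several privacy-preserving machine learning schemes already exist, but they suffer from low computational efficiency and high communication overhead. To address these problems, this paper proposes an efficient neural network inference protocol based on secure two-party computation. In this protocol, the linear layers use secret sharing to protect the privacy of the input data, and the nonlinear layers evaluate activation functions with a low-communication comparison function based on oblivious transfer. Experimental results show that, compared with existing schemes, the protocol improves efficiency by at least 23% and reduces communication overhead by at least 51% on two benchmark datasets.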
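The secret-sharing idea mentioned for the linear layers can be illustrated with a minimal sketch. It assumes two-party additive sharing over the ring Z_{2^32} and, purely for simplicity, layer weights known to both parties. The ring size, the function names (`share`, `reconstruct`, `linear_layer`, `decode`), and the sample values are illustrative assumptions, not taken from the paper. The paper's actual protocol, which also protects the model parameters and evaluates activations through an oblivious-transfer-based comparison, is not reproduced here.

```python
import secrets

MOD = 2 ** 32  # ring Z_{2^32}; an assumed parameter, not taken from the paper


def share(values):
    """Split each value into two additive shares modulo MOD."""
    share0 = [secrets.randbelow(MOD) for _ in values]
    share1 = [(v - r) % MOD for v, r in zip(values, share0)]
    return share0, share1


def reconstruct(share0, share1):
    """Recombine two additive shares into the underlying ring elements."""
    return [(a + b) % MOD for a, b in zip(share0, share1)]


def linear_layer(weights, vector):
    """Compute weights @ vector modulo MOD; each party runs this on its own share."""
    return [sum(w * x for w, x in zip(row, vector)) % MOD for row in weights]


def decode(value):
    """Map a ring element back to a signed integer (upper half is negative)."""
    return value - MOD if value >= MOD // 2 else value


weights = [[2, -1, 0], [1, 3, -2]]  # hypothetical layer weights
x = [5, 7, -4]                      # hypothetical private input

x0, x1 = share(x)                   # each share alone is uniformly random
y0 = linear_layer(weights, x0)      # party 0 computes on its share only
y1 = linear_layer(weights, x1)      # party 1 computes on its share only
y = [decode(v) for v in reconstruct(y0, y1)]
print(y)  # [3, 34], the same as weights @ x in the clear
```

Because a linear map distributes over addition, the two local results are themselves additive shares of the layer output, so no interaction is needed in this simplified setting. Nonlinear activations such as comparisons do not have this property, which is why they call for a separate interactive protocol.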

CLC Number:

Cite this article

XU Chungen, XUE Shaokang, XU Lei, ZHANG Pan. Efficient Neural Network Inference Protocol Based on Secure Two-Party Computation[J]. Netinfo Security, 2023, 23(7): 22-30.


