Netinfo Security ›› 2023, Vol. 23 ›› Issue (7): 64-73. doi: 10.3969/j.issn.1671-1122.2023.07.007

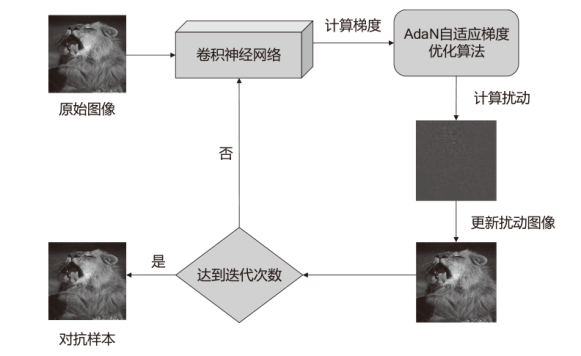

Transferable Image Adversarial Attack Method with AdaN Adaptive Gradient Optimizer

LI Chenwei¹, ZHANG Hengwei¹, GAO Wei², YANG Bo¹

- 1. Department of Cryptogram Engineering, PLA Information Engineering University, Zhengzhou 450001, China
- 2. Beijing Subway Science and Technology Development Co., Ltd., Beijing 100160, China

Received: 2023-02-10 Online: 2023-07-10 Published: 2023-07-14

Corresponding author: ZHANG Hengwei, zhw11qd@126.com

About the authors: LI Chenwei (born 1992), male, from Hubei, master's student, research interest: computer vision security | ZHANG Hengwei (born 1978), male, from Henan, associate professor, Ph.D., research interests: network security games, adversarial attack and defense in artificial intelligence | GAO Wei (born 1978), male, from Henan, engineer, M.S., research interest: applications of artificial intelligence | YANG Bo (born 1993), male, from Hubei, Ph.D. student, research interest: artificial intelligence security

Supported by: National Key R&D Program of China (2017YFB0801904)

Abstract:

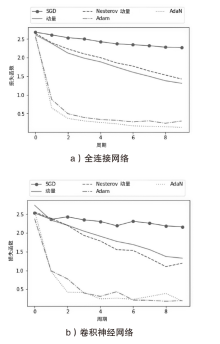

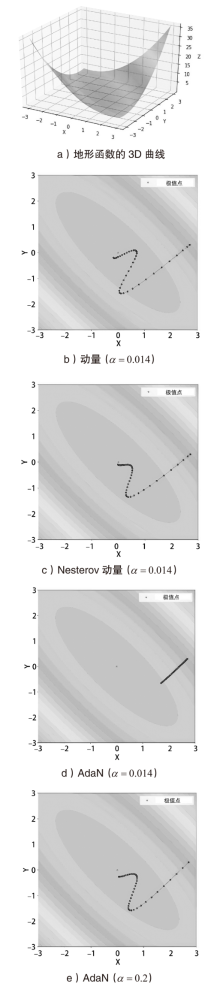

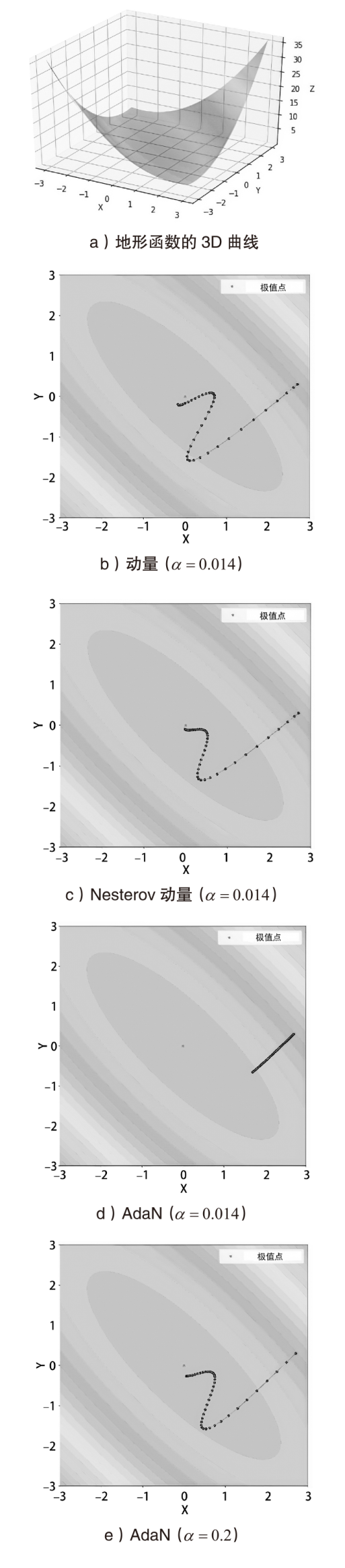

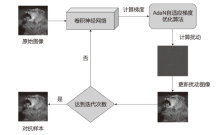

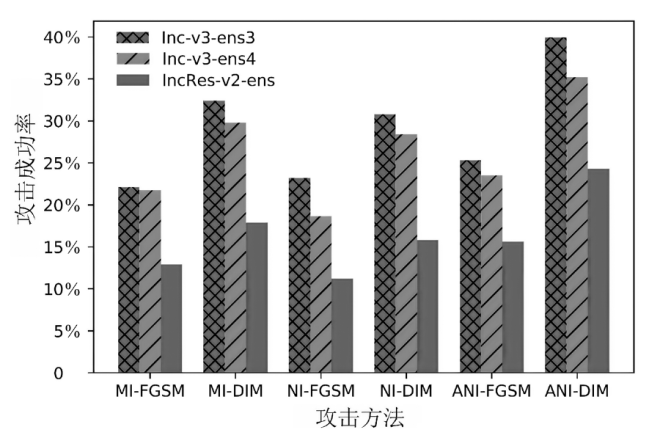

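Most network models perform poorly under adversarial attacks, which poses a serious threat to the security of network algorithms. Adversarial attacks have therefore become one of the effective ways to evaluate the security of network models. Existing white-box attack methods already achieve high attack success rates, but success rates under black-box conditions still leave room for improvement. Taking gradient optimization as its starting point, this paper introduces the adaptive gradient optimization algorithm AdaN into the adversarial example generation process to accelerate convergence and stabilize the gradient update direction, thereby enhancing the transferability of adversarial attacks. To further strengthen the attack, the proposed method is combined with other data augmentation methods, yielding attacks with higher success rates. In addition, adversarial examples are generated by ensembling multiple known models, so that adversarially trained network models can be attacked more effectively in the black-box setting. Experimental results show that adversarial examples generated with AdaN gradient optimization achieve higher black-box attack success rates than current baseline methods and transfer better.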
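The abstract does not spell out the AdaN update rule, so the sketch below shows something else: MI-FGSM, one of the baseline attacks listed in Table 1, which illustrates how a gradient optimizer (here plain momentum) drives iterative adversarial example generation. The toy linear classifier, the logistic loss, and all names and parameter values (`loss_and_grad`, `mi_fgsm`, `eps`, `mu`) are assumptions made for illustration and do not come from the paper.

```python
import math


def loss_and_grad(x, w, y):
    """Logistic loss of a toy linear classifier and its gradient w.r.t. the input x.

    y is the true label in {-1, +1}; the classifier predicts sign(w . x).
    """
    margin = y * sum(wi * xi for wi, xi in zip(w, x))
    loss = math.log1p(math.exp(-margin))
    # d/dx log(1 + exp(-y * w.x)) = -y * w / (1 + exp(margin))
    coeff = -y / (1.0 + math.exp(margin))
    return loss, [coeff * wi for wi in w]


def sign(v):
    """Return -1, 0 or +1."""
    return (v > 0) - (v < 0)


def mi_fgsm(x, w, y, eps=0.3, steps=10, mu=1.0):
    """Momentum iterative FGSM (baseline, not the paper's ANI-FGSM).

    Accumulates L1-normalised gradients with decay factor mu and takes
    sign steps of size eps / steps, staying inside the L-infinity ball
    of radius eps around x and inside the valid range [0, 1].
    """
    alpha = eps / steps
    x_adv = list(x)
    g = [0.0] * len(x)
    for _ in range(steps):
        _, grad = loss_and_grad(x_adv, w, y)
        l1 = sum(abs(v) for v in grad) or 1.0  # avoid division by zero
        g = [mu * gi + gr / l1 for gi, gr in zip(g, grad)]
        x_adv = [xa + alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        # Project back onto the eps-ball and the valid input range.
        x_adv = [min(max(xa, xi - eps, 0.0), xi + eps, 1.0)
                 for xa, xi in zip(x_adv, x)]
    return x_adv


# Hypothetical toy input: w . x = -0.85, so the clean input is classified as -1.
x = [0.2, 0.7, 0.5]
w = [1.5, -2.0, 0.5]
x_adv = mi_fgsm(x, w, y=-1, eps=0.3, steps=10)
```

On this toy input the perturbation stays within `eps` of the original in every coordinate and flips the classifier's decision. An optimizer-based variant such as the one the abstract describes would replace the momentum accumulation line with its own update, which is not reproduced here.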

CLC number:

Cite this article

LI Chenwei, ZHANG Hengwei, GAO Wei, YANG Bo. Transferable Image Adversarial Attack Method with AdaN Adaptive Gradient Optimizer[J]. Netinfo Security, 2023, 23(7): 64-73.

Table 1

Attack success rates for single-model attacks

| Source model | Attack method | Inc-v3 | Inc-v4 | IncRes-v2 | Res-101 | Inc-v3-ens3 | Inc-v3-ens4 | IncRes-v2-ens |
|---|---|---|---|---|---|---|---|---|
| Inc-v3 | I-FGSM | 99.9%* | 22.8% | 19.9% | 18.1% | 7.5% | 6.4% | 4.1% |
| Inc-v3 | MI-FGSM | 99.9%* | 48.8% | 48.0% | 39.9% | 15.1% | 15.2% | 7.8% |
| Inc-v3 | NI-FGSM | 100%* | 55.1% | 50.8% | 44.2% | 14.6% | 14.0% | 7.3% |
| Inc-v3 | ANI-FGSM | 100%* | 52.7% | 48.7% | 42.9% | 16.4% | 16.5% | 8.1% |
| Inc-v4 | I-FGSM | 35.8% | 99.9%* | 24.7% | 21.9% | 7.8% | 6.8% | 4.9% |
| Inc-v4 | MI-FGSM | 65.6% | 99.9%* | 54.9% | 47.7% | 19.8% | 17.4% | 9.6% |
| Inc-v4 | NI-FGSM | 69.6% | 100%* | 58.4% | 49.5% | 17.8% | 16.8% | 7.9% |
| Inc-v4 | ANI-FGSM | 69.2% | 99.9%* | 57.0% | 48.3% | 21.6% | 18.0% | 11.0% |
| IncRes-v2 | I-FGSM | 37.8% | 20.8% | 99.6%* | 25.9% | 8.9% | 7.8% | 5.8% |
| IncRes-v2 | MI-FGSM | 69.8% | 62.1% | 99.5%* | 52.1% | 26.1% | 20.9% | 15.7% |
| IncRes-v2 | NI-FGSM | 70.7% | 61.9% | 99.7%* | 52.3% | 22.2% | 18.9% | 11.9% |
| IncRes-v2 | ANI-FGSM | 68.3% | 61.1% | 99.6%* | 52.7% | 26.9% | 24.2% | 19.4% |
| Res-101 | I-FGSM | 26.7% | 22.7% | 21.2% | 98.2%* | 9.3% | 8.9% | 6.2% |
| Res-101 | MI-FGSM | 53.6% | 48.9% | 44.7% | 98.2%* | 22.1% | 21.7% | 12.9% |
| Res-101 | NI-FGSM | 59.3% | 54.8% | 52.4% | 98.6%* | 23.2% | 18.6% | 11.2% |
| Res-101 | ANI-FGSM | 60.7% | 55.3% | 50.6% | 98.8%* | 25.3% | 23.5% | 15.6% |
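One way to read Table 1 is to average each method's success rate over the three `-ens` target columns. The sketch below does that with values copied from the table. It assumes, from the naming convention, that Inc-v3-ens3, Inc-v3-ens4 and IncRes-v2-ens are the adversarially trained targets; the names `TABLE1_ENS`, `mean_rate` and `best_method_per_source` are hypothetical helpers introduced here.

```python
# Success rates (%) copied from Table 1 for the three "-ens" target columns,
# in the order Inc-v3-ens3, Inc-v3-ens4, IncRes-v2-ens.
# Assumption: these are the adversarially trained target models.
TABLE1_ENS = {
    "Inc-v3": {
        "I-FGSM": (7.5, 6.4, 4.1),
        "MI-FGSM": (15.1, 15.2, 7.8),
        "NI-FGSM": (14.6, 14.0, 7.3),
        "ANI-FGSM": (16.4, 16.5, 8.1),
    },
    "Inc-v4": {
        "I-FGSM": (7.8, 6.8, 4.9),
        "MI-FGSM": (19.8, 17.4, 9.6),
        "NI-FGSM": (17.8, 16.8, 7.9),
        "ANI-FGSM": (21.6, 18.0, 11.0),
    },
    "IncRes-v2": {
        "I-FGSM": (8.9, 7.8, 5.8),
        "MI-FGSM": (26.1, 20.9, 15.7),
        "NI-FGSM": (22.2, 18.9, 11.9),
        "ANI-FGSM": (26.9, 24.2, 19.4),
    },
    "Res-101": {
        "I-FGSM": (9.3, 8.9, 6.2),
        "MI-FGSM": (22.1, 21.7, 12.9),
        "NI-FGSM": (23.2, 18.6, 11.2),
        "ANI-FGSM": (25.3, 23.5, 15.6),
    },
}


def mean_rate(rates):
    """Arithmetic mean of a sequence of success rates (in percent)."""
    return sum(rates) / len(rates)


def best_method_per_source(table):
    """For each source model, return the method with the highest mean rate."""
    return {
        source: max(methods, key=lambda name: mean_rate(methods[name]))
        for source, methods in table.items()
    }


best = best_method_per_source(TABLE1_ENS)
for source, method in best.items():
    print(source, method, round(mean_rate(TABLE1_ENS[source][method]), 1))
```

On these three columns ANI-FGSM has the highest mean for every source model. The normally trained target columns are more mixed: with Inc-v3 as the source, for example, NI-FGSM reaches 55.1% on Inc-v4 against 52.7% for ANI-FGSM.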


