Netinfo Security, 2023, Vol. 23, Issue (7): 98-110. doi: 10.3969/j.issn.1671-1122.2023.07.010

A Multi-Server Federation Learning Scheme Based on Differential Privacy and Secret Sharing

1. State Key Laboratory of Public Big Data, Guizhou University, Guiyang 550025, China

2. Guizhou Big Data Academy, Guizhou University, Guiyang 550025, China

Received: 2023-03-27; Online: 2023-07-10; Published: 2023-07-14

Corresponding author: PENG Changgen, cgpeng@gzu.edu.cn

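About the authors: CHEN Jing (1998–), male, from Jiangsu, master's student, CCF member; research interests: secure multi-party computation and privacy protection. PENG Changgen (1963–), male, from Guizhou, professor, Ph.D., CCF distinguished member; research interests: privacy protection, cryptography, and big data security. TAN Weijie (1981–), male, from Shaanxi, associate professor, Ph.D., CCF member; research interests: IoT security, artificial intelligence security, and communication network security. XU Dequan (1989–), male, from Guizhou, Ph.D. candidate; research interests: cryptography and data security.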

Funding: National Natural Science Foundation of China (62272124)

CHEN Jing (1), PENG Changgen (1, 2), TAN Weijie (1, 2), XU Dequan (1)


Abstract:

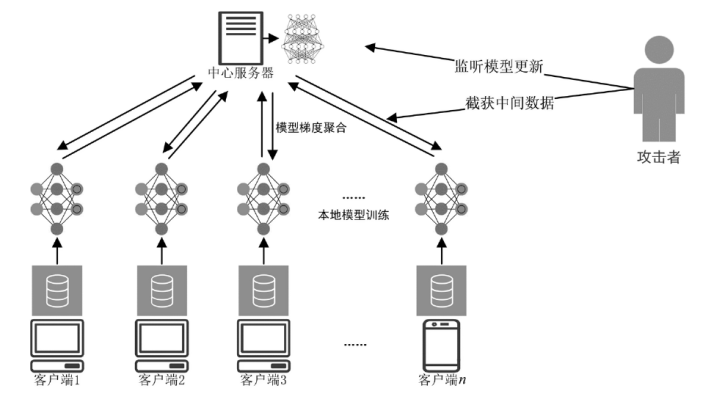

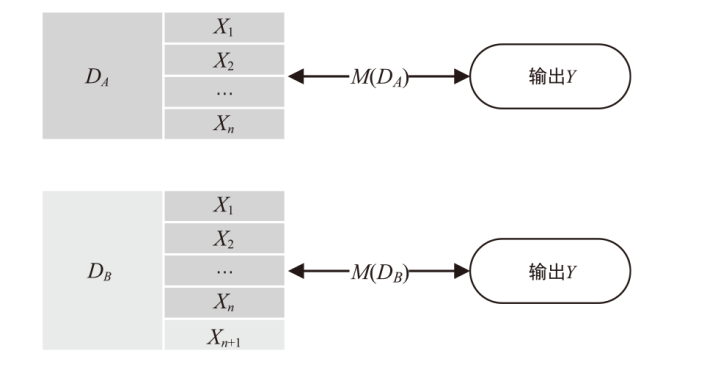

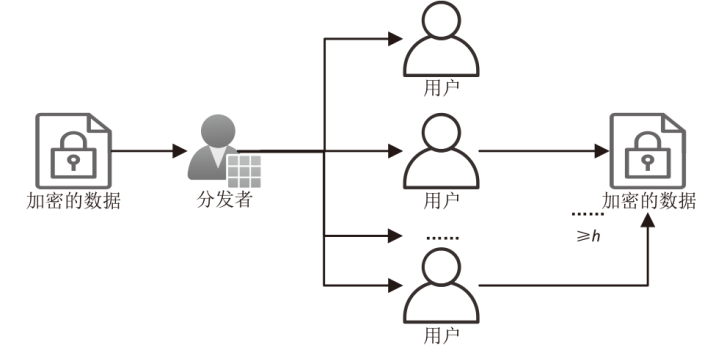

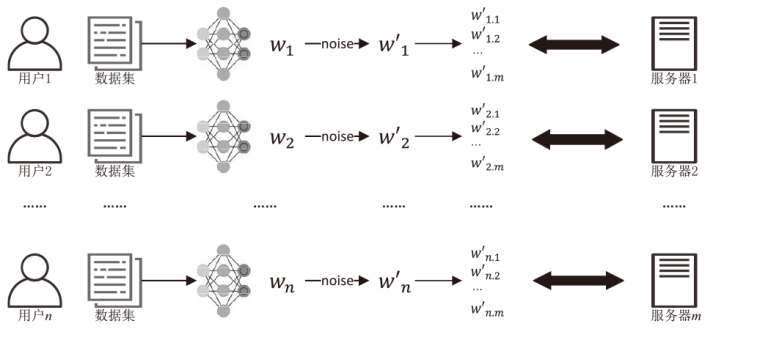

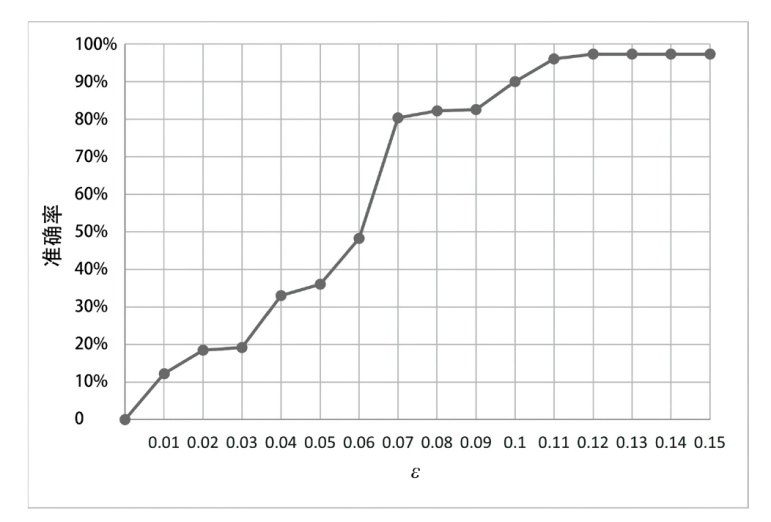

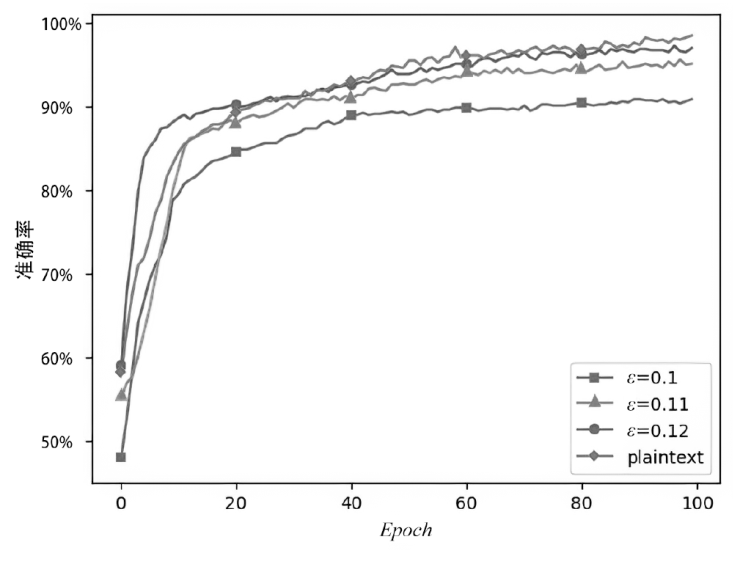

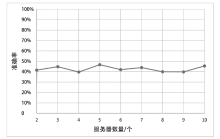

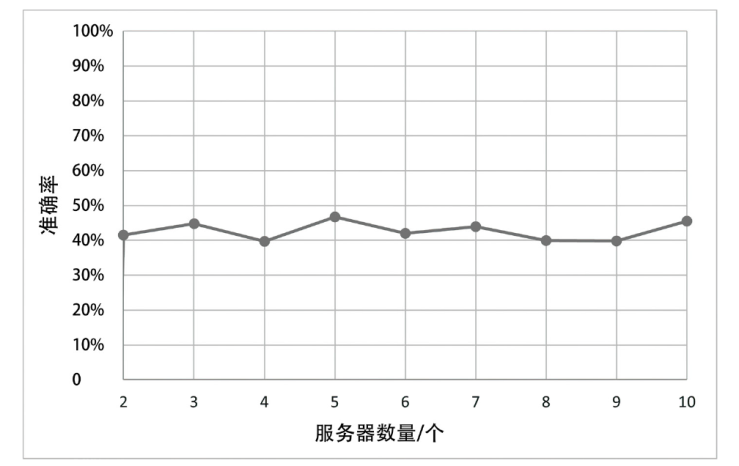

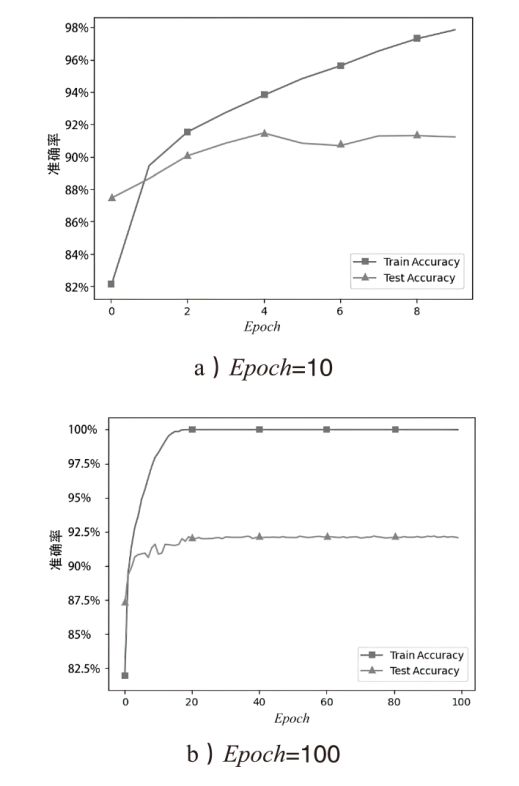

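Federated learning relies on a central-server scheduling mechanism to carry out joint training across multiple users without the data ever leaving its local domain. Most current federated learning schemes, and the privacy-protection schemes built on them, depend on a single central server to perform encryption, decryption, and gradient computation. This lowers the server's computational efficiency, and if the server is attacked from outside or its insiders maliciously collude, a large amount of private information leaks. This paper therefore combines differential privacy with secret sharing and proposes a multi-server federated learning scheme. Noise satisfying (ε,δ)-approximate differential privacy is added to each model trained by a local user, which prevents multiple servers from colluding to obtain private data. The noised gradients are then distributed to multiple servers through a secret sharing protocol, which keeps the transmitted gradients secure while spreading the computational load across the servers and improving overall efficiency. Experiments on public datasets evaluate the scheme's model performance, training overhead, and security. The results show that the scheme provides high security: compared with the plaintext scheme its performance loss is only about 4%, and compared with a single-server encryption scheme it reduces the overall computational overhead by nearly 53%.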
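The abstract outlines a client-side pipeline (perturb the local update with (ε,δ)-differentially private noise, then split the noised gradient into secret shares for several servers) followed by server-side aggregation. The Python sketch below illustrates that flow under assumptions that are not taken from the paper: the classical Gaussian mechanism with L2 clipping, n-of-n additive secret sharing over a prime field with fixed-point encoding, and a final step that simply adds the servers' partial sums. All function names (`clip_and_noise`, `share`, `server_aggregate`, `reconstruct_mean`) and the constants `PRIME` and `SCALE` are hypothetical.

```python
import math
import random

# All constants and function names below are hypothetical illustrations,
# not parameters or APIs taken from the paper.
PRIME = 2**61 - 1  # prime modulus for additive secret sharing (assumed)
SCALE = 10**6      # fixed-point scale mapping real gradients into the field (assumed)


def gaussian_sigma(epsilon: float, delta: float, sensitivity: float) -> float:
    """Noise scale of the classical Gaussian mechanism; the bound assumes 0 < epsilon < 1."""
    return math.sqrt(2.0 * math.log(1.25 / delta)) * sensitivity / epsilon


def clip_and_noise(grad, clip_norm, epsilon, delta, rng):
    """Clip the local gradient to L2 norm clip_norm, then add Gaussian noise per coordinate.

    Using clip_norm as the sensitivity is an assumption about the neighbouring relation.
    """
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    sigma = gaussian_sigma(epsilon, delta, clip_norm)
    return [g * factor + rng.gauss(0.0, sigma) for g in grad]


def encode(x: float) -> int:
    """Fixed-point encode a real number as a field element (negatives wrap around)."""
    return round(x * SCALE) % PRIME


def decode(v: int) -> float:
    """Inverse of encode: elements above PRIME // 2 represent negative numbers."""
    if v > PRIME // 2:
        v -= PRIME
    return v / SCALE


def share(value: int, num_servers: int, rng) -> list:
    """n-of-n additive sharing: num_servers shares that sum to value modulo PRIME."""
    shares = [rng.randrange(PRIME) for _ in range(num_servers - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def client_upload(grad, num_servers, clip_norm, epsilon, delta, rng):
    """Return the noised gradient (kept locally) and one share vector per server."""
    noisy = clip_and_noise(grad, clip_norm, epsilon, delta, rng)
    per_coord = [share(encode(x), num_servers, rng) for x in noisy]
    # Transpose so that element j is the vector of shares destined for server j.
    return noisy, [list(col) for col in zip(*per_coord)]


def server_aggregate(share_vectors):
    """Each server adds, coordinate-wise, the share vectors it received from all clients."""
    return [sum(col) % PRIME for col in zip(*share_vectors)]


def reconstruct_mean(partial_sums, num_clients):
    """Combine the servers' partial sums and decode the averaged noised gradient."""
    totals = [sum(col) % PRIME for col in zip(*partial_sums)]
    return [decode(v) / num_clients for v in totals]


if __name__ == "__main__":
    rng = random.Random(0)
    num_servers, clip_norm, epsilon, delta = 4, 1.0, 0.5, 1e-5
    client_grads = [[0.3, -0.2, 0.1], [0.05, 0.4, -0.6], [-0.1, 0.0, 0.2]]
    uploads = [client_upload(g, num_servers, clip_norm, epsilon, delta, rng)
               for g in client_grads]
    # Server j only ever sees the j-th share vector of each client.
    partials = [server_aggregate([shares[j] for _, shares in uploads])
                for j in range(num_servers)]
    print("reconstructed mean:", reconstruct_mean(partials, len(client_grads)))
    noisy = [n for n, _ in uploads]
    print("direct mean       :", [sum(c) / len(noisy) for c in zip(*noisy)])
```

Under this additive scheme any coalition of fewer than all S servers sees only uniformly random shares, while the DP noise is what still limits leakage if every server colludes, in line with the motivation stated in the abstract. The aggregation step also shows why the load can be spread: each server performs the same modular additions on its own share vectors independently of the others.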

Cite this article

CHEN Jing, PENG Changgen, TAN Weijie, XU Dequan. A Multi-Server Federation Learning Scheme Based on Differential Privacy and Secret Sharing[J]. Netinfo Security, 2023, 23(7): 98-110.

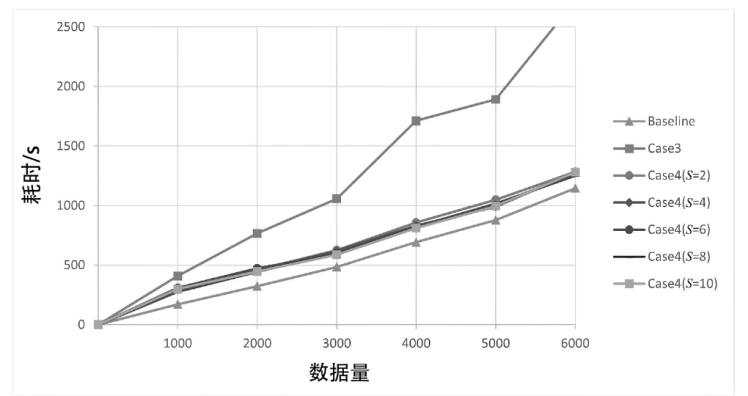

Table 3 Experimental reference settings

| Setting | Details |
|---|---|
| Baseline | plaintext, Epoch=100, |
| Case1 | |
| Case2 | G, Epoch=100, |
| Case3 | |
| Case4 | |

Table 4 Computational overhead of training for 100 epochs (s)

| Model \ Data size | 1000 | 2000 | 3000 | 4000 | 5000 | 6000 |
|---|---|---|---|---|---|---|
| Baseline | 169.58 | 323.13 | 485.13 | 692.31 | 877.81 | 1147.50 |
| Case3 | 409.06 | 767.29 | 1058.05 | 1711.50 | 1893.45 | 2721.17 |
| Case4(S=2) | 305.96 | 461.23 | 627.25 | 859.61 | 1049.17 | 1284.53 |
| Case4(S=4) | 295.31 | 449.88 | 618.74 | 830.97 | 991.21 | 1280.81 |
| Case4(S=6) | 308.74 | 472.37 | 594.17 | 810.15 | 1003.98 | 1274.87 |
| Case4(S=8) | 275.63 | 443.73 | 600.08 | 823.97 | 1015.36 | 1250.56 |
| Case4(S=10) | 300.53 | 448.04 | 589.04 | 811.54 | 997.42 | 1281.18 |
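To see how the abstract's "nearly 53%" saving relates to Table 4, the short script below computes the relative reduction of each Case4 configuration over Case3 at every data size. Treating Case3 as the single-server encryption scheme and Case4 with S servers as the proposed multi-server scheme is an assumption, because the detailed settings in Table 3 did not survive extraction; the function names are hypothetical.

```python
# Computational overhead (s) for 100 training epochs, transcribed from Table 4.
SIZES = [1000, 2000, 3000, 4000, 5000, 6000]
CASE3 = [409.06, 767.29, 1058.05, 1711.50, 1893.45, 2721.17]
CASE4 = {
    2: [305.96, 461.23, 627.25, 859.61, 1049.17, 1284.53],
    4: [295.31, 449.88, 618.74, 830.97, 991.21, 1280.81],
    6: [308.74, 472.37, 594.17, 810.15, 1003.98, 1274.87],
    8: [275.63, 443.73, 600.08, 823.97, 1015.36, 1250.56],
    10: [300.53, 448.04, 589.04, 811.54, 997.42, 1281.18],
}


def reduction_percent(reference: float, candidate: float) -> float:
    """Relative saving of `candidate` over `reference`, as a percentage."""
    return 100.0 * (reference - candidate) / reference


def reduction_table() -> dict[int, list[float]]:
    """For each server count S, the saving of Case4 over Case3 at every data size."""
    return {
        s: [reduction_percent(ref, cand) for ref, cand in zip(CASE3, times)]
        for s, times in CASE4.items()
    }


if __name__ == "__main__":
    for s, row in reduction_table().items():
        cells = ", ".join(f"{n}: {r:.1f}%" for n, r in zip(SIZES, row))
        print(f"S={s:<2} {cells}")
```

With these numbers the saving is about 24% to 33% at 1,000 samples and roughly 53% to 54% at 6,000 samples, which suggests, though the source does not say so, that the headline figure refers to the largest workload.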

