Netinfo Security, 2025, Vol. 25, Issue (4): 524-535. doi: 10.3969/j.issn.1671-1122.2025.04.002


Research on Differential Privacy Methods for Medical Diagnosis Based on Knowledge Distillation

- School of Cyber Science and Technology, Beihang University, Beijing 100191, China


Received: 2024-11-25; Online: 2025-04-10; Published: 2025-04-25


Cite this article

LI Xiao, SONG Xiao, LI Yong. Research on Differential Privacy Methods for Medical Diagnosis Based on Knowledge Distillation[J]. Netinfo Security, 2025, 25(4): 524-535.


URL: http://netinfo-security.org/EN/10.3969/j.issn.1671-1122.2025.04.002

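| Layer type | Name | Input size | Output size | Parameter description |
|---|---|---|---|---|
| Input layer | - | (N,3) | (N,3) | Input node feature matrix; N is the number of nodes |
| Graph convolution layer | GATConv(conv1) | (N,3) | (N,96) | 96 convolution kernels, each with 27 weights and one bias; 2688 parameters in total |
| Activation layer | ELU | (N,96) | (N,96) | Applies the ELU activation function |
| Dropout layer | Dropout | (N,96) | (N,96) | Dropout rate of 0.5 |
| Graph convolution layer | GATConv(conv2) | (N,96) | (N,32) | 32 convolution kernels, each with 288 weights and one bias; 9248 parameters in total |
| Activation layer | ELU | (N,32) | (N,32) | Applies the ELU activation function |
| Dropout layer | Dropout | (N,32) | (N,32) | Dropout rate of 0.5 |
| Graph convolution layer | GATConv(conv3) | (N,32) | (N,32) | 32 convolution kernels, each with 96 weights and one bias; 3104 parameters in total |
| Activation layer | ELU | (N,32) | (N,32) | Applies the ELU activation function |
| Pooling layer | Global Mean Pooling | (N,32) | (1,32) | Global mean pooling; aggregates the features of the whole graph |
| Dropout layer | Dropout | (1,32) | (1,32) | Dropout rate of 0.5 |
| Fully connected layer | Linear | (1,32) | (1,2) | Maps 32 hidden units to 2 output classes; 66 parameters in total |
| Activation function | Log Softmax | (1,2) | (1,2) | Uses log_softmax as the output activation |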
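The parameter totals in the table can be checked with a few lines of arithmetic. The sketch below is only a consistency check under the table's own description (kernels times weights per kernel, plus one bias per kernel); it is not the authors' code and does not reproduce how any particular library counts GATConv parameters. The helper names `CONV_LAYERS`, `kernel_params`, `linear_params`, and `shape_trace` are hypothetical, and the overall total is a sum computed here, not a figure stated in the source.

```python
# Kernel counts and per-kernel weight counts are copied from the table.
# CONV_LAYERS, kernel_params, linear_params, and shape_trace are
# hypothetical helper names introduced only for this check.
CONV_LAYERS = {
    "conv1": (96, 27),   # (kernels, weights per kernel)
    "conv2": (32, 288),
    "conv3": (32, 96),
}


def kernel_params(kernels: int, weights_per_kernel: int) -> int:
    """Parameter count of a layer with one bias per kernel."""
    return kernels * (weights_per_kernel + 1)


def linear_params(in_features: int, out_features: int) -> int:
    """Weights plus one bias per output unit."""
    return in_features * out_features + out_features


def shape_trace(n_nodes: int) -> list[tuple[int, int]]:
    """Shapes after the input, conv1, conv2, conv3, pooling, and linear layers."""
    return [(n_nodes, 3), (n_nodes, 96), (n_nodes, 32),
            (n_nodes, 32), (1, 32), (1, 2)]


param_counts = {name: kernel_params(k, w) for name, (k, w) in CONV_LAYERS.items()}
param_counts["linear"] = linear_params(32, 2)
total_params = sum(param_counts.values())  # derived here, not stated in the table

if __name__ == "__main__":
    for name, count in param_counts.items():
        print(f"{name}: {count}")
    print(f"total: {total_params}")
    print(shape_trace(5))
```

Each per-layer value matches the table (2688, 9248, 3104, and 66). ELU, Dropout, pooling, and Log Softmax add no trainable parameters, so they appear only in the shape trace.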

| [1] | WAC M, SANTOS-RODRIGUEZ R, MCWILLIAMS C, et al. Capturing Requirements for a Data Annotation Tool for Intensive Care: Experimental User-Centered Design Study[EB/OL]. (2023-09-28)[2024-11-15]. https://arxiv.org/abs/2309.16500. |

| [2] | DAI Enyan, ZHAO Tianxiang, ZHU Huaisheng, et al. A Comprehensive Survey on Trustworthy Graph Neural Networks: Privacy, Robustness, Fairness, and Explainability[J]. Machine Intelligence Research, 2024, 21(6): 1011-1061. |

| [3] | WU Zonghan, PAN Shirui, CHEN Fengwen, et al. A Comprehensive Survey on Graph Neural Networks[J]. IEEE Transactions on Neural Networks and Learning Systems, 2020, 32(1): 4-24. |

| [4] | DILLON T W, LENDING D. Will They Adopt? Effects of Privacy and Accuracy[J]. Journal of Computer Information Systems, 2010, 50(4): 20-29. |

| [5] | ZHOU Jie, CUI Ganqu, HU Shengding, et al. Graph Neural Networks: A Review of Methods and Applications[J]. AI Open, 2020, 1: 57-81. |

| [6] | LIU Zhiyuan, ZHOU Jie. Graph Attention Networks[M]. Beijing: Posts & Telecom Press, 2021. |

| [7] | ZHANG Si, TONG Hanghang, XU Jiejun, et al. Graph Convolutional Networks: A Comprehensive Review[EB/OL]. (2019-09-10)[2024-11-15]. https://doi.org/10.1186/s40649-019-0069-y. |

| [8] | DWORK C. Differential Privacy[M]. Heidelberg: Springer, 2006. |

| [9] | DWORK C, ROTH A. The Algorithmic Foundations of Differential Privacy[J]. Foundations and Trends® in Theoretical Computer Science, 2014, 9(3-4): 211-407. |

| [10] | DWORK C, MCSHERRY F, NISSIM K, et al. Calibrating Noise to Sensitivity in Private Data Analysis[C]// Springer. Proceedings of the Third Conference on Theory of Cryptography. Heidelberg: Springer, 2006: 265-284. |

| [11] | WEN Jie, ZHANG Zhixia, LAN Yang, et al. A Survey on Federated Learning: Challenges and Applications[J]. International Journal of Machine Learning and Cybernetics, 2022, 14(2): 513-535. |

| [12] | YU Da, ZHANG Huishuai, CHEN Wei, et al. Large Scale Private Learning via Low-Rank Reparametrization[C]// PMLR. International Conference on Machine Learning. New York: PMLR, 2021: 12208-12218. |

| [13] | UNIYAL A, NAIDU R, KOTTI S, et al. DP-SGD vs PATE: Which Has Less Disparate Impact on Model Accuracy?[EB/OL]. (2024-03-19)[2024-11-15]. https://ar5iv.labs.arxiv.org/html/2106.12576. |

| [14] | CHEN Jing, ZHANG Jian. A Data-Free Personalized Federated Learning Algorithm Based on Knowledge Distillation[J]. Netinfo Security, 2024, 24(10): 1562-1569. |

| 陈婧, 张健. 基于知识蒸馏的无数据个性化联邦学习算法[J]. 信息网络安全, 2024, 24(10):1562-1569. | |

| [15] | LIU Zilong, WANG Xuequn. How to Regulate Individuals’ Privacy Boundaries on Social Network Sites: A Cross-Cultural Comparison[J]. Information & Management, 2018, 55(8): 1005-1023. |

| [16] | INAN A, GURSOY M E, SAYGIN Y. Sensitivity Analysis for Non-Interactive Differential Privacy: Bounds and Efficient Algorithms[J]. IEEE Transactions on Dependable and Secure Computing, 2017(1): 194-207. |

| [17] | ABADI M, CHU A, GOODFELLOW I, et al. Deep Learning with Differential Privacy[C]// ACM. Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security. New York: ACM, 2016: 308-318. |

| [18] | PAPERNOT N, ABADI M, ERLINGSSON Ú, et al. Semi-Supervised Knowledge Transfer for Deep Learning from Private Training Data[EB/OL]. (2017-02-06)[2024-11-15]. https://arxiv.org/abs/1610.05755v4. |

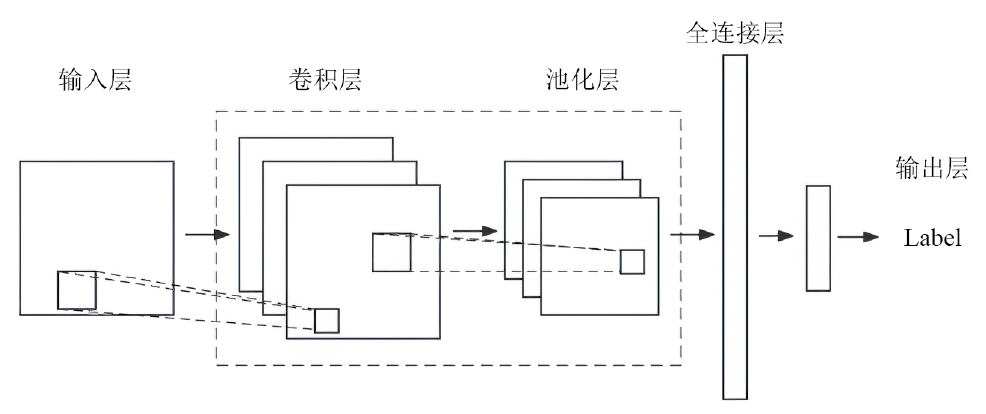

| [19] | KRICHEN M. Convolutional Neural Networks: A Survey[EB/OL]. (2023-06-17)[2024-11-15]. https://doi.org/10.3390/computers12080151. |

| [20] | ZHAO Xia, WANG Limin, ZHANG Yufei, et al. A Review of Convolutional Neural Networks in Computer Vision[EB/OL]. (2024-03-23)[2024-11-25]. https://doi.org/10.1007/s10462-024-10721-6. |

| [21] | VELICKOVIC P, CUCURULL G, CASANOVA A, et al. Graph Attention Networks[EB/OL]. (2017-10-30)[2024-11-15]. https://arxiv.org/abs/1710.10903. |

| [22] | NGONG I. Maintaining Privacy in Medical Data with Differential Privacy[EB/OL]. (2020-03-12)[2024-11-15]. https://openmined.org/blog/maintaining-privacy-in-medical-data-with-differential-privacy. |

| [23] | LIU Weikang, ZHANG Yanchun, YANG Hong, et al. A Survey on Differential Privacy for Medical Data Analysis[J]. Annals of Data Science, 2024, 11(2): 733-747. |

| [24] | WEI Li, DUAN Qin, LIU Zhiwei. A Summary of Medical Information Security Management in Internet Era[J]. Netinfo Security, 2019, 19(12): 88-92. |

| 韦力, 段沁, 刘志伟. 互联网时代医院网络安全管理综述[J]. 信息网络安全, 2019, 19(12):88-92. | |

| [25] | YAN Haicao, YIN Menghan, YAN Chaokun, et al. A Survey of Privacy Preserving Methods Based on Differential Privacy for Medical Data[C]// IEEE. 2024 7th World Conference on Computing and Communication Technologies (WCCCT). New York: IEEE, 2024: 104-108. |

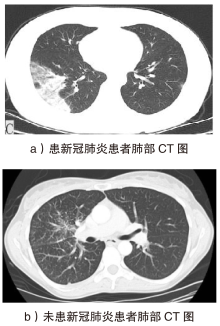

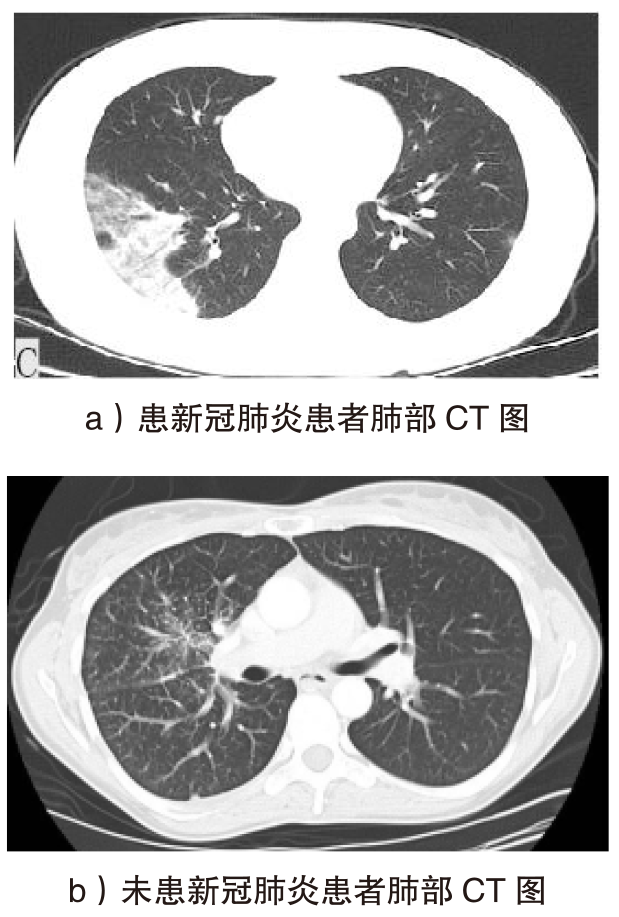

| [26] | YANG Xingyi, HE Xuehai, ZHAO Jinyu, et al. COVID-CT-Dataset:A CT Scan Dataset about COVID-19[EB/OL]. (2020-03-30)[2024-11-15]. https://arxiv.org/abs/2003.13865v3. |

| [27] |

YANG Feng, POOSTCHI M, YU Hang, et al. Deep Learning for Smartphone-Based Malaria Parasite Detection in Thick Blood Smears[J]. IEEE Journal of Biomedical and Health Informatics, 2020, 24(5): 1427-1438.

doi: 10.1109/JBHI.2019.2939121 pmid: 31545747 |

| [28] | KASSIM Y M, YANG Feng, YU Hang, et al. Diagnosing Malaria Patients with Plasmodium Falciparum and Vivax Using Deep Learning for Thick Smear Images[EB/OL]. (2021-10-27)[2024-11-15]. https://doi.org/10.3390/diagnostics11111994. |

| [29] | KASSIM Y M, PALANIAPPAN K, YANG Feng, et al. Clustering-Based Dual Deep Learning Architecture for Detecting Red Blood Cells in Malaria Diagnostic Smears[J]. IEEE Journal of Biomedical and Health Informatics, 2020, 25(5): 1735-1746. |

| [30] | YANG Feng, QUIZON N, YU Hang, et al. Cascading YOLO: Automated Malaria Parasite Detection for Plasmodium Vivax in Thin Blood Smears[C]// SPIE. Medical Imaging 2020:Computer-Aided Diagnosis. Bellingham: SPIE, 2020: 404-410. |

| [31] | RAJARAMAN S, ANTANI S K, POOSTCHI M, et al. Pre-Trained Convolutional Neural Networks as Feature Extractors Toward Improved Malaria Parasite Detection in Thin Blood Smear Images[EB/OL]. (2018-04-16)[2024-11-15]. https://doi.org/10.7717/peerj.4568. |

