Netinfo Security ›› 2025, Vol. 25 ›› Issue (5): 767-777. doi: 10.3969/j.issn.1671-1122.2025.05.009

Universal Perturbations Generation Method Based on High-Level Features and Important Channels

- School of Computer Science, Beijing University of Technology, Beijing 100124, China

- Received: 2025-02-19; Online: 2025-05-10; Published: 2025-06-10

- Corresponding author: TAO Kejin, taokejin@emails.bjut.edu.cn

- About the authors: ZHANG Xinglan (b. 1970), female, from Shanxi, professor, Ph.D.; research interests include artificial intelligence security, quantum computing, and cryptography. TAO Kejin (b. 2000), male, from Shandong, master's student; research interests include adversarial examples and artificial intelligence security.

- Supported by: National Natural Science Foundation of China (62202017)

摘要:

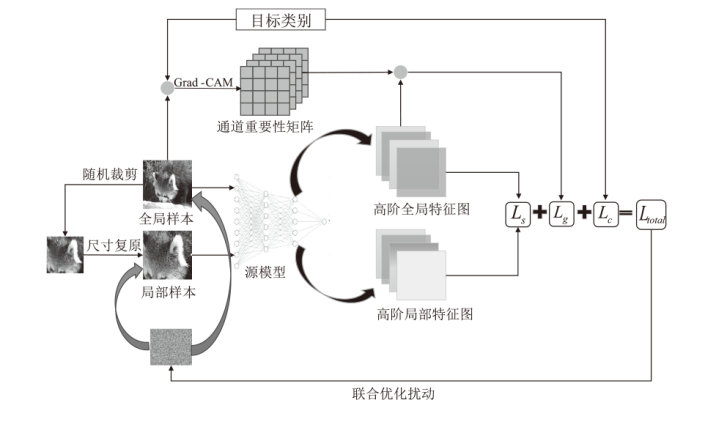

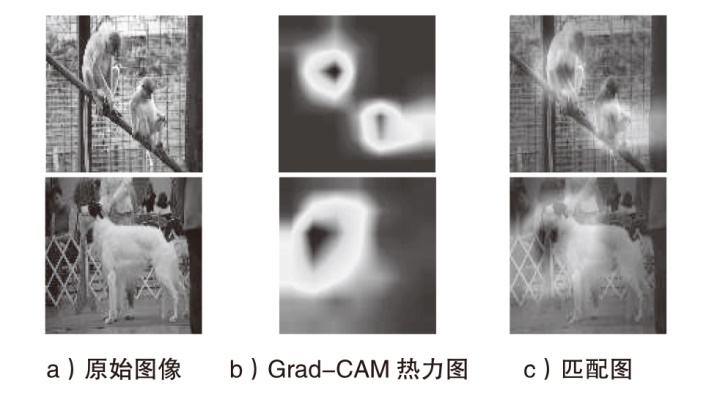

以深度卷积神经网络(DCNN)为代表的深度神经网络模型在面对精心设计的对抗样本时,往往存在鲁棒性不足的问题。在现有的攻击方法中,基于梯度的对抗样本生成方法常常因过度拟合白盒模型而缺乏跨模型的迁移攻击能力。针对这一问题,文章提出一种基于高阶特征与重要通道的通用性扰动生成方法来提高对抗样本的可迁移性。文章基于高阶特征深度挖掘设计了3种损失模块。首先,通过干净样本对指定类别的类别梯度矩阵与对抗样本的高阶特征图相乘得到高阶特征重要通道损失,以此来引导对抗样本在高阶特征重点区域的变动趋势。其次,通过计算全局高阶特征矩阵与局部高阶特征矩阵的相似度作为高阶特征相似度损失,控制扰动对高阶特征的引导方向。最后,由分类损失控制目标攻击时扰动优化的总体方向。该方法在梯度更新过程中可与DIM、TIM、SIM等梯度更新策略联合训练扰动。通过在ImageNet与Fashion MNIST数据集上,针对多种正常训练与对抗训练的不同架构DCNN模型进行实验与测试,结果表明,该方法生成的对抗样本攻击迁移性显著优于现有的基于梯度的对抗样本生成方法。
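The three loss terms described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract gives no formulas, so the reduction (a plain sum of the element-wise product), the use of cosine similarity, the sign of each term, and the assignment of the weights `(1, 1, 10)` to the three terms are all assumptions made for illustration. All function names are hypothetical, and feature maps are represented as lists of flattened channels.

```python
import math

def important_channel_loss(class_grad, adv_feat):
    """Sum of the element-wise product between the clean sample's class
    gradient and the adversarial example's feature map (assumed reduction)."""
    return sum(g * f
               for g_ch, f_ch in zip(class_grad, adv_feat)
               for g, f in zip(g_ch, f_ch))

def feature_similarity_loss(global_feat, local_feat):
    """Cosine similarity between flattened global and local feature maps
    (cosine similarity is an assumed choice of similarity measure)."""
    a = [v for ch in global_feat for v in ch]
    b = [v for ch in local_feat for v in ch]
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-12)  # epsilon avoids division by zero

def targeted_cross_entropy(logits, target):
    """Cross-entropy of the logits with respect to the target class,
    computed with the log-sum-exp trick for numerical stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def total_loss(class_grad, adv_feat, global_feat, local_feat,
               logits, target, weights=(1.0, 1.0, 10.0)):
    """Weighted sum of the three terms; which weight belongs to which
    term, and the sign of each term, are assumptions."""
    w_ch, w_sim, w_cls = weights
    return (w_ch * important_channel_loss(class_grad, adv_feat)
            + w_sim * feature_similarity_loss(global_feat, local_feat)
            + w_cls * targeted_cross_entropy(logits, target))

# Toy example: 2 channels with 2 spatial positions each.
class_grad = [[1.0, 0.0], [0.5, 0.5]]
adv_feat = [[2.0, 3.0], [4.0, 0.0]]
print(total_loss(class_grad, adv_feat, adv_feat, adv_feat, [0.0, 0.0], 0))
```

In a real attack these quantities would come from a surrogate network's intermediate layer and the perturbation would be updated from the gradient of the combined loss, optionally with DIM, TIM, or SIM applied to the input.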

Cite this article

ZHANG Xinglan, TAO Kejin. Universal Perturbations Generation Method Based on High-Level Features and Important Channels[J]. Netinfo Security, 2025, 25(5): 767-777.

Table 1

Attack success rates on the ImageNet dataset under different weight ratios

| Weight ratio | VN-16 | DN-121 | EN-B0 | ViT | Inc-v3-ens3 | Inc-v3-ens4 |
|---|---|---|---|---|---|---|
| 1:1:5 | 69.9% | 80.1% | 66.2% | 54.0% | 18.8% | 15.9% |
| 1:1:10 | 74.1% | 87.4% | 71.9% | 60.1% | 22.1% | 20.9% |
| 1:1:15 | 71.2% | 82.4% | 70.5% | 58.3% | 20.2% | 18.7% |
| 5:1:1 | 65.6% | 70.3% | 63.6% | 55.9% | 15.0% | 13.4% |
| 1:5:1 | 61.9% | 64.6% | 60.1% | 51.6% | 12.9% | 11.5% |

表3

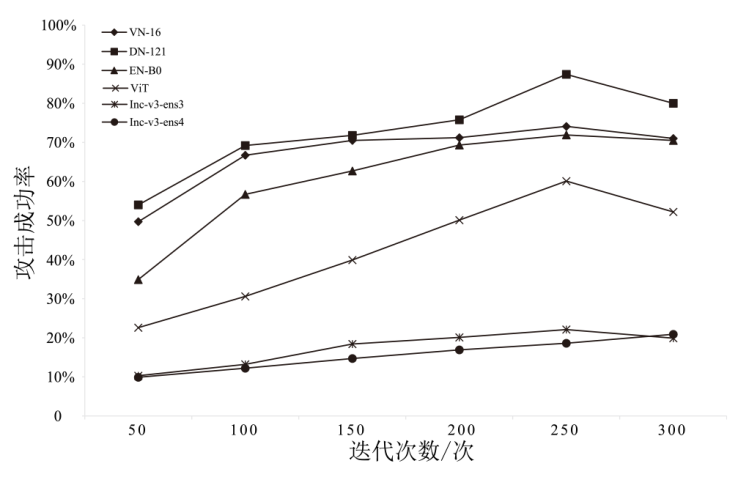

不同方法在ImageNet数据集上的攻击成功率

| 方法 | VN-16 | DN-121 | EN-B0 | ViT | Inc-v3-ens3 | Inc-v3-ens4 |

|---|---|---|---|---|---|---|

| FGSM | 20.5% | 30.5% | 19.8% | 11.2% | 6.2% | 5.0% |

| BIM | 27.3% | 36.6% | 25.3% | 14.3% | 10.3% | 11.2% |

| MI-FGSM | 47.8% | 50.7% | 33.6% | 22.8% | 17.3% | 15.7% |

| SGM | 55.9% | 63.0% | 58.7% | 44.7% | 12.3% | 10.9% |

| BN-MI-FGSM | 61.4% | 64.2% | 62.5% | 49.6% | 16.2% | 15.1% |

| 本文方法 | 74.1% | 87.4% | 71.9% | 60.1% | 22.1% | 20.9% |

表4

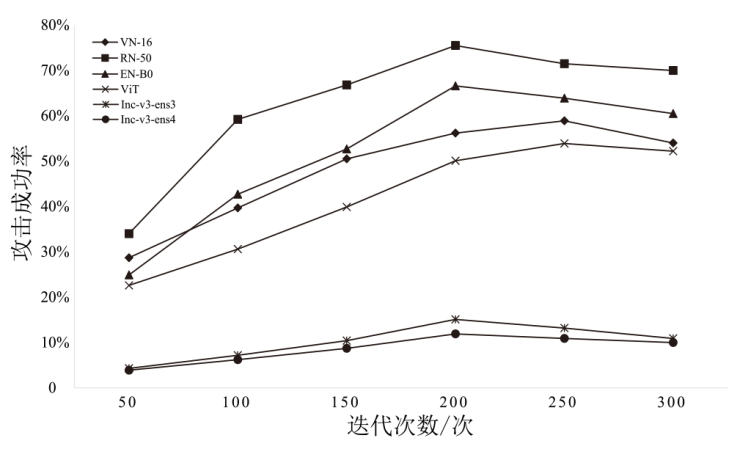

不同方法在ImageNet数据集上的攻击成功率

| 方法 | VN-16 | RN-50 | EN-B0 | ViT | Inc-v3-ens3 | Inc-v3-ens4 |

|---|---|---|---|---|---|---|

| FGSM | 10.2% | 14.3% | 17.9% | 9.4% | 4.6% | 2.0% |

| BIM | 14.2% | 21.7% | 22.3% | 15.3% | 11.4% | 10.7% |

| MI-FGSM | 30.3% | 32.5% | 32.4% | 28.8% | 19.2% | 14.7% |

| SGM | 45.9% | 50.1% | 52.4% | 39.5% | 14.6% | 15.3% |

| BN-MI-FGSM | 43.4% | 54.7% | 59.8% | 41.6% | 18.2% | 16.0% |

| 本文方法 | 55.2% | 69.3% | 66.3% | 52.4% | 21.4% | 19.2% |

Table 5

Attack success rates of different methods on the Fashion MNIST dataset

| Method | VN-16 | DN-121 | EN-B0 | ViT | Inc-v3-ens3 | Inc-v3-ens4 |
|---|---|---|---|---|---|---|
| FGSM | 13.6% | 28.5% | 14.7% | 10.6% | 14.2% | 13.5% |
| BIM | 18.6% | 37.6% | 21.6% | 13.3% | 27.3% | 22.3% |
| MI-FGSM | 43.2% | 49.0% | 39.8% | 34.2% | 22.7% | 27.8% |
| SGM | 56.0% | 55.1% | 54.3% | 45.7% | 29.0% | 26.4% |
| BN-MI-FGSM | 65.3% | 67.7% | 50.6% | 60.4% | 31.4% | 25.9% |
| Proposed method | 72.7% | 84.8% | 75.3% | 61.7% | 30.8% | 30.9% |

Table 6

Attack success rates of different methods on the Fashion MNIST dataset

| Method | VN-16 | RN-50 | EN-B0 | ViT | Inc-v3-ens3 | Inc-v3-ens4 |
|---|---|---|---|---|---|---|
| FGSM | 12.2% | 15.1% | 11.5% | 9.2% | 3.5% | 4.3% |
| BIM | 14.4% | 20.7% | 15.4% | 16.9% | 7.8% | 9.4% |
| MI-FGSM | 31.3% | 35.5% | 29.9% | 25.1% | 11.1% | 10.9% |
| SGM | 40.9% | 42.8% | 49.3% | 50.1% | 12.3% | 12.7% |
| BN-MI-FGSM | 41.3% | 59.4% | 46.7% | 48.8% | 10.8% | 11.3% |
| Proposed method | 58.9% | 75.5% | 66.6% | 53.9% | 13.2% | 11.9% |

