Netinfo Security, 2025, Vol. 25, Issue (6): 920-932. doi: 10.3969/j.issn.1671-1122.2025.06.007

A Masking-Based Selective Federated Distillation Scheme


Corresponding author: LIU Keqian, 2753224405@qq.com

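About the authors: ZHU Shuaishuai (b. 1985), male, from Shandong, professor, Ph.D.; research interests: post-quantum cryptography, artificial intelligence security, and privacy protection. LIU Keqian (b. 2000), male, from Sichuan, master's student; research interest: data privacy protection.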

Funding: Natural Science Basic Research Program of Shaanxi Province (2024JC-YBMS-546)


ZHU Shuaishuai<sup>1,2</sup>, LIU Keqian<sup>2</sup>

1. Key Laboratory of Network and Information Security under PAP, Xi’an 710086, China

2. College of Cryptography Engineering, Engineering University of PAP, Xi’an 710086, China

Received: 2025-03-26; Online: 2025-06-10; Published: 2025-07-11

Abstract:

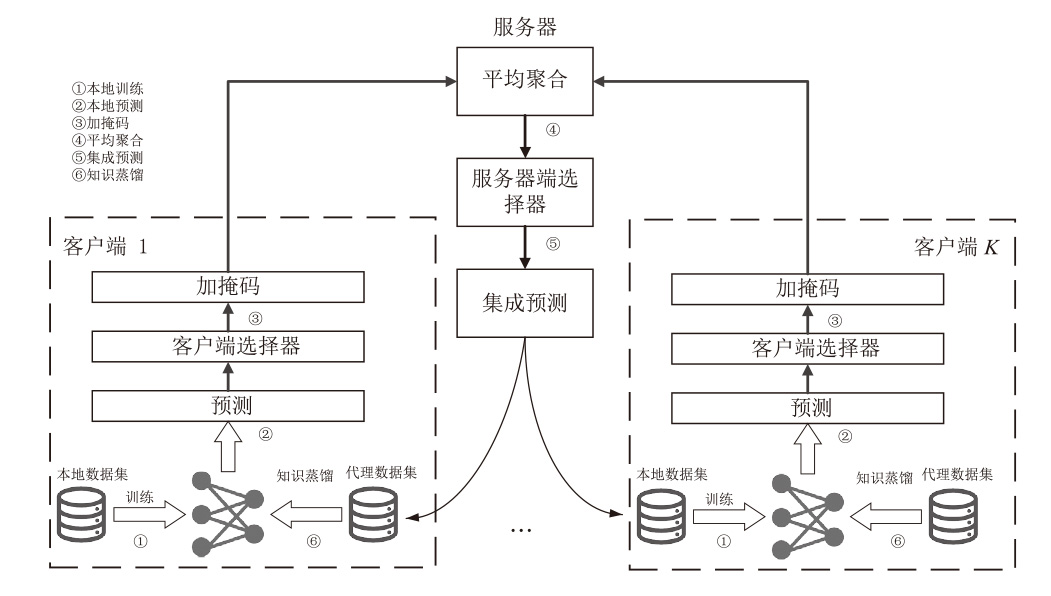

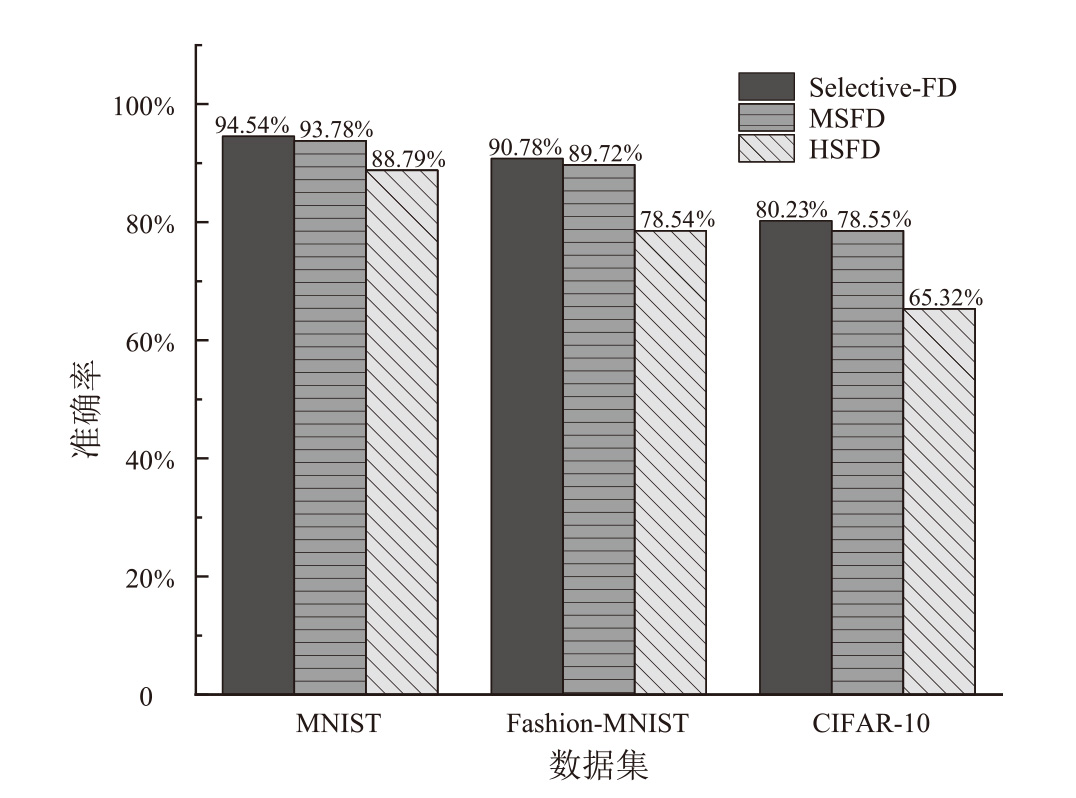

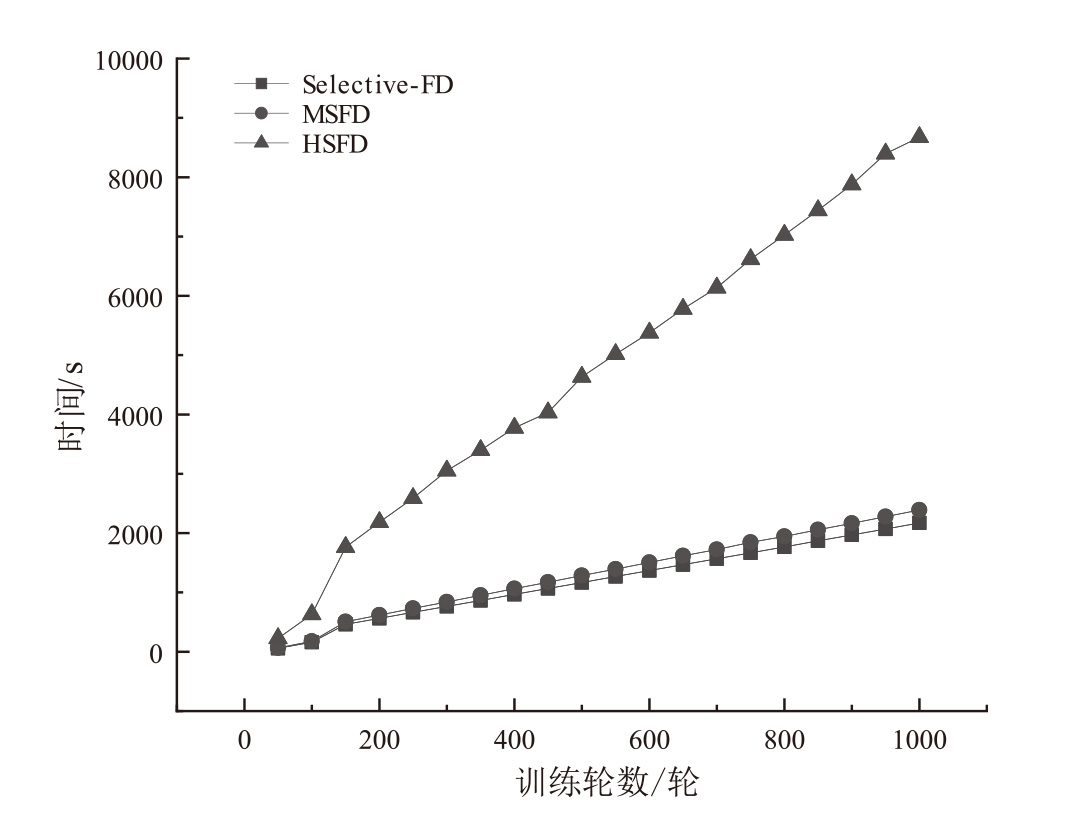

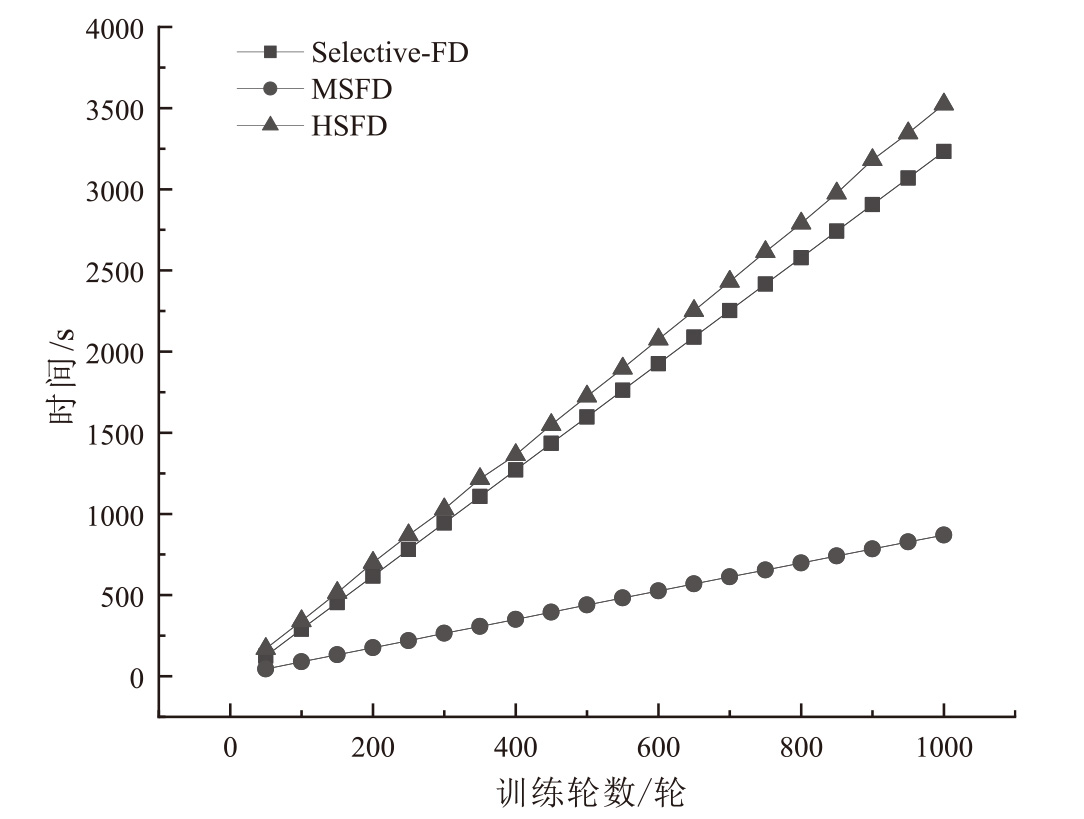

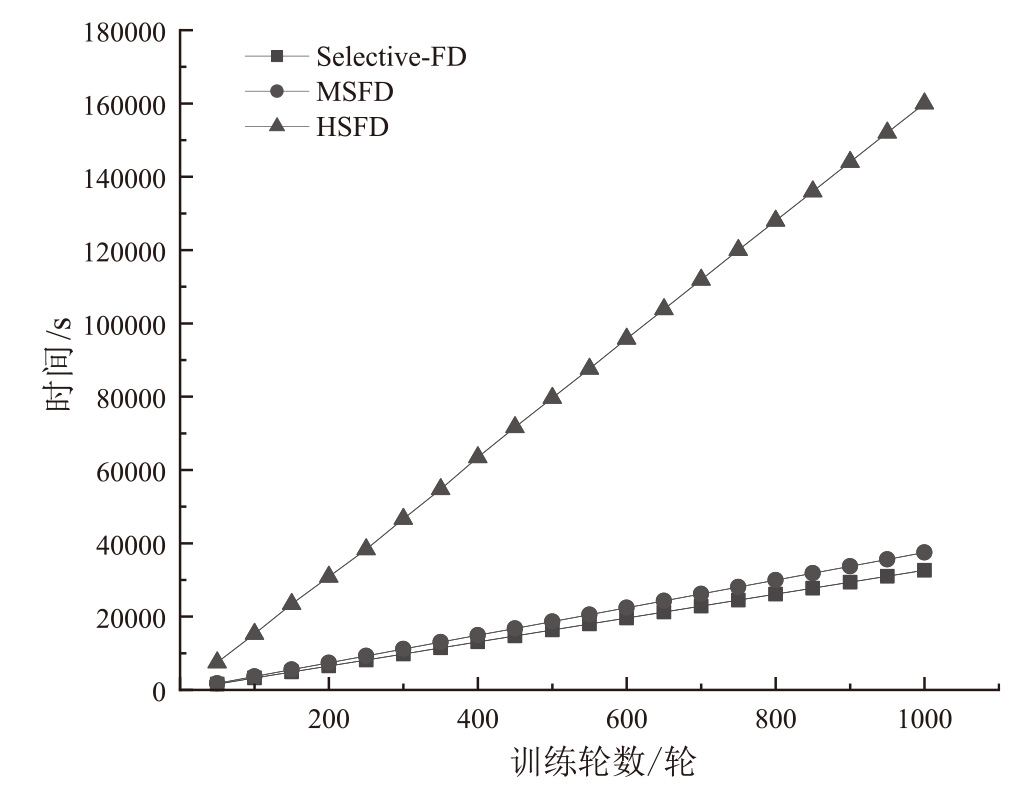

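As machine learning technology advances, privacy protection has received growing attention. Federated learning, a distributed machine learning framework, has been widely adopted, yet in practice it still faces two challenges: privacy leakage and low efficiency. To address them, this paper proposes a masking-based selective federated distillation (MSFD) scheme. Because federated distillation transfers knowledge rather than model parameters, the scheme effectively resists white-box attacks while also reducing communication overhead. In selective federated distillation, soft labels are shared in plaintext and are therefore exposed to black-box attacks; MSFD addresses this weakness by introducing an AES-encrypted mask mechanism into the shared soft labels, which markedly improves resistance to black-box attacks and thus the security of selective federated distillation. Specifically, dynamic encrypted masks are embedded in each client's soft labels to obfuscate private information, and, combined with negotiation over a secret channel and per-round key updates, they substantially reduce the risk of black-box attacks while preserving model performance, balancing the security and communication efficiency of federated learning. Security analysis and experimental results show that, on multiple datasets, MSFD significantly lowers the success rate of black-box attacks while maintaining classification accuracy, effectively strengthening privacy protection.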
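The abstract describes masking each client's soft labels with AES-derived masks and refreshing keys every round, but it does not say how the server still obtains usable aggregated soft labels. The sketch below illustrates one common way such masks can work, assuming pairwise masks that cancel when the server sums all uploads (the secure-aggregation style of masking); this is an assumption for illustration, not the paper's MSFD construction. Because Python's standard library has no AES, HMAC-SHA256 in counter mode stands in as the keyed pseudorandom generator. Pairwise keys are assumed to have been agreed beforehand over a secure channel, per-sample selective sharing and client dropout are not modeled, and every name (`round_key`, `prf_stream`, `mask_soft_labels`, `aggregate`, `make_pair_keys`, `keys_for`, `MOD`, `SCALE`) is hypothetical.

```python
import hashlib
import hmac
import secrets

MOD = 2**32    # masked values live in the ring of integers modulo 2^32
SCALE = 2**16  # fixed-point scale: a probability p is encoded as round(p * SCALE)


def round_key(pair_key, rnd):
    """Derive a fresh per-round key from a long-term pairwise key (hypothetical KDF)."""
    return hmac.new(pair_key, b"round" + rnd.to_bytes(4, "big"), hashlib.sha256).digest()


def prf_stream(key, n):
    """Return n pseudorandom 32-bit words; HMAC-SHA256 in counter mode stands in for AES-CTR."""
    words = []
    counter = 0
    while len(words) < n:
        block = hmac.new(key, counter.to_bytes(8, "big"), hashlib.sha256).digest()
        words.extend(int.from_bytes(block[i:i + 4], "big") for i in range(0, 32, 4))
        counter += 1
    return words[:n]


def mask_soft_labels(cid, soft_labels, pair_keys, rnd):
    """Encode a flat list of probabilities and add one mask per peer.

    For each pair (i, j) with i < j, client i adds the shared stream and
    client j subtracts it, so the masks cancel when the server sums uploads.
    """
    masked = [round(p * SCALE) % MOD for p in soft_labels]
    for other, key in pair_keys.items():
        stream = prf_stream(round_key(key, rnd), len(masked))
        sign = 1 if cid < other else -1
        masked = [(m + sign * s) % MOD for m, s in zip(masked, stream)]
    return masked


def aggregate(uploads):
    """Server side: sum the masked uploads modulo 2^32 and decode the average."""
    n_clients = len(uploads)
    totals = [sum(column) % MOD for column in zip(*uploads)]
    return [t / SCALE / n_clients for t in totals]


def make_pair_keys(client_ids):
    """Hypothetical setup: one random 32-byte key per client pair, assumed pre-shared."""
    return {(i, j): secrets.token_bytes(32)
            for i in client_ids for j in client_ids if i < j}


def keys_for(cid, client_ids, shared):
    """Collect the keys client `cid` shares with every other client."""
    return {o: shared[(min(cid, o), max(cid, o))] for o in client_ids if o != cid}


if __name__ == "__main__":
    clients = [0, 1, 2]
    shared = make_pair_keys(clients)
    soft = {0: [0.7, 0.2, 0.1], 1: [0.6, 0.3, 0.1], 2: [0.5, 0.25, 0.25]}
    uploads = [mask_soft_labels(c, soft[c], keys_for(c, clients, shared), rnd=1)
               for c in clients]
    print(uploads[0])          # masked words; on its own this hides soft[0]
    print(aggregate(uploads))  # close to the plain average [0.6, 0.25, 0.15]
```

Each upload on its own is indistinguishable from random words modulo 2^32, yet the server's sum recovers the average soft label up to fixed-point rounding. Deriving a fresh key each round keeps the masks from repeating, so an observer cannot subtract two rounds' uploads from the same client to strip the mask.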

CLC number:

Cite this article


ZHU Shuaishuai, LIU Keqian. A Masking-Based Selective Federated Distillation Scheme[J]. Netinfo Security, 2025, 25(6): 920-932.


