Netinfo Security, 2024, Vol. 24, Issue (1): 69-79. doi: 10.3969/j.issn.1671-1122.2024.01.007

Research on Centralized Differential Privacy Algorithm for Federated Learning

XU Ruzhi, DAI Lipeng, XIA Diya, YANG Xin

School of Control and Computer Engineering, North China Electric Power University, Beijing 102200, China

Received: 2023-08-20  Online: 2024-01-10  Published: 2024-01-24

Corresponding author: DAI Lipeng  E-mail: dlpdaniel1234@163.com

About the authors:
XU Ruzhi (born 1966), female, from Jiangxi, professor, Ph.D.; research interests: AI security, smart grid.
DAI Lipeng (born 1999), male, from Anhui, master's student; research interest: federated learning.
XIA Diya (born 1999), female, from Xinjiang, master's student; research interests: federated learning, data reconstruction attacks.
YANG Xin (born 1996), female, from Anhui, master's student; research interest: differential privacy protection.

Funding: National Natural Science Foundation of China (61972148)

Abstract:

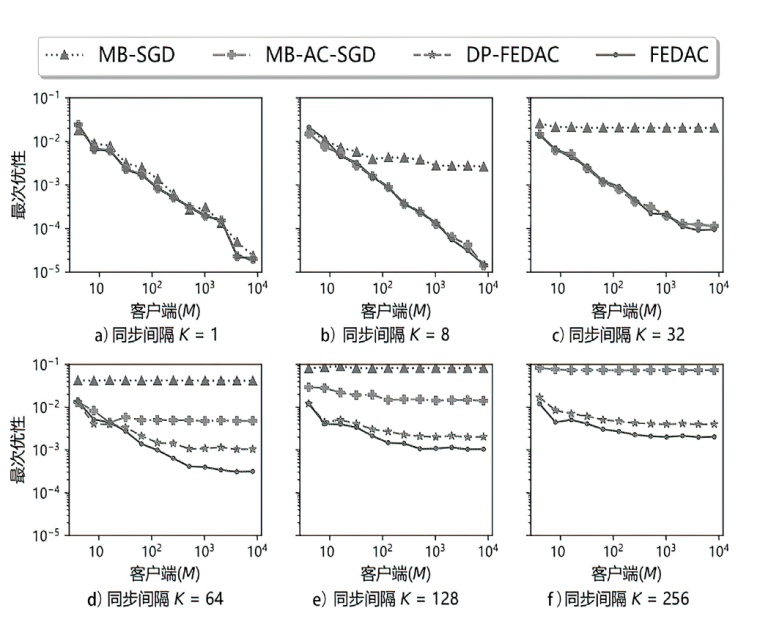

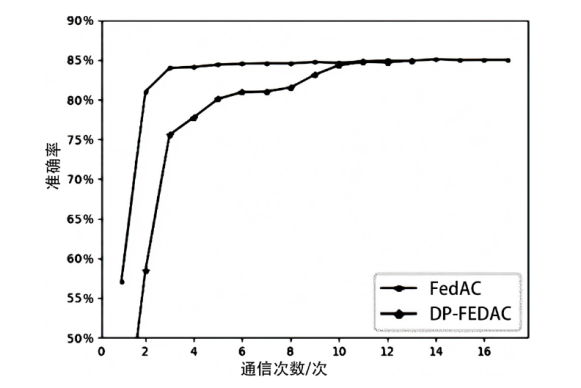

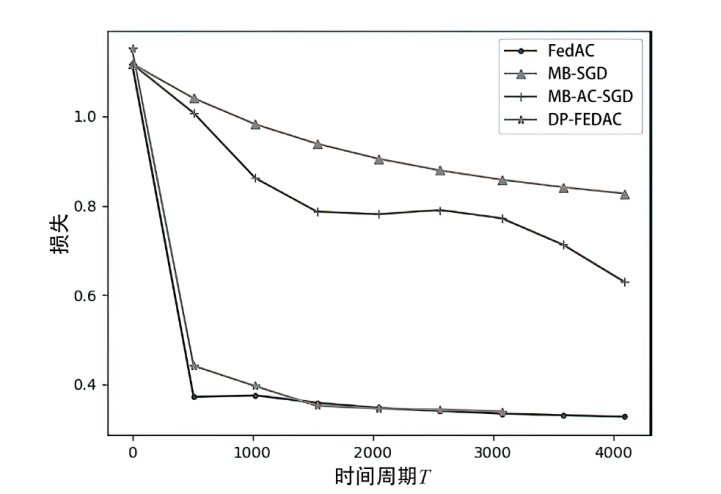

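In recent years, federated learning has attracted growing attention because its distinctive training paradigm breaks down data "silos". However, while the global model is being trained, federated learning is vulnerable to inference attacks that can leak information about the members participating in training, posing serious security risks. To counter differential attacks mounted by semi-honest or malicious clients during federated training, this paper proposes DP-FEDAC, a federated learning algorithm based on centralized differential privacy. First, the federated accelerated stochastic gradient descent algorithm (FedAC) is optimized and the server's aggregation scheme is improved: the server computes the differences between parameter updates and then updates the global model by gradient aggregation, which improves convergence stability. Second, centralized Gaussian noise is added to the aggregated parameters to hide the contributions of the participating members and thereby protect their private information; the moments accountant (MA) is introduced to compute the privacy loss, further balancing model convergence against privacy loss. Finally, comparative experiments against FedAC, distributed MB-SGD, and distributed MB-AC-SGD evaluate the overall performance of DP-FEDAC. The experimental results show that when communication is infrequent, the linear speedup of DP-FEDAC is the closest to that of FedAC and far better than that of the other two algorithms, indicating good robustness. Moreover, DP-FEDAC achieves the same model accuracy as FedAC while protecting privacy, which demonstrates the algorithm's advantages and practicality.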
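The abstract describes the server-side privacy step only at a high level. The sketch below illustrates the general idea of centralized Gaussian noise on aggregated client update differences, assuming a DP-FedAvg-style client-level scheme (clip each client's model difference, add Gaussian noise to the sum, average, apply as a pseudo-gradient step). It is not the paper's exact DP-FEDAC update, which the abstract does not specify, and it omits FedAC's acceleration terms. All function and parameter names (`dp_server_aggregate`, `clip_norm`, `noise_multiplier`, `server_lr`) are hypothetical. For privacy accounting it swaps in a plain Rényi-DP bound for the Gaussian mechanism without subsampling amplification, a simpler and looser stand-in for the moments accountant mentioned above.

```python
import math
import random
from typing import List, Optional, Sequence

Vector = List[float]


def l2_norm(v: Sequence[float]) -> float:
    """Euclidean (L2) norm of a flat parameter vector."""
    return math.sqrt(sum(x * x for x in v))


def clip_update(update: Sequence[float], clip_norm: float) -> Vector:
    """Rescale `update` so that its L2 norm is at most `clip_norm`."""
    norm = l2_norm(update)
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def dp_server_aggregate(
    global_model: Sequence[float],
    client_models: Sequence[Sequence[float]],
    clip_norm: float,
    noise_multiplier: float,
    server_lr: float = 1.0,
    rng: Optional[random.Random] = None,
) -> Vector:
    """One round of server-side (centralized) Gaussian DP aggregation.

    Hypothetical sketch: assumes add/remove-one-client adjacency, so after
    clipping, the L2 sensitivity of the summed differences is clip_norm.
    """
    if rng is None:
        rng = random.Random()
    n = len(client_models)
    dim = len(global_model)
    # Parameter-update difference per client: local model minus global model.
    deltas = [[c - g for c, g in zip(cm, global_model)] for cm in client_models]
    # Clip each difference to bound any single client's influence on the sum.
    clipped = [clip_update(d, clip_norm) for d in deltas]
    # Add Gaussian noise (std = noise_multiplier * clip_norm) to the sum, then average.
    sigma = noise_multiplier * clip_norm
    noisy_mean = [
        (sum(d[i] for d in clipped) + rng.gauss(0.0, sigma)) / n for i in range(dim)
    ]
    # Apply the noisy mean difference to the global model as a pseudo-gradient step.
    return [g + server_lr * u for g, u in zip(global_model, noisy_mean)]


def gaussian_rdp_epsilon(
    noise_multiplier: float,
    rounds: int,
    delta: float,
    orders: Optional[Sequence[float]] = None,
) -> float:
    """(epsilon, delta) bound for `rounds` Gaussian releases, no subsampling.

    Per round, the Renyi DP of order alpha is alpha / (2 * z**2) for noise
    multiplier z. RDP composes additively over rounds, and converts to
    epsilon = rdp + log(1 / delta) / (alpha - 1), minimized over alpha.
    """
    if orders is None:
        orders = [1.0 + k / 10.0 for k in range(1, 100)] + list(range(12, 257))
    best = math.inf
    for alpha in orders:
        rdp = rounds * alpha / (2.0 * noise_multiplier ** 2)
        best = min(best, rdp + math.log(1.0 / delta) / (alpha - 1.0))
    return best
```

In use, one would call `dp_server_aggregate` once per communication round and, after training, call `gaussian_rdp_epsilon(noise_multiplier, rounds, delta)` to report the spent budget. Because this bound ignores client subsampling, a full moments accountant would generally report a smaller ε for the same noise level, which is one reason the abstract's MA-based accounting helps balance convergence against privacy loss.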


Cite this article:

XU Ruzhi, DAI Lipeng, XIA Diya, YANG Xin. Research on Centralized Differential Privacy Algorithm for Federated Learning[J]. Netinfo Security, 2024, 24(1): 69-79.

