Netinfo Security, 2023, Vol. 23, Issue (12): 10-20. doi: 10.3969/j.issn.1671-1122.2023.12.002


A Hierarchical Federated Learning Framework Based on Shared Dataset and Gradient Compensation

LIU Jiqiang, WANG Xuewei, LIANG Mengqing, WANG Jian

Beijing Key Laboratory of Security and Privacy in Intelligent Transportation, Beijing Jiaotong University, Beijing 100044, China

Received: 2023-10-07; Online: 2023-12-10; Published: 2023-12-13


Cite this article

LIU Jiqiang, WANG Xuewei, LIANG Mengqing, WANG Jian. A Hierarchical Federated Learning Framework Based on Shared Dataset and Gradient Compensation[J]. Netinfo Security, 2023, 23(12): 10-20.


URL: http://netinfo-security.org/EN/10.3969/j.issn.1671-1122.2023.12.002

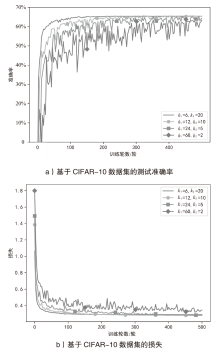

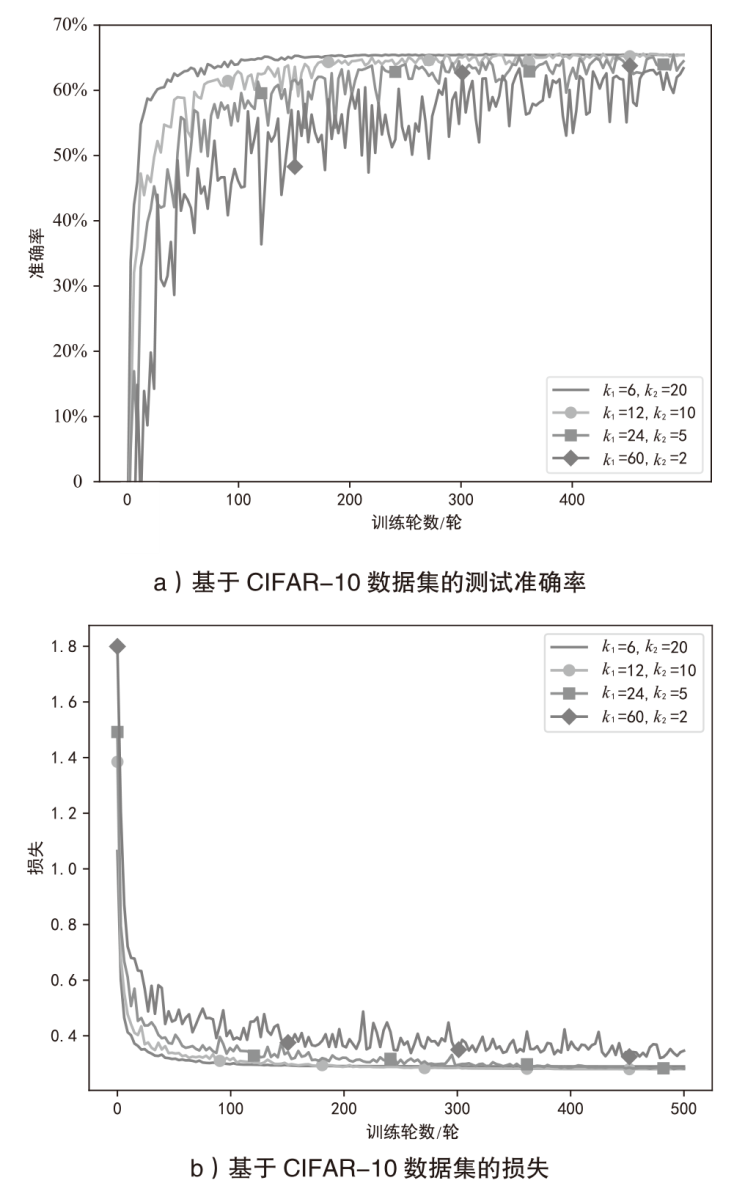

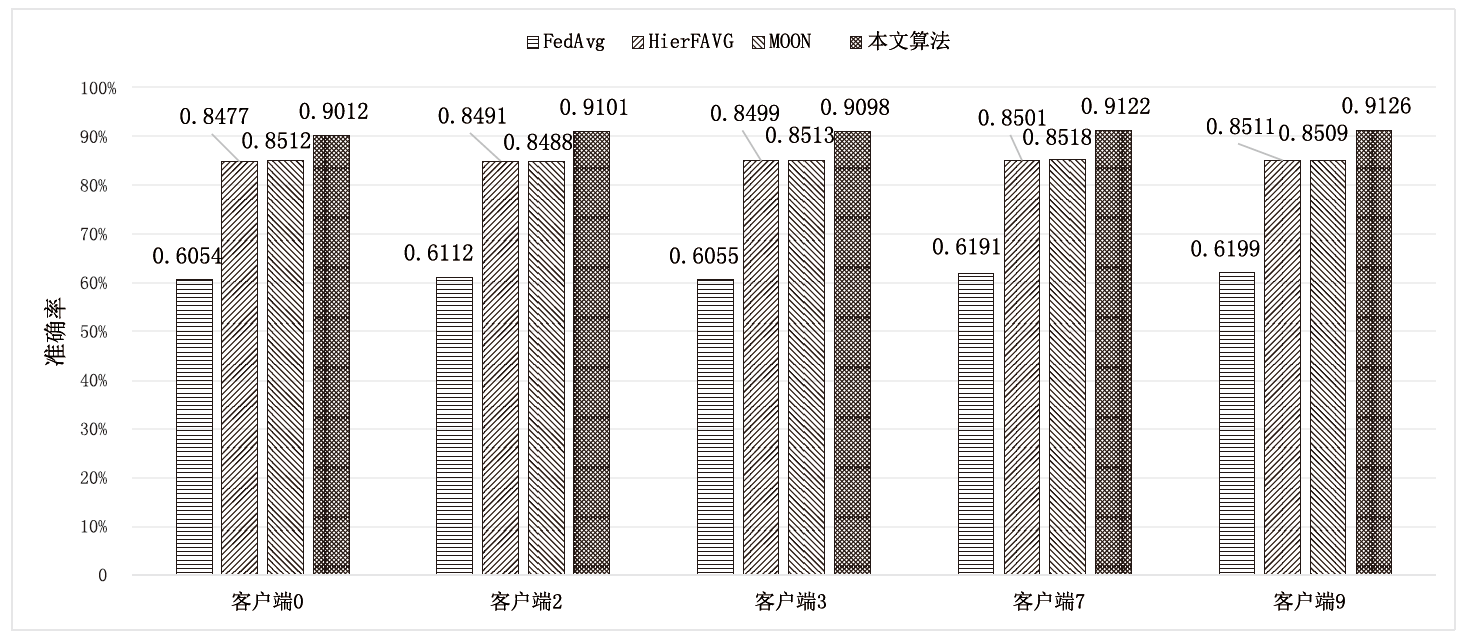

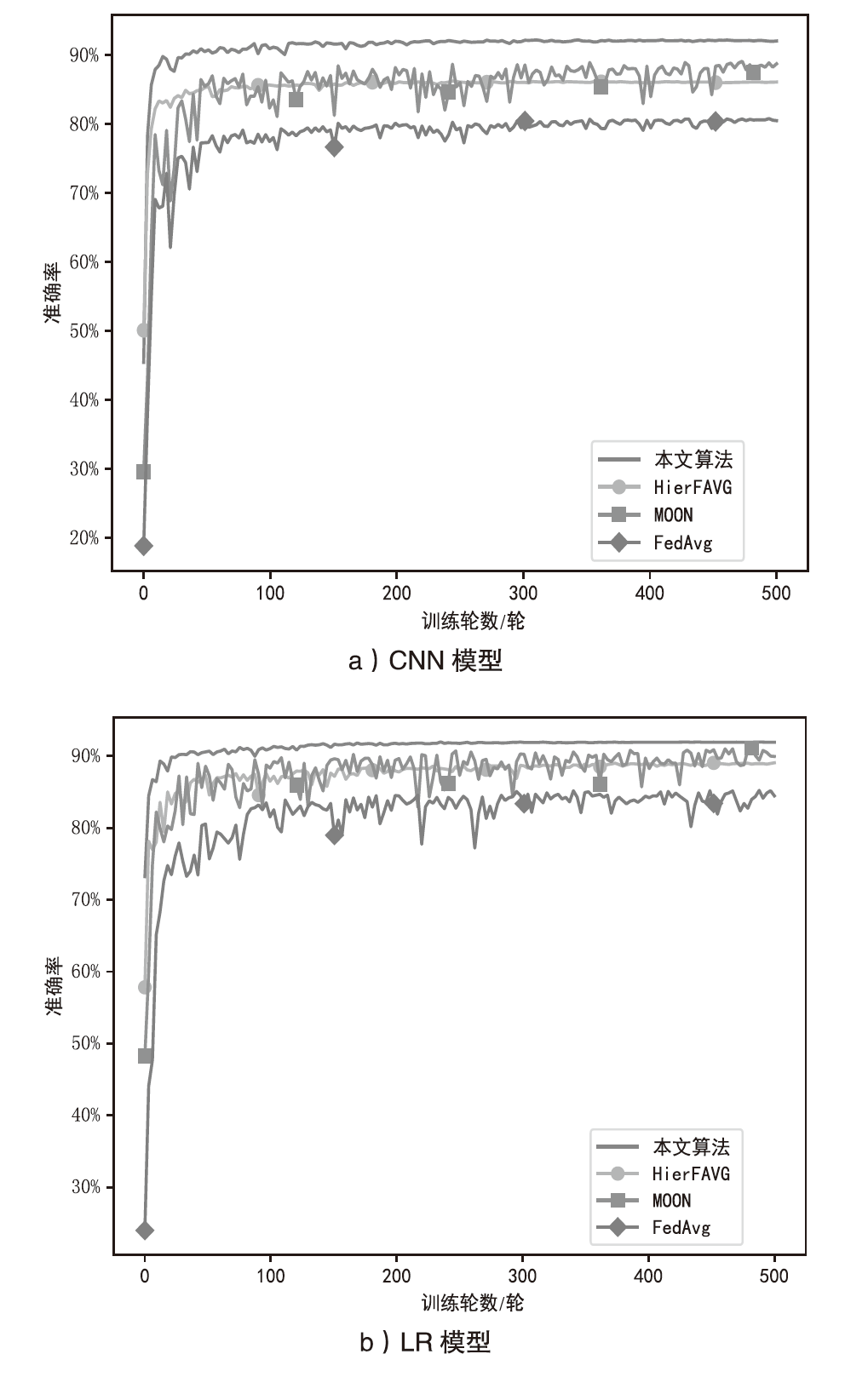

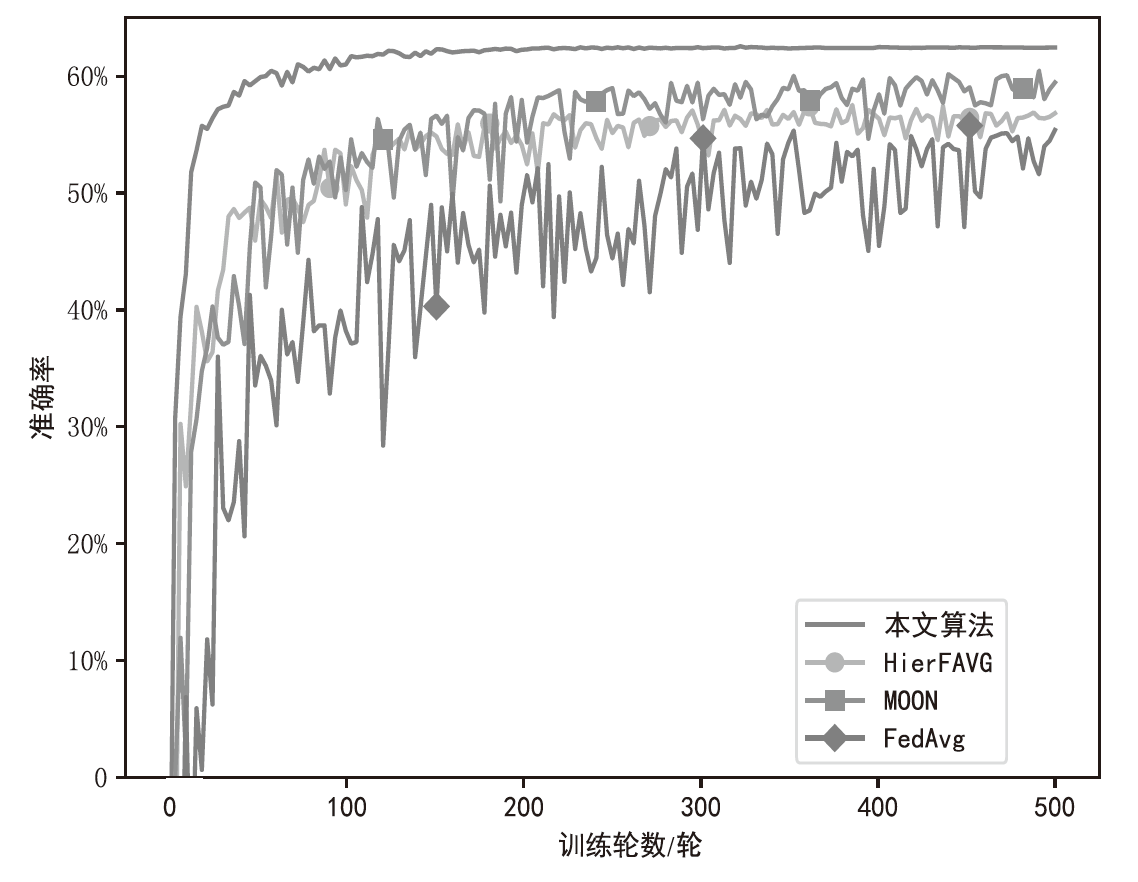

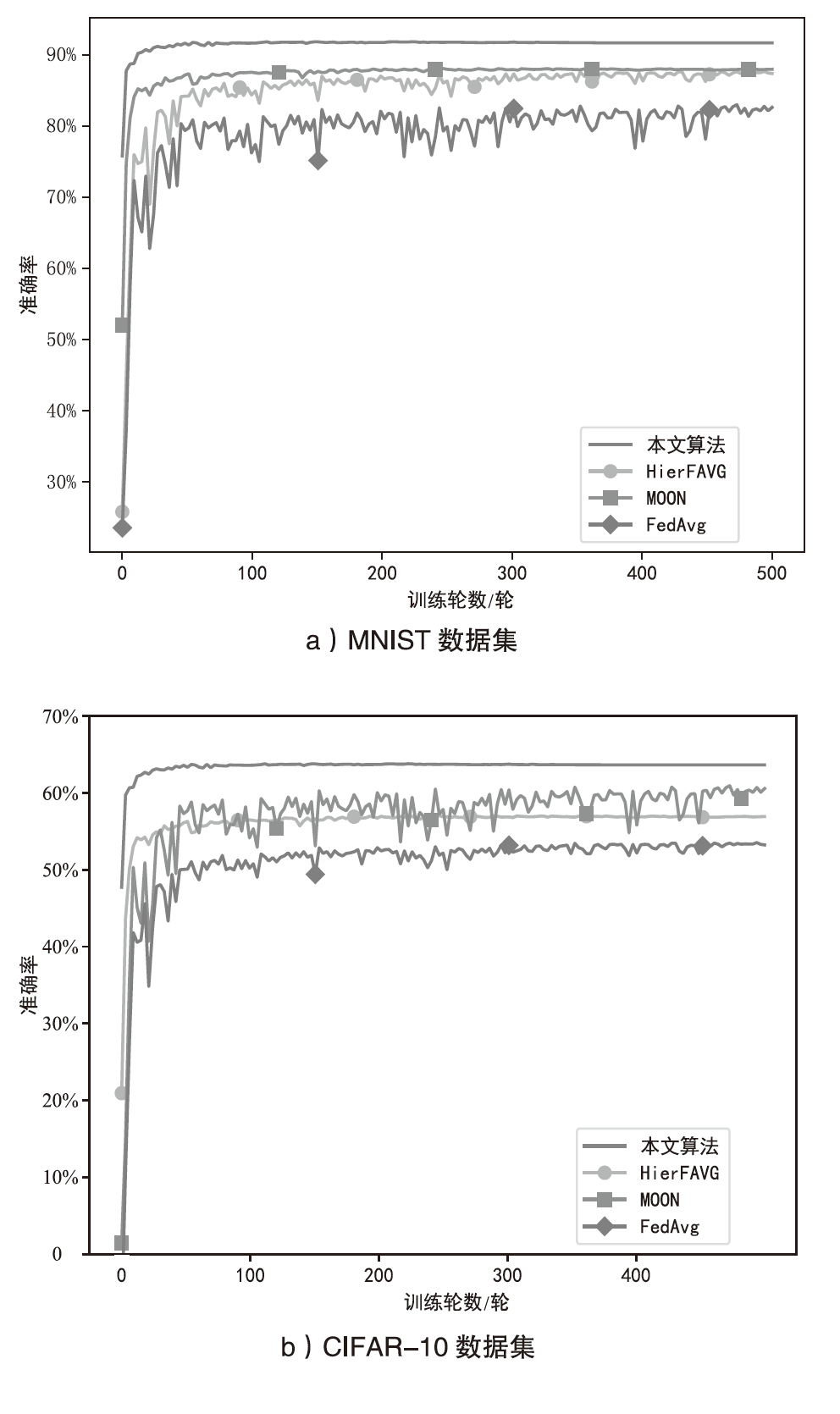

| Scenario | Method | MNIST (CNN model) accuracy | MNIST (CNN model) loss | CIFAR-10 (CNN model) accuracy | CIFAR-10 (CNN model) loss |
|---|---|---|---|---|---|
| Scenario 1 | Proposed algorithm | 92.22% | 0.2757 | 63.02% | 0.2800 |
| Scenario 1 | HierFAVG | 89.73% | 0.2976 | 60.19% | 0.2876 |
| Scenario 1 | MOON | 90.03% | 0.2991 | 59.45% | 0.2911 |
| Scenario 1 | FedAvg | 78.41% | 0.3166 | 55.43% | 0.3601 |
| Scenario 2 | Proposed algorithm | 91.99% | 0.2762 | 58.21% | 0.3301 |
| Scenario 2 | HierFAVG | 88.49% | 0.3253 | 52.11% | 0.2991 |
| Scenario 2 | MOON | 88.01% | 0.3213 | 52.66% | 0.2989 |
| Scenario 2 | FedAvg | 81.44% | 0.3701 | 43.02% | 0.3729 |

