Netinfo Security, 2024, Vol. 24, Issue (2): 262-271. doi: 10.3969/j.issn.1671-1122.2024.02.009

Federated Learning Backdoor Defense Method Based on Trigger Inversion

LIN Yihang, ZHOU Pengyuan, WU Zhiqian, LIAO Yong

- School of Cyber Science and Technology, University of Science and Technology of China, Hefei 230031, China

Received: 2023-10-23; Online: 2024-02-10; Published: 2024-03-06

Corresponding author: ZHOU Pengyuan, E-mail: pyzhou@ustc.edu.cn

About the authors:

- LIN Yihang (b. 1998), male, from Fujian, master's student; research interest: federated learning.
- ZHOU Pengyuan (b. 1989), male, from Tianjin, associate research fellow, Ph.D., CCF member; research interests: trustworthy artificial intelligence, the metaverse, and ubiquitous computing.
- WU Zhiqian (b. 1997), male, from Zhejiang, master's student; research interest: knowledge graphs.
- LIAO Yong (b. 1980), male, from Hunan, professor, Ph.D.; research interests: big data processing and analysis and its applications in cyber security.

Funding: National Key Research and Development Program of China (2021YFC3300500)

Abstract:

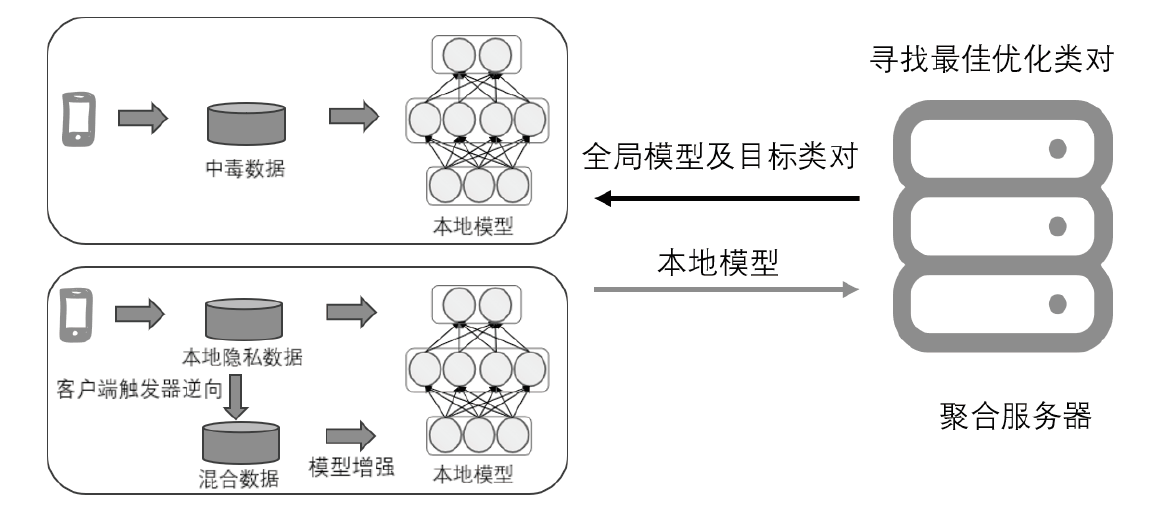

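As an emerging distributed machine learning paradigm, federated learning lets multiple clients collaboratively train a model without uploading their raw data, thereby protecting user privacy. However, because the server cannot inspect the clients' local datasets, a malicious client can embed a backdoor into the global model through data poisoning. Most traditional federated learning backdoor defenses rely on inspecting the submitted models and overlook the distributed nature of federated learning itself. This paper therefore proposes a federated learning backdoor defense method based on trigger inversion, in which the aggregation server and the distributed clients cooperate: trigger inversion is used to generate additional training data that makes the clients' local models more robust, which in turn defends against backdoors. Experiments on the MNIST, Fashion-MNIST, and CIFAR-10 datasets show that the proposed method can effectively defend against backdoor attacks.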
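The abstract only outlines the defense, so the following is a minimal sketch of the general trigger-inversion idea, not the paper's algorithm. It swaps in a Neural Cleanse-style objective: optimize a mask `m` and pattern `p` so that stamped inputs `(1 - m) * x + m * p` are classified as a target label, with an L1 penalty on `m`. It then shows one plausible way to "generate additional data": stamping the recovered trigger onto clean samples while keeping their true labels. Everything here is an assumption for illustration: the toy linear softmax model with hand-derived gradients, the hyperparameters `lam`, `lr`, `steps`, and the helper `augment_with_trigger`. The server-client cooperation described in the abstract is not modeled.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def stamp(x, mask, pattern):
    """Blend a trigger into inputs: x' = (1 - m) * x + m * p."""
    return (1.0 - mask) * x + mask * pattern


def invert_trigger(W, b, x, target, lam=0.01, lr=0.5, steps=300):
    """Neural Cleanse-style trigger inversion for a toy linear softmax model.

    Minimises mean cross-entropy toward `target` on stamped inputs plus
    lam * ||mask||_1. Parametrising mask = sigmoid(a) and pattern = sigmoid(q)
    keeps both inside (0, 1). Gradients are derived by hand for logits = x @ W.T + b.
    """
    d = x.shape[1]
    a = np.zeros(d)  # unconstrained mask parameters
    q = np.zeros(d)  # unconstrained pattern parameters
    onehot = np.zeros(W.shape[0])
    onehot[target] = 1.0
    for _ in range(steps):
        m, p = sigmoid(a), sigmoid(q)
        probs = softmax(stamp(x, m, p) @ W.T + b)
        g_x = (probs - onehot) @ W / len(x)        # dLoss/dx' per sample, batch-averaged
        g_m = (g_x * (p - x)).sum(axis=0) + lam    # through x' = (1-m)x + mp, plus L1 term
        g_p = (g_x * m).sum(axis=0)
        a -= lr * g_m * m * (1.0 - m)              # chain rule through the sigmoids
        q -= lr * g_p * p * (1.0 - p)
    return sigmoid(a), sigmoid(q)


def augment_with_trigger(x, y, mask, pattern):
    """Hypothetical hardening data: trigger-stamped copies that keep their TRUE labels."""
    return np.concatenate([x, stamp(x, mask, pattern)]), np.concatenate([y, y])


# Toy setup (all hypothetical): feature 0 acts as a planted backdoor for class 0.
rng = np.random.default_rng(0)
d, k, target = 16, 3, 0
W = np.zeros((k, d))
W[0, 0] = 10.0
W[1, 1:8] = 1.0
W[2, 8:] = 1.0
b = np.zeros(k)
x = rng.uniform(0.0, 1.0, size=(64, d))
x[:, 0] = 0.0                       # clean inputs never activate the backdoor feature
y = np.argmax(x @ W.T + b, axis=1)  # clean labels are 1 or 2

mask, pattern = invert_trigger(W, b, x, target)
asr = np.mean(np.argmax(stamp(x, mask, pattern) @ W.T + b, axis=1) == target)
x_aug, y_aug = augment_with_trigger(x, y, mask, pattern)
print(f"inverted-trigger success rate: {asr:.2f}, mask[0] = {mask[0]:.2f}")
```

Deriving the gradients by hand keeps the sketch dependent only on NumPy; a realistic version would run the same objective through an autodiff framework on a client's actual network, and training on `x_aug, y_aug` is only one hypothetical way the extra data could be used.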


Cite this article

LIN Yihang, ZHOU Pengyuan, WU Zhiqian, LIAO Yong. Federated Learning Backdoor Defense Method Based on Trigger Inversion[J]. Netinfo Security, 2024, 24(2): 262-271.

Table 2

Results of different defense algorithms against a single-shot backdoor attack

| Method | MNIST main-task accuracy | MNIST attack success rate | Fashion-MNIST main-task accuracy | Fashion-MNIST attack success rate | CIFAR-10 main-task accuracy | CIFAR-10 attack success rate |
|---|---|---|---|---|---|---|
| No defense | 94.03% | 99.68% | 77.93% | 99.8% | 74.06% | 88.41% |
| DP | 94.08% | 100% | 77.79% | 99.65% | 71.18% | 89.20% |
| Trimmed Mean | 97.88% | 0.44% | 81.49% | 11.82% | 75.78% | 15.59% |
| RFA | 97.60% | 0.43% | 79.72% | 7.83% | 78.84% | 7.83% |
| CP | 62.38% | 35.33% | 62.35% | 29.08% | 64.66% | 74.20% |
| Flame | 97.50% | 0.43% | 76.92% | 15.93% | 79.26% | 5.53% |
| Proposed method | 97.24% | 0.16% | 79.84% | 14.64% | 79.84% | 8.95% |
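Tables 2 and 3 report two metrics per dataset, but the source does not define them in this section. The sketch below assumes the common convention: main-task accuracy is the fraction of clean test samples classified correctly, and attack success rate is the fraction of triggered test samples, excluding those whose true label already equals the attacker's target, that the model assigns to the target label. `toy_predict`, `toy_trigger`, and the toy data are hypothetical placeholders.

```python
from typing import Callable, Sequence, Tuple

Sample = Tuple[int, int]  # toy input type: (class_hint, trigger_flag)


def main_task_accuracy(predict: Callable[[Sample], int],
                       xs: Sequence[Sample], ys: Sequence[int]) -> float:
    """Fraction of clean test samples classified correctly."""
    correct = sum(1 for x, y in zip(xs, ys) if predict(x) == y)
    return correct / len(xs)


def attack_success_rate(predict: Callable[[Sample], int],
                        xs: Sequence[Sample], ys: Sequence[int],
                        apply_trigger: Callable[[Sample], Sample],
                        target: int) -> float:
    """Fraction of triggered non-target samples classified as the target label."""
    # Samples already labelled `target` are excluded (common convention, assumed here).
    candidates = [x for x, y in zip(xs, ys) if y != target]
    hits = sum(1 for x in candidates if predict(apply_trigger(x)) == target)
    return hits / len(candidates)


# Hypothetical backdoored classifier: outputs class 0 whenever the trigger flag
# is set, otherwise returns the class hint.
def toy_predict(x: Sample) -> int:
    return 0 if x[1] == 1 else x[0]


def toy_trigger(x: Sample) -> Sample:
    return (x[0], 1)


xs = [(0, 0), (1, 0), (2, 0), (1, 0)]
ys = [0, 1, 2, 2]  # the last sample is misclassified on the clean task
mta = main_task_accuracy(toy_predict, xs, ys)                          # 3 of 4 correct
asr = attack_success_rate(toy_predict, xs, ys, toy_trigger, target=0)  # 3 of 3 flipped
print(f"main-task accuracy = {mta:.2%}, attack success rate = {asr:.2%}")
```

A good defense keeps the first number close to the "No defense" row while driving the second toward zero.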

Table 3

Results of different defense algorithms against continuous backdoor attacks

| Method | MNIST main-task accuracy | MNIST attack success rate | Fashion-MNIST main-task accuracy | Fashion-MNIST attack success rate | CIFAR-10 main-task accuracy | CIFAR-10 attack success rate |
|---|---|---|---|---|---|---|
| No defense | 96.20% | 99.82% | 82.37% | 99.89% | 77.96% | 82.13% |
| DP | 95.32% | 86.52% | 79.57% | 99.70% | 78.13% | 81.21% |
| Trimmed Mean | 98.74% | 99.89% | 80.37% | 99.75% | 62.62% | 83.67% |
| RFA | 97.97% | 2.24% | 82.26% | 22.93% | 76.92% | 63.10% |
| CP | 95.73% | 58.08% | 67.77% | 25.43% | 62.51% | 63.97% |
| Flame | 98.08% | 0.31% | 77.23% | 17.81% | 77.44% | 81.60% |
| Proposed method | 96.67% | 1.53% | 78.88% | 15.21% | 70.54% | 22.03% |
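Trimmed Mean is one of the robust-aggregation baselines in Tables 2 and 3. The source does not say which variant or trimming ratio was used; the sketch below assumes the usual coordinate-wise form, in which the server sorts the n client values for each parameter, discards the k = floor(beta * n) largest and smallest, and averages the rest. The ratio `beta` and the toy updates are hypothetical.

```python
import numpy as np


def trimmed_mean(updates, beta=0.1):
    """Coordinate-wise trimmed mean of client updates.

    updates: array-like of shape (n_clients, n_params).
    beta: fraction of clients trimmed from EACH end of every coordinate
          (hypothetical default; the source does not state its setting).
    """
    u = np.asarray(updates, dtype=float)
    n = u.shape[0]
    k = int(np.floor(beta * n))  # values discarded at each end
    if 2 * k >= n:
        raise ValueError("beta too large: no values left to average")
    s = np.sort(u, axis=0)       # sort each parameter independently across clients
    return s[k:n - k].mean(axis=0)


# Toy round: 9 benign clients send 1.0 for every parameter, 1 attacker sends 100.0.
updates = np.vstack([np.ones((9, 3)), np.full((1, 3), 100.0)])
print("plain mean:  ", updates.mean(axis=0))      # pulled towards the attacker
print("trimmed mean:", trimmed_mean(updates, beta=0.1))
```

With one outlier and k = 1, the attacker's value is discarded for every coordinate and the aggregate stays at 1.0, whereas the plain mean is pulled to 10.9.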

