Netinfo Security, 2026, Vol. 26, Issue (1): 49-58. doi: 10.3969/j.issn.1671-1122.2026.01.004

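Research on a Federated Privacy Enhancement Method against GAN Attacks

SHI Yinsheng, BAO Yang, PANG Jingjing

- Institute of Systems Engineering, Academy of Military Sciences, People’s Liberation Army of China, Beijing 100010, China

Received: 2025-11-07; Online: 2026-01-10; Published: 2026-02-13

Corresponding author: SHI Yinsheng, shiyinshengjms@163.com

About the authors: SHI Yinsheng (1983–), male, born in Henan, assistant research fellow, M.S.; research interest: cybersecurity. BAO Yang (1978–), male, born in Liaoning, research fellow, M.S.; research interest: cybersecurity. PANG Jingjing (1992–), female, born in Henan, M.S.; research interest: science and technology information and intelligence.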


Abstract:

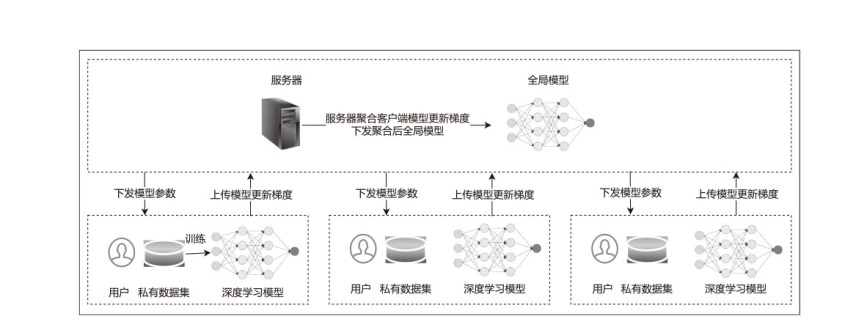

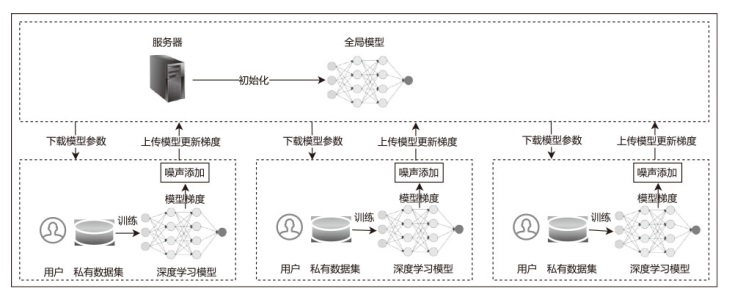

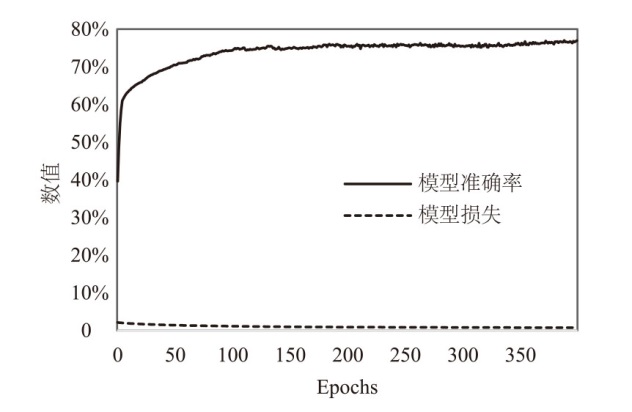

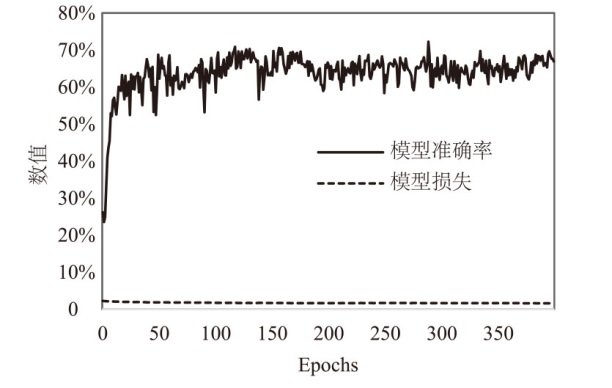

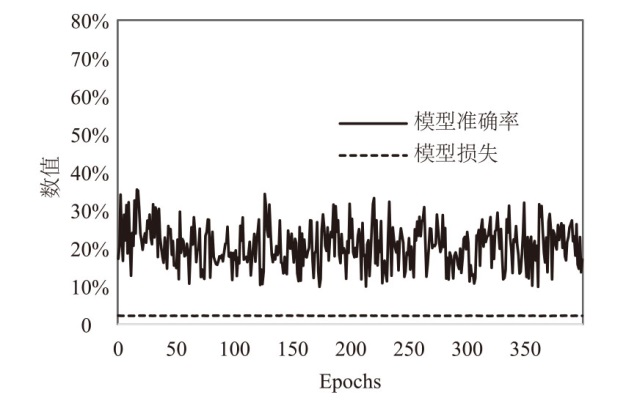

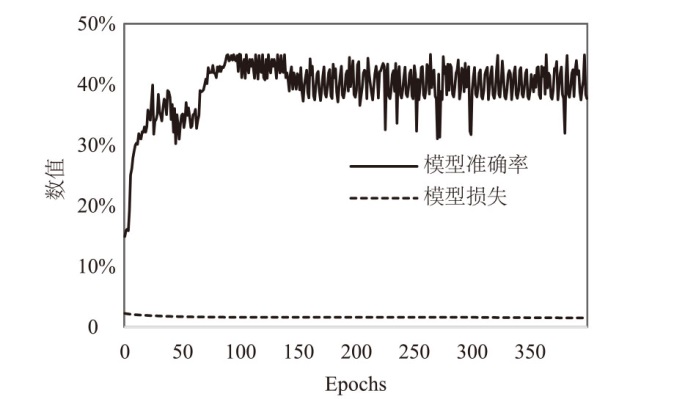

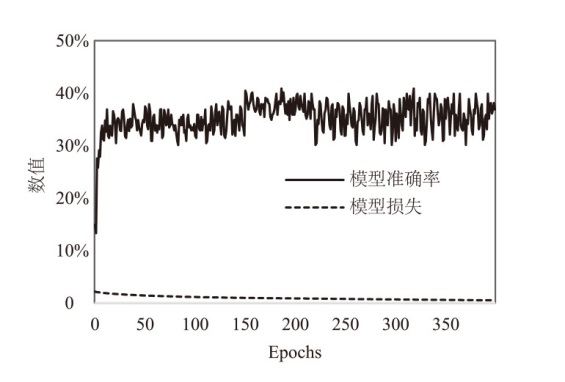

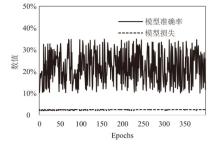

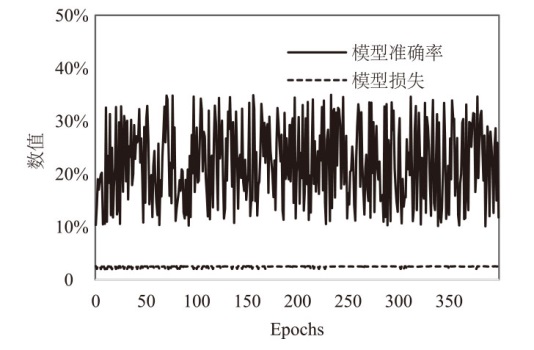

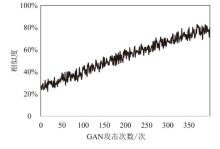

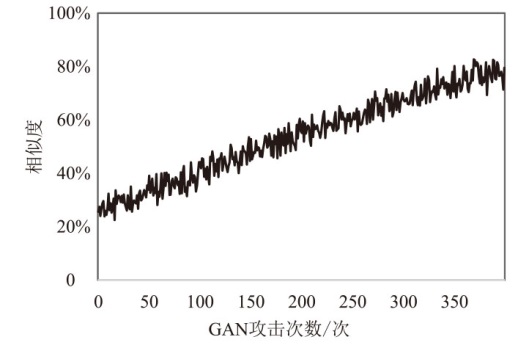

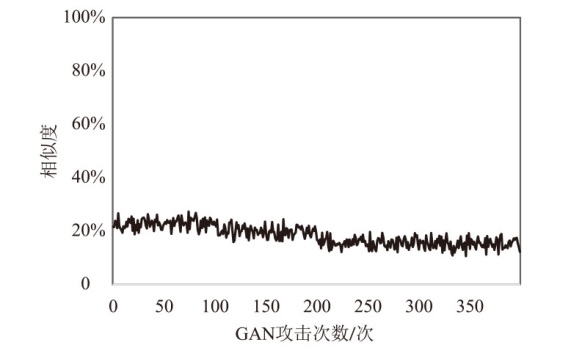

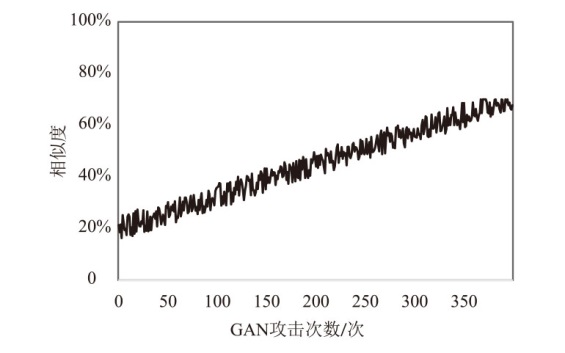

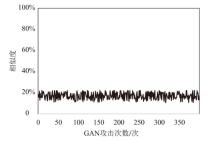

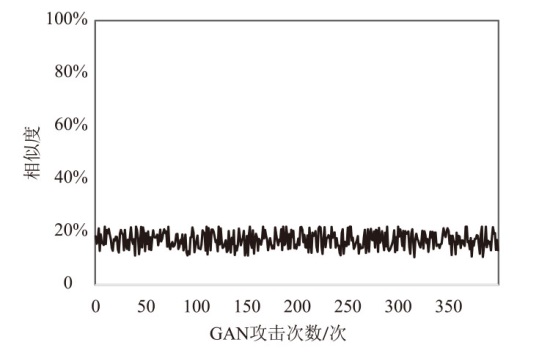

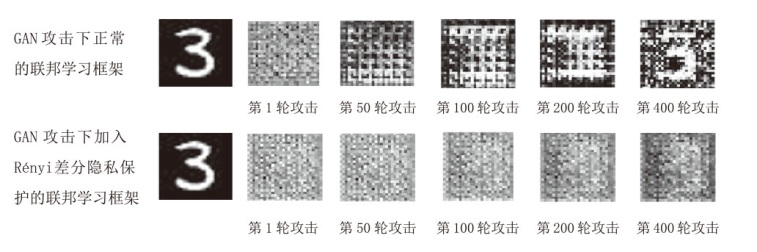

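Federated learning avoids centralized data storage through distributed training; however, malicious clients can still use generative adversarial network (GAN) attacks to steal private data. Traditional defenses such as differential privacy and encryption either make it hard to balance model performance against privacy or incur high computational cost. Targeting the GAN attack risk that federated learning faces in image recognition tasks, this paper proposes a privacy enhancement method based on Rényi differential privacy (RDP) to improve the data privacy of the model. The sequential composition of RDP lowers the growth rate of the privacy budget over multiple training rounds from linear, as in traditional differential privacy, to sublinear, which effectively reduces the amount of noise that must be added. Exploiting this tight noise composition, the proposed method performs differentially private computation when each client updates its gradient parameters, using gradient clipping based on balanced weights together with optimized Gaussian noise addition. This reduces the risk of privacy leakage while preserving model utility. Experiments show that, at an acceptable cost in global model accuracy, the method protects local data, strengthens the model's privacy protection, and thereby effectively resists GAN attacks and safeguards the privacy of image data.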
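The abstract's central quantitative claim is that RDP composition makes the privacy budget grow more slowly across training rounds than basic differential-privacy composition, which adds per-round budgets linearly. The Python sketch below illustrates that effect under stated assumptions; it is not the authors' method. It assumes plain L2 clipping of a client update to a norm C (the paper's balanced-weight clipping is not described here), Gaussian noise with standard deviation z·C, and add/remove-one adjacency so the L2 sensitivity equals C. Under those assumptions each round is (α, α/(2z²))-RDP, T rounds compose to Tα/(2z²), and an (ε, δ) guarantee follows from ε = Tα/(2z²) + log(1/δ)/(α − 1), minimized over α. Subsampling amplification, which practical DP-SGD accountants rely on, is deliberately omitted. The function names `clip_and_noise`, `gaussian_rdp`, `rdp_to_dp`, `linear_composition` and the order grid `ORDERS` are hypothetical.

```python
import math
import random


# Hypothetical helper: clip a client's update to L2 norm <= clip_norm (C),
# then add i.i.d. Gaussian noise with std = noise_multiplier * C per coordinate.
def clip_and_noise(update, clip_norm, noise_multiplier, rng):
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = [u * scale for u in update]
    sigma = noise_multiplier * clip_norm
    return [c + rng.gauss(0.0, sigma) for c in clipped]


# RDP of `steps` composed Gaussian mechanisms whose noise std is
# noise_multiplier (z) times the L2 sensitivity: eps(alpha) = steps * alpha / (2 z^2).
def gaussian_rdp(alpha, noise_multiplier, steps):
    return steps * alpha / (2.0 * noise_multiplier ** 2)


# Convert composed RDP to (eps, delta)-DP using
# eps = eps_RDP(alpha) + log(1/delta) / (alpha - 1), minimized over a grid of orders.
def rdp_to_dp(noise_multiplier, steps, delta, orders):
    return min(
        gaussian_rdp(a, noise_multiplier, steps) + math.log(1.0 / delta) / (a - 1.0)
        for a in orders
    )


# Baseline: make each round (eps_1, delta/steps)-DP, then apply basic
# composition, which sums the epsilons linearly (and the deltas to delta).
def linear_composition(noise_multiplier, steps, delta, orders):
    per_round = rdp_to_dp(noise_multiplier, 1, delta / steps, orders)
    return steps * per_round


# Orders alpha > 1: 1.1, 1.2, ..., 10.9, then 12, 13, ..., 256.
ORDERS = [1.0 + k / 10.0 for k in range(1, 100)] + list(range(12, 257))

if __name__ == "__main__":
    rng = random.Random(0)
    print(clip_and_noise([3.0, 4.0], clip_norm=1.0, noise_multiplier=5.0, rng=rng))
    for rounds in (10, 100, 1000):
        rdp_eps = rdp_to_dp(5.0, rounds, 1e-5, ORDERS)
        lin_eps = linear_composition(5.0, rounds, 1e-5, ORDERS)
        print(f"T={rounds}: RDP eps={rdp_eps:.1f}, basic-composition eps={lin_eps:.1f}")
```

Minimizing over α in closed form gives ε = T/(2z²) + √(2T·log(1/δ))/z, so while T/(2z²) stays small relative to log(1/δ) the RDP bound grows roughly like √T, whereas the basic-composition baseline multiplies a per-round ε by T. With z = 5 and δ = 10⁻⁵, the printed values show the gap between the two bounds widening as T grows from 10 to 1000.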


Cite this article:

SHI Yinsheng, BAO Yang, PANG Jingjing. Research on a Federated Privacy Enhancement Method against GAN Attacks[J]. Netinfo Security, 2026, 26(1): 49-58.

