Netinfo Security, 2024, Vol. 24, Issue (10): 1562-1569. doi: 10.3969/j.issn.1671-1122.2024.10.010

A Data-Free Personalized Federated Learning Algorithm Based on Knowledge Distillation

CHEN Jing1,2, ZHANG Jian1,2,3

1. College of Computer Science, Nankai University, Tianjin 300350, China

2. Tianjin Key Laboratory of Network and Data Security Technology, Tianjin 300350, China

3. College of Cyber Science, Nankai University, Tianjin 300350, China

Received: 2024-05-03 Online: 2024-10-10 Published: 2024-09-27

Corresponding author: ZHANG Jian, zhang.jian@nankai.edu.cn

About the authors: CHEN Jing (born 2002), female, from Anhui, master's student; main research interest: data security. ZHANG Jian (born 1968), male, from Tianjin, professor, Ph.D., CCF member; main research interests: network security, data security, cloud security, and system security.

Funding: National Key Research and Development Program of China (2022YFB3103202); Tianjin Key Research and Development Program (20YFZCGX00680); Tianjin Major Science and Technology Project on New Generation Artificial Intelligence (19ZXZNGX00090)

Abstract:

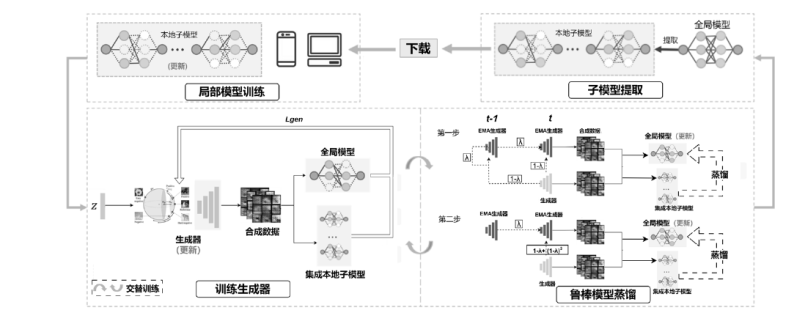

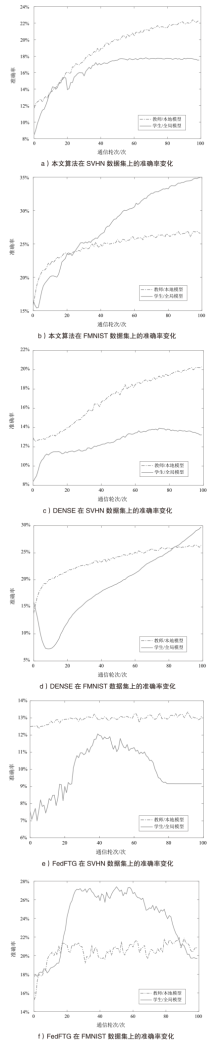

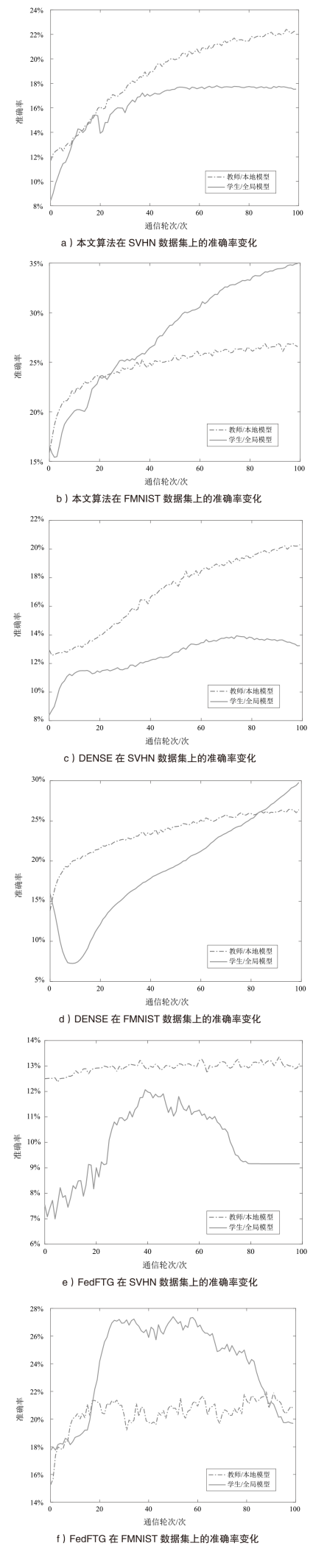

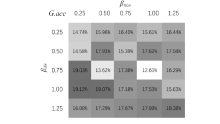

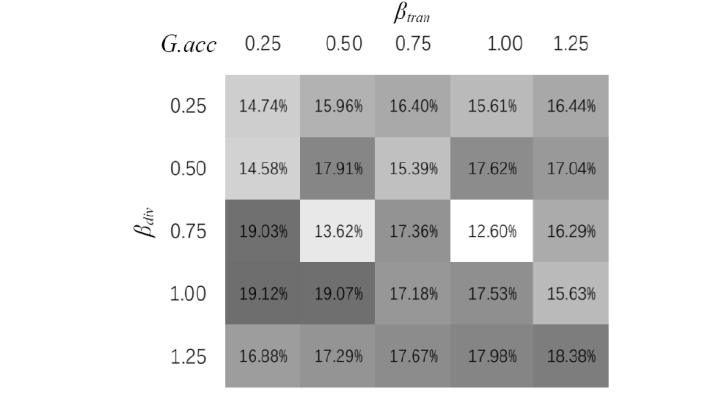

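Federated learning algorithms commonly face large differences among clients, and this heterogeneity degrades the performance of the global model. This paper uses knowledge distillation to mitigate the problem. To further remove the dependence on public data and improve model performance, the proposed DFP-KD algorithm synthesizes training data with a data-free method and trains a robust federated learning global model through data-free knowledge distillation. It uses ReACGAN as the generator and adopts a stepwise EMA fast-update strategy, which speeds up model updates while avoiding catastrophic forgetting in the global model. Comparison experiments, ablation experiments, and parameter-sensitivity experiments show that DFP-KD outperforms classic data-free knowledge distillation algorithms in accuracy, stability, and update speed.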
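The two generic building blocks named in the abstract, a distillation loss and an exponential moving average (EMA) update, can be illustrated with a minimal sketch. This is not the DFP-KD implementation: the abstract does not specify the stepwise EMA schedule or the ReACGAN generator, so neither is reproduced here. The function names `softmax`, `distillation_loss`, and `ema_update`, the temperature of 2.0, and the decay of 0.9 are all assumptions chosen for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-softened softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened outputs (generic KD loss, assumed form)."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def ema_update(ema_params, new_params, decay=0.9):
    """Plain EMA: blend new parameters into a running average.

    A higher decay keeps more of the old parameters, which is the usual
    way EMA is used to limit forgetting; a lower decay updates faster.
    """
    return [decay * e + (1.0 - decay) * n for e, n in zip(ema_params, new_params)]


if __name__ == "__main__":
    # The student matches the teacher on the first call and differs on the second.
    print(distillation_loss([2.0, 0.5, -1.0], [2.0, 0.5, -1.0]))
    print(distillation_loss([2.0, 0.5, -1.0], [0.0, 0.0, 0.0]))
    print(ema_update([1.0, 2.0], [3.0, 4.0], decay=0.5))
```

In a data-free setting, the logits would come from evaluating teacher and student models on generator-synthesized samples rather than on real or public data; the decay value trades update speed against stability.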


Cite this article

CHEN Jing, ZHANG Jian. A Data-Free Personalized Federated Learning Algorithm Based on Knowledge Distillation[J]. Netinfo Security, 2024, 24(10): 1562-1569.


