Netinfo Security, 2024, Vol. 24, Issue (8): 1252-1264. doi: 10.3969/j.issn.1671-1122.2024.08.011

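Membership Inference Attacks Method Based on Ensemble Learning

ZHAO Wei, REN Xiaoning, XUE Yinxing

School of Computer Science and Technology, University of Science and Technology of China, Hefei 230027, China

Received: 2023-11-07; Online: 2024-08-10; Published: 2024-08-22

Corresponding author: XUE Yinxing, yxxue@ustc.edu.cn

About the authors: ZHAO Wei (born 1998), male, from Anhui, master's student; main research interest: artificial intelligence security. REN Xiaoning (born 1997), male, from Shanxi, Ph.D. candidate, CCF member; main research interests: neural network security and testing. XUE Yinxing (born 1982), male, from Jiangsu, research professor, Ph.D., CCF member; main research interests: IoT security, software system security, blockchain software security, and deep learning.

Funding: National Natural Science Foundation of China (61972373); Basic Research Program of Jiangsu Province (Natural Science Foundation) (BK20201192)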

Abstract:

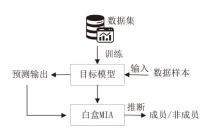

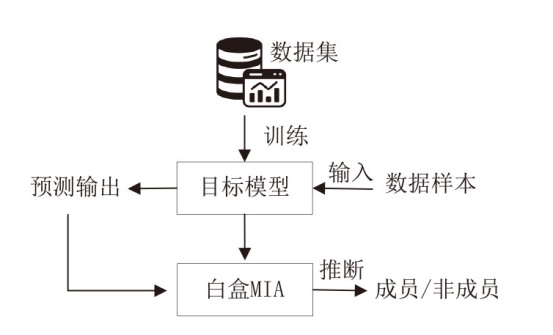

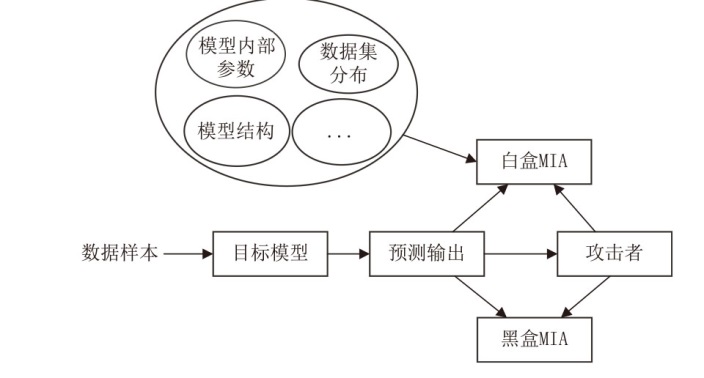

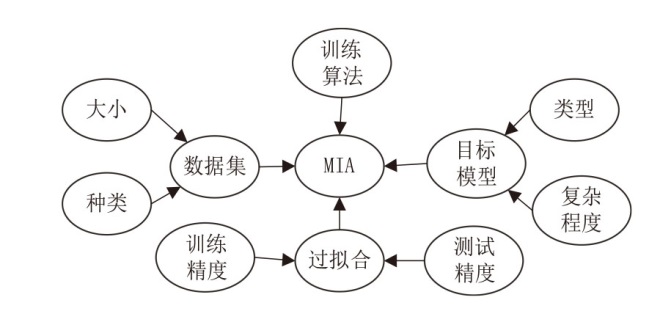

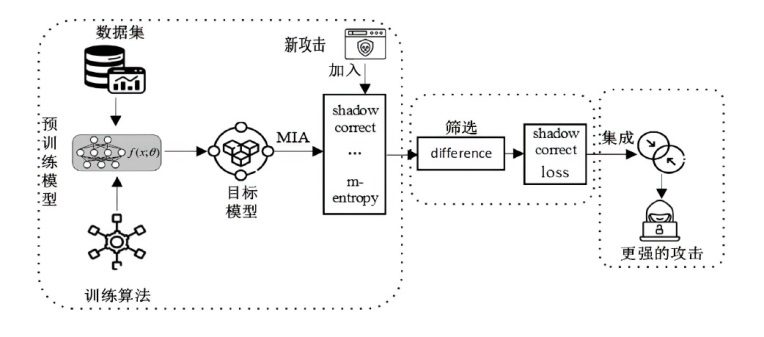

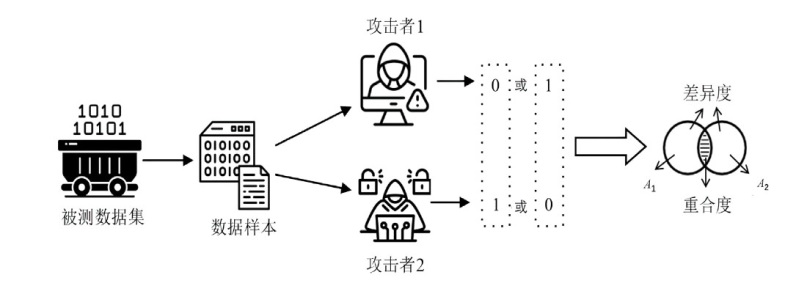

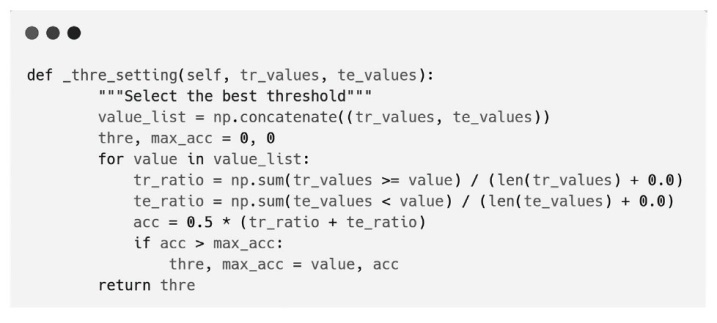

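With the rapid development and wide adoption of machine learning, the data privacy issues it raises have attracted broad attention. A membership inference attack (MIA) infers whether a given data sample belongs to a model's training set, and therefore threatens personal privacy in domains such as healthcare and finance. However, existing membership inference attacks achieve limited attack performance, and defenses such as differential privacy and knowledge distillation further reduce the threat they pose. This paper analyzes a range of black-box membership inference attacks against classification models and proposes an ensemble-learning-based membership inference attack that performs better and is harder to defend against. First, it analyzes the relationships among the target model's generalization gap, the attack success rate, and the difference degree between attacks. Next, it selects representative membership inference attacks by analyzing the difference degree between pairs of attacks. Finally, it combines and optimizes the selected attacks with ensemble techniques to strengthen the attack. Experimental results show that, compared with existing membership inference attacks, the ensemble-learning-based method achieves better attack performance and stability across multiple models and datasets. An analysis of how factors such as the dataset, the model architecture, and the generalization gap affect the attack offers a useful reference for defending against such membership inference attacks.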
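Most of the black-box attacks compared in the tables below reduce the target model's softmax output for one sample to a single membership score. The following is a minimal sketch of the standard metric-based scores, assuming a probability vector `probs` and an integer true label `label`. The function names are hypothetical, the modified-entropy formula follows the form popularized by Song and Mittal, and the authors' own implementation may differ in details such as the log floor `EPS`. The shadow attack, which trains a separate attack classifier, is not covered here.

```python
import math
from typing import List

EPS = 1e-12  # assumed floor inside log() so probabilities of exactly 0 or 1 do not raise errors


def correctness(probs: List[float], label: int) -> int:
    """1 if the top-1 class equals the true label; training members are classified correctly more often."""
    top1 = max(range(len(probs)), key=lambda i: probs[i])
    return int(top1 == label)


def confidence(probs: List[float], label: int) -> float:
    """Probability assigned to the true class; higher values suggest membership."""
    return probs[label]


def loss(probs: List[float], label: int) -> float:
    """Cross-entropy loss on the true class; lower values suggest membership."""
    return -math.log(max(probs[label], EPS))


def entropy(probs: List[float]) -> float:
    """Shannon entropy of the prediction vector (ignores the label); lower values suggest membership."""
    return -sum(p * math.log(max(p, EPS)) for p in probs)


def m_entropy(probs: List[float], label: int) -> float:
    """Label-aware modified entropy; lower values suggest membership."""
    total = -(1.0 - probs[label]) * math.log(max(probs[label], EPS))
    for i, p in enumerate(probs):
        if i != label:
            total -= p * math.log(max(1.0 - p, EPS))
    return total


if __name__ == "__main__":
    member_like = [0.90, 0.05, 0.05]     # confident, correct prediction (hypothetical)
    nonmember_like = [0.40, 0.30, 0.30]  # hesitant prediction (hypothetical)
    for name, fn in [("loss", loss), ("m-entropy", m_entropy)]:
        print(name, fn(member_like, 0), fn(nonmember_like, 0))
```

Each score is turned into a member/non-member decision by comparing it with a threshold, which is why attacks built on closely related scores (loss, confidence, m-entropy) tend to make very similar decisions.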


Cite this article


ZHAO Wei, REN Xiaoning, XUE Yinxing. Membership Inference Attacks Method Based on Ensemble Learning[J]. Netinfo Security, 2024, 24(8): 1252-1264.

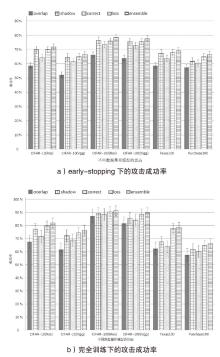

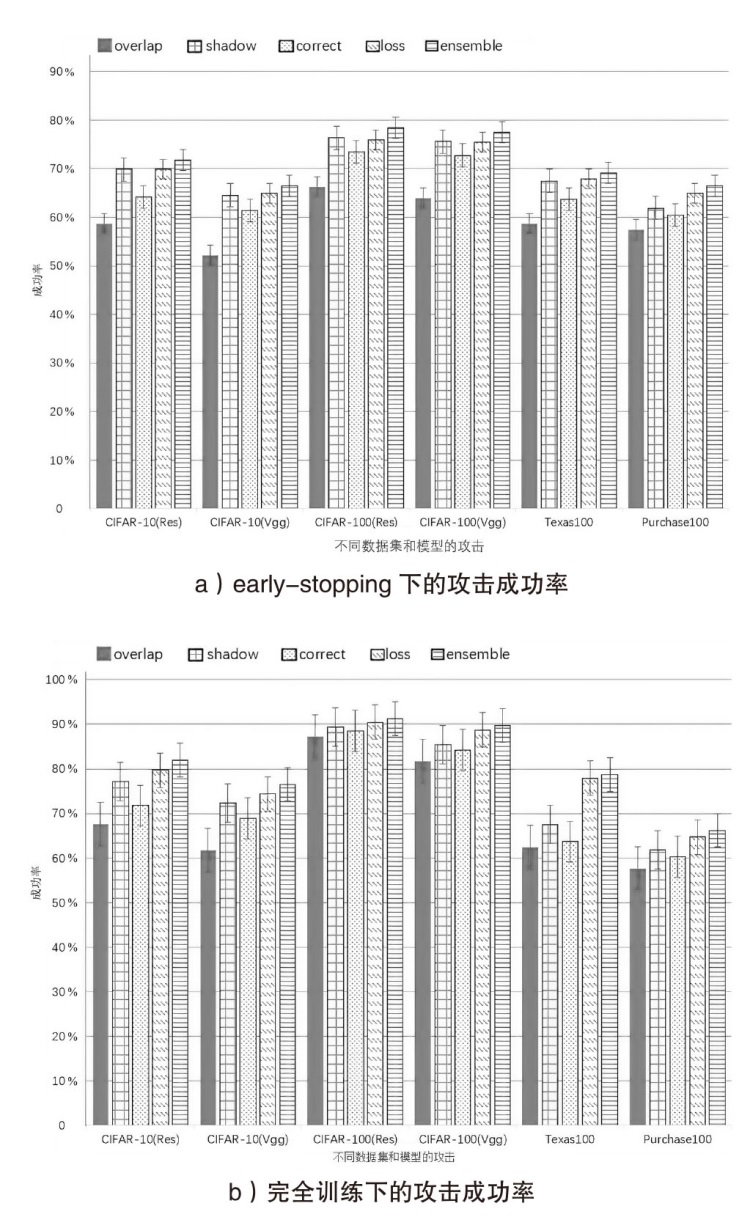

Table 3

Attack success rate of MIAs against fully trained target models

| Metric | CIFAR10 (ResNet20) | CIFAR10 (Vgg11_bn) | CIFAR100 (ResNet20) | CIFAR100 (Vgg11_bn) | Texas 100 | Purchase 100 |
|---|---|---|---|---|---|---|
| train_acc | 100% | 100% | 100% | 100% | 99.80% | 100% |
| test_acc | 72.30% | 74.20% | 34.30% | 42.50% | 50.70% | 88.70% |
| gap | 27.70% | 25.80% | 65.70% | 57.50% | 49.10% | 11.30% |
| shadow attack | 77.11% | 72.34% | 89.45% | 85.38% | 67.46% | 61.85% |
| correctness | 71.78% | 68.93% | 88.56% | 84.22% | 63.68% | 60.30% |
| m-entropy | 79.80% | 74.19% | 90.50% | 88.74% | 77.62% | 63.41% |
| entropy | 78.83% | 73.47% | 88.93% | 87.75% | 70.75% | 63.38% |
| loss | 79.72% | 74.35% | 90.44% | 88.74% | 77.92% | 64.77% |
| confidence | 79.61% | 74.42% | 90.48% | 88.57% | 77.46% | 63.35% |
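The abstract's first step relates the generalization gap to attack success. As a rough illustration using only the numbers in Table 3, the sketch below recomputes `gap` as `train_acc - test_acc` and takes the Pearson correlation between the gap and the loss attack's success rate across the six configurations. The variable and function names are hypothetical, and this is not necessarily the analysis the authors ran. On these values the coefficient comes out strongly positive (roughly 0.92), although six points that mix two architectures and four datasets are far too few to support a statistical claim.

```python
import math
from typing import List, Sequence

# Values copied from Table 3 (fully trained target models), in column order:
# CIFAR10 (ResNet20), CIFAR10 (Vgg11_bn), CIFAR100 (ResNet20),
# CIFAR100 (Vgg11_bn), Texas 100, Purchase 100. All numbers are percentages.
TRAIN_ACC = [100.0, 100.0, 100.0, 100.0, 99.80, 100.0]
TEST_ACC = [72.30, 74.20, 34.30, 42.50, 50.70, 88.70]
LOSS_ASR = [79.72, 74.35, 90.44, 88.74, 77.92, 64.77]


def generalization_gap(train: Sequence[float], test: Sequence[float]) -> List[float]:
    """Element-wise gap = train_acc - test_acc."""
    return [tr - te for tr, te in zip(train, test)]


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


gaps = generalization_gap(TRAIN_ACC, TEST_ACC)
r = pearson(gaps, LOSS_ASR)
print(f"gaps = {[round(g, 2) for g in gaps]}, Pearson r = {r:.3f}")
```

The recomputed gaps also serve as a quick consistency check on the `gap` row of the table.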

Table 4

Attack success rate of MIAs against target models trained with early stopping

| Metric | CIFAR10 (ResNet20) | CIFAR10 (Vgg11_bn) | CIFAR100 (ResNet20) | CIFAR100 (Vgg11_bn) | Texas 100 | Purchase 100 |
|---|---|---|---|---|---|---|
| epochs | 10 | 20 | 20 | 15 | 15 | 10 |
| train_acc | 81.30% | 83.20% | 64.70% | 69.60% | 67.40% | 84.50% |
| test_acc | 59.70% | 66.10% | 24.80% | 32.70% | 32.40% | 72.90% |
| gap | 21.60% | 17.10% | 39.90% | 36.90% | 35.00% | 11.60% |
| shadow attack | 69.87% | 64.55% | 76.37% | 75.63% | 67.46% | 61.88% |
| correctness | 64.23% | 61.37% | 73.52% | 72.78% | 63.68% | 60.41% |
| loss | 69.96% | 65.02% | 75.98% | 75.54% | 67.92% | 65.02% |
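Metric-based attacks such as `loss` need a decision threshold. One common recipe, sketched here as an assumption rather than as the paper's exact protocol, fits the threshold on a shadow model whose members are known and then reuses it on the target model. Attack success is measured as balanced accuracy (the mean of the true-positive and true-negative rates), which equals plain accuracy when the member and non-member sets are the same size. All scores below are hypothetical loss values, and the functions assume lower-is-member scores; for `confidence`, negate the score first.

```python
from typing import Sequence


def balanced_accuracy(member: Sequence[float], nonmember: Sequence[float], t: float) -> float:
    """Mean of TPR and TNR when 'member' is predicted for scores <= t
    (for lower-is-member scores such as loss or m-entropy)."""
    tpr = sum(s <= t for s in member) / len(member)
    tnr = sum(s > t for s in nonmember) / len(nonmember)
    return (tpr + tnr) / 2


def best_threshold(member: Sequence[float], nonmember: Sequence[float]) -> float:
    """Try every observed score as a cut-off and keep the one with the highest balanced accuracy."""
    candidates = sorted(set(member) | set(nonmember))
    return max(candidates, key=lambda t: balanced_accuracy(member, nonmember, t))


# Hypothetical per-sample losses on a shadow model whose membership is known.
shadow_in = [0.01, 0.02, 0.05, 0.30]
shadow_out = [0.40, 0.90, 1.50, 0.03]
t = best_threshold(shadow_in, shadow_out)

# The threshold is then reused on the target model's scores (also hypothetical).
target_in = [0.02, 0.10, 0.25, 0.60]
target_out = [0.20, 0.70, 1.10, 2.00]
print(t, balanced_accuracy(target_in, target_out, t))
```

Early stopping narrows the gap between member and non-member loss distributions, so any single threshold separates them less cleanly, which is consistent with the lower success rates in Table 4 than in Table 3.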

Table 5

Difference degree between attacks

| Attack pair | CIFAR10 (ResNet20), full training | CIFAR10 (ResNet20), early stopping | CIFAR10 (Vgg11_bn), full training | CIFAR10 (Vgg11_bn), early stopping | CIFAR100 (ResNet20), full training | CIFAR100 (ResNet20), early stopping |
|---|---|---|---|---|---|---|
| (C, S) | 8.99% | 8.64% | 7.19% | 5.86% | 2.33% | 6.37% |
| (C, L) | 11.92% | 8.81% | 8.94% | 6.15% | 3.46% | 5.58% |
| (S, L) | 5.97% | 0.09% | 5.77% | 2.75% | 2.05% | 3.53% |
| (L, P) | 0.07% | 0.06% | 0.05% | 0.05% | 0.03% | 0.04% |

| Attack pair | CIFAR100 (Vgg11_bn), full training | CIFAR100 (Vgg11_bn), early stopping | Texas100, full training | Texas100, early stopping | Purchase100, full training | Purchase100, early stopping |
|---|---|---|---|---|---|---|
| (C, S) | 2.62% | 5.87% | 4.96% | 5.96% | 3.65% | 3.67% |
| (C, L) | 6.06% | 5.98% | 15.62% | 6.32% | 6.85% | 6.83% |
| (S, L) | 4.78% | 3.47% | 11.88% | 2.44% | 6.14% | 5.52% |
| (L, P) | 0.01% | 0.01% | 0.03% | 0.03% | 0.02% | 0.01% |
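Table 5 reports a difference degree for each attack pair, and the abstract uses this quantity to screen out redundant attacks. This excerpt does not define it, so the sketch below assumes it is the fraction of target samples on which two attacks' 0/1 membership decisions disagree, and adds a hypothetical greedy filter `select_diverse` with an assumed cut-off `min_diff`. Under this reading, near-zero values such as the (L, P) row mean the two attacks are almost interchangeable, so keeping both adds little to an ensemble. The attack labels and decisions are toy data.

```python
from itertools import combinations
from typing import Dict, List, Tuple


def difference_degree(pred_a: List[int], pred_b: List[int]) -> float:
    """Fraction of samples on which two attacks' 0/1 membership predictions disagree (assumed definition)."""
    if len(pred_a) != len(pred_b):
        raise ValueError("prediction lists must have the same length")
    return sum(a != b for a, b in zip(pred_a, pred_b)) / len(pred_a)


def pairwise_difference(preds: Dict[str, List[int]]) -> Dict[Tuple[str, str], float]:
    """Difference degree for every unordered pair of attacks."""
    return {(a, b): difference_degree(preds[a], preds[b]) for a, b in combinations(preds, 2)}


def select_diverse(preds: Dict[str, List[int]], min_diff: float = 0.01) -> List[str]:
    """Hypothetical greedy filter: keep an attack only if it differs from every
    already-kept attack by at least min_diff."""
    kept: List[str] = []
    for name in preds:
        if all(difference_degree(preds[name], preds[k]) >= min_diff for k in kept):
            kept.append(name)
    return kept


# Hypothetical decisions of four attacks on five target samples.
preds = {
    "C": [1, 1, 0, 0, 1],
    "S": [1, 0, 0, 1, 1],
    "L": [1, 1, 0, 1, 1],
    "P": [1, 1, 0, 1, 1],  # identical to L, so it should be dropped
}
print(pairwise_difference(preds))
print(select_diverse(preds))
```

The greedy order matters: attacks listed first are preferred, so in practice one might sort candidates by their individual success rate before filtering.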

Table 6

Recall of attacks against fully trained target models

| Attack | CIFAR10 (ResNet20) | CIFAR10 (Vgg11_bn) | CIFAR100 (ResNet20) | CIFAR100 (Vgg11_bn) | Texas 100 | Purchase 100 |
|---|---|---|---|---|---|---|
| shadow attack | 99.74% | 99.72% | 100% | 99.98% | 98.45% | 96.01% |
| correctness | 99.35% | 98.89% | 99.99% | 100% | 97.86% | 95.67% |
| loss | 99.82% | 99.84% | 99.96% | 99.98% | 99.04% | 98.89% |
| m-entropy | 99.86% | 99.84% | 99.97% | 99.98% | 99.11% | 99.02% |
| confidence | 99.85% | 99.82% | 99.99% | 99.96% | 99.13% | 98.99% |
| ensemble | 99.89% | 99.92% | 100% | 100% | 99.21% | 99.10% |
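Table 6 lists an `ensemble` row alongside the individual attacks. The specific ensemble technique is not described in this excerpt; hard majority voting is the simplest instance and is sketched below as an assumption, together with recall (the share of true members that an attack flags as members). The decisions and ground truth are hypothetical toy data, and the function names are illustrative.

```python
from typing import Dict, List


def majority_vote(preds: Dict[str, List[int]]) -> List[int]:
    """Label a sample as member (1) when strictly more than half of the attacks do;
    ties count as non-member."""
    names = list(preds)
    n_samples = len(preds[names[0]])
    return [int(2 * sum(preds[k][i] for k in names) > len(names)) for i in range(n_samples)]


def recall(pred: List[int], truth: List[int]) -> float:
    """Fraction of true members (truth == 1) that the attack flags as members."""
    hits = [p for p, t in zip(pred, truth) if t == 1]
    return sum(hits) / len(hits)


# Hypothetical 0/1 decisions of three selected attacks on five target samples.
selected = {"C": [1, 1, 0, 0, 1], "S": [1, 0, 0, 1, 1], "L": [1, 1, 0, 1, 1]}
truth = [1, 1, 0, 0, 1]  # hypothetical ground-truth membership
ensemble = majority_vote(selected)
for name, p in list(selected.items()) + [("ensemble", ensemble)]:
    print(name, recall(p, truth))
```

In this toy case the vote matches the best single attack's recall of 1.0 while the weakest attack reaches 2/3. Voting is not guaranteed to beat every individual attack; weighted voting or stacking a learned meta-classifier on the attack scores are common alternatives.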

