[1] ZARGAR S T, JOSHI J, TIPPER D. A Survey of Defense Mechanisms Against Distributed Denial of Service (DDoS) Flooding Attacks[J]. IEEE Communications Surveys & Tutorials, 2013, 15(4): 2046-2069.

[2] WU Hanqing. White Hats Talk about Web Security[M]. Beijing: Publishing House of Electronics Industry, 2013.

[3] WONG O C, TOM B. Layer 7 DDoS Attacks[EB/OL]. OWASP AppSec DC 2010. (2010-11-11)[2023-08-03]. https://owasp.org/www-pdf-archive/Layer_7_DDOS.pdf.

[4] ARI I, HONG Bo, MILLER E L, et al. Managing Flash Crowds on the Internet[C]// IEEE. 11th IEEE/ACM International Symposium on Modeling, Analysis and Simulation of Computer Telecommunications Systems. New York: IEEE, 2003: 246-249.

[5] LUO Wenhua, CHENG Jiaxing. Hybrid DDoS Attack Distributed Detection System Based on Hadoop Architecture[J]. Netinfo Security, 2021, 21(2): 61-69.

[6] PRIYA S S, SIVARAM M, YUVARAJ D, et al. Machine Learning Based DDoS Detection[C]// IEEE. 2020 International Conference on Emerging Smart Computing and Informatics (ESCI). New York: IEEE, 2020: 234-237.

[7] HIRAKAWA T, OGURA K, BISTA B B, et al. A Defense Method Against Distributed Slow HTTP DoS Attack[C]// IEEE. 2016 19th International Conference on Network-Based Information Systems (NBiS). New York: IEEE, 2016: 152-158.

[8] MURALEEDHARAN N, JANET B. Behaviour Analysis of HTTP Based Slow Denial of Service Attack[C]// IEEE. 2017 International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET). New York: IEEE, 2017: 1851-1856.

[9] CHEN Yi, ZHANG Meijing, XU Fajian. HTTP Slow DoS Attack Detection Method Based on One-Dimensional Convolutional Neural Network[J]. Journal of Computer Applications, 2020, 40(10): 2973-2979. DOI: 10.11772/j.issn.1001-9081.2020020172.

[10] SHANG Fubo. Research on Intrusion Detection Technology Based on Deep Learning[D]. Shenyang: Shenyang Jianzhu University, 2021.

[11] SHLENS J. A Tutorial on Principal Component Analysis[EB/OL]. (2014-04-03)[2023-08-03]. http://arxiv.org/pdf/1404.1100.pdf.

[12] SHI Hongbo, CHEN Yuwen, CHEN Xin. Review of SMOTE Oversampling and Its Improved Algorithms[J]. CAAI Transactions on Intelligent Systems, 2019, 14(6): 1073-1083.

| [13] |

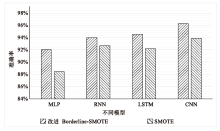

CHAWLA N V, BOWYER K W, HALL L O, et al. SMOTE: Synthetic Minority Over-Sampling Technique[J]. Journal of Artificial Intelligence Research, 2002(16): 321-357.

|

| [14] |

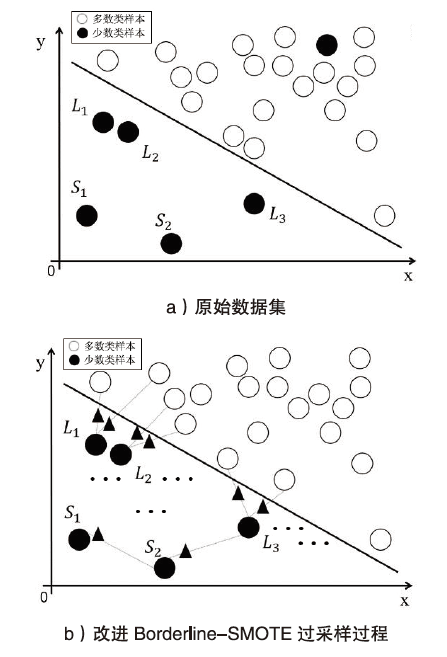

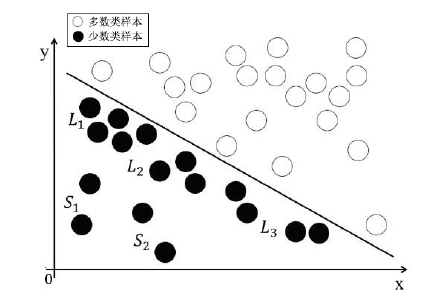

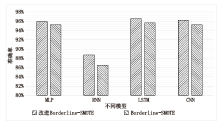

HAN Hui, WANGWenyuan, MAOBinghuan. Borderline-SMOTE: A New Over-Sampling Method in Imbalanced Data Sets Learning[C]// Springer. International Conference on Intelligent Computing. Heidelberg: Springer, 2005: 878-887.

|

[15] JIA Jing, WANG Qingsheng, CHEN Yongle, et al. DDoS Attack Detection Method Based on Attention Mechanism[J]. Computer Engineering and Design, 2021, 42(9): 2439-2445.

[16] VASWANI A, SHAZEER N, PARMAR N, et al. Attention Is All You Need[J]. Advances in Neural Information Processing Systems, 2017, 30: 6000-6010.

[17] YAN Liang, JI Shaopei, LIU Dong, et al. Network Intrusion Detection Based on GRU and Feature Embedding[J]. Journal of Applied Sciences, 2021, 39(4): 559-568.

[18] CHO K, VAN MERRIËNBOER B, GULCEHRE C, et al. Learning Phrase Representations Using RNN Encoder-Decoder for Statistical Machine Translation[EB/OL]. (2014-06-03)[2023-08-03]. https://arxiv.org/abs/1406.1078.

[19] SHARAFALDIN I, LASHKARI A H, GHORBANI A A. Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization[EB/OL]. (2018-01-01)[2023-08-03]. https://www.researchgate.net/publication/322870768_Toward_Generating_a_New_Intrusion_Detection_Dataset_and_Intrusion_Traffic_Characterization.

), LU Lufan2, SU Yaoyang2, NIE Wei2
