Netinfo Security, 2014, Vol. 14, Issue 10: 38-43. doi: 10.3969/j.issn.1671-1122.2014.10.007

Research and Implementation of Web Vulnerability Detection Technology Based on Rule Base and Web Crawler

DU Lei, XIN Yang

- Information Security Center, Beijing University of Posts and Telecommunications, Beijing 100876, China

Abstract:

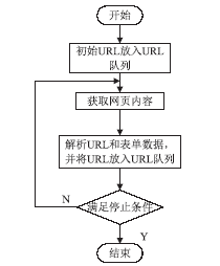

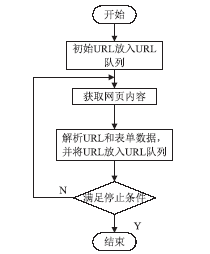

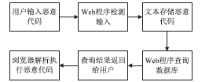

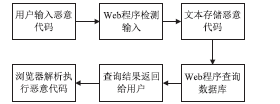

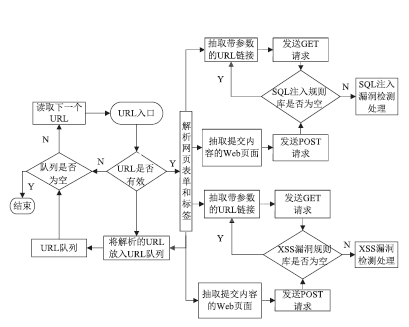

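Web applications are programs that provide services over HTTP or HTTPS, and they have become one of the mainstream forms of software development. At the same time, security vulnerabilities in Web applications, such as SQL injection and cross-site scripting (XSS), have increasingly come to light and cause substantial economic losses. To address Web site security, this paper studies the common Web vulnerabilities SQL injection and XSS and proposes a new detection method that combines a vulnerability rule base with a Web crawler. The crawler traverses pages over HTTP by following URL links. For each link it obtains, it reads the rules in the rule base one by one, rewrites the link into a form capable of exposing the vulnerability, and automatically issues both GET and POST requests against it. This repeats until every rule in the rule base has been read and applied. The crawler then continues to collect page information using regular expressions, and the same process is repeated for the newly found links, thereby detecting SQL injection and XSS vulnerabilities. The method enriches the available means of Web vulnerability detection, increases the number of pages tested, and covers both HTTP GET and HTTP POST requests. Experiments verify that the technique is feasible for security testing of Web sites and can accurately determine whether a site contains SQL injection or XSS vulnerabilities.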
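The loop described in the abstract (crawl links, apply each rule, send GET and POST, then continue crawling) can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the rule format in `RULES`, the helper names `extract_links`, `build_probes`, and `scan`, and the injectable `fetch(method, url, body)` callback are all assumptions made for the example, since the abstract does not specify them.

```python
import re
from urllib.parse import urljoin, urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical rule base: a payload plus a regex taken as evidence of a hit.
RULES = [
    {"type": "sqli", "payload": "'",
     "evidence": r"SQL syntax|ODBC|unclosed quotation mark"},
    {"type": "xss", "payload": "<script>alert(1)</script>",
     "evidence": r"<script>alert\(1\)</script>"},
]

# Regex-based link extraction, as the abstract mentions regular expressions.
HREF_RE = re.compile(r'href\s*=\s*["\']([^"\'#]+)', re.IGNORECASE)


def extract_links(base_url, html):
    """Return absolute links in html that stay on the host of base_url."""
    host = urlsplit(base_url).netloc
    links = []
    for href in HREF_RE.findall(html):
        url = urljoin(base_url, href)
        if urlsplit(url).netloc == host and url not in links:
            links.append(url)
    return links


def build_probes(url, rule):
    """Yield (param, get_url, post_url, post_body), mutating one parameter at a time."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    for i, (name, value) in enumerate(params):
        mutated = list(params)
        mutated[i] = (name, value + rule["payload"])
        encoded = urlencode(mutated)
        get_url = urlunsplit(parts._replace(query=encoded))
        post_url = urlunsplit(parts._replace(query=""))
        yield name, get_url, post_url, encoded


def scan(start_url, fetch, max_pages=50):
    """Breadth-first crawl; fetch(method, url, body) must return the response text."""
    queue, seen, findings = [start_url], {start_url}, []
    visited = 0
    while queue and visited < max_pages:
        url = queue.pop(0)
        visited += 1
        # Apply every rule to the current link before crawling further.
        for rule in RULES:
            for name, get_url, post_url, body in build_probes(url, rule):
                for method, target, data in (("GET", get_url, None),
                                             ("POST", post_url, body)):
                    text = fetch(method, target, data)
                    if re.search(rule["evidence"], text, re.IGNORECASE):
                        findings.append((rule["type"], method, url, name))
        # Then fetch the page itself and enqueue newly discovered links.
        for link in extract_links(url, fetch("GET", url, None)):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return findings
```

Passing `fetch` in as a parameter keeps the sketch independent of any particular HTTP client. A real callback could, for instance, wrap `urllib.request.urlopen`, which sends a POST when given a `data` argument. Matching error strings in the response is only one possible evidence check, and a production rule base would likely need more careful criteria.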

CLC number: