Netinfo Security, 2024, Vol. 24, Issue (12): 1799-1818. doi: 10.3969/j.issn.1671-1122.2024.12.001

A Survey on Trusted Execution Environment Based Secure Inference

SUN Yu1, XIONG Gaojian1, LIU Xiao2 (corresponding author), LI Yan3

1. School of Cyber Science and Technology, Beihang University, Beijing 100191, China

2. China Telecom Digital Intelligence Technology Co., Ltd., Beijing 100036, China

3. Phytium Information Technology Co., Ltd., Beijing 100083, China

Received: 2024-10-15; Online: 2024-12-10; Published: 2025-01-10

Corresponding author: LIU Xiao, liux45@chinatelecom.cn

About the authors:
- SUN Yu (b. 1985), male, from Shandong. Associate professor, Ph.D. Main research interest: intelligent system security.
- XIONG Gaojian (b. 2000), male, from Guizhou. Ph.D. candidate. Main research interest: artificial intelligence security.
- LIU Xiao (b. 1989), male, from Beijing. Ph.D. Main research interests: cloud computing security and artificial intelligence security.
- LI Yan (b. 1984), female, from Hebei. M.S. Main research interest: information technology application innovation.

Funding: National Natural Science Foundation of China (62472015); CCF-Phytium Fund (202306)

摘要:

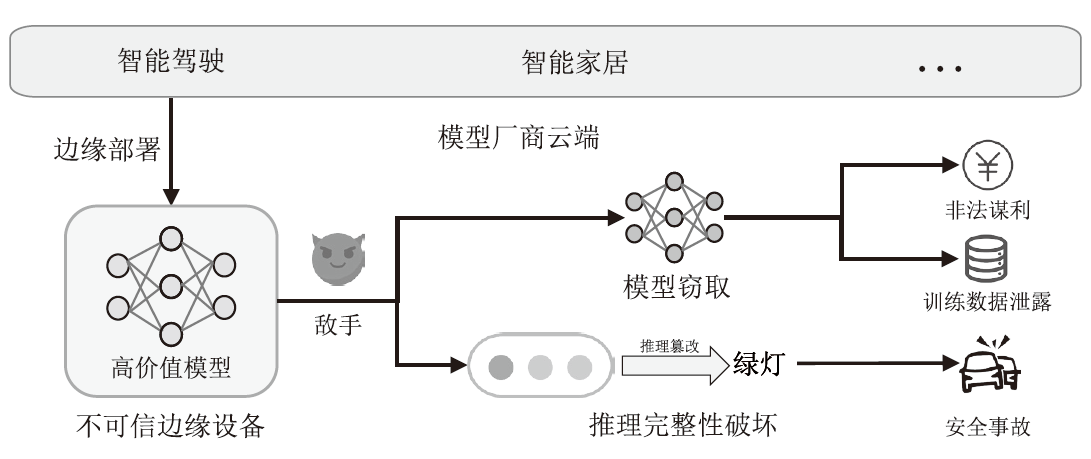

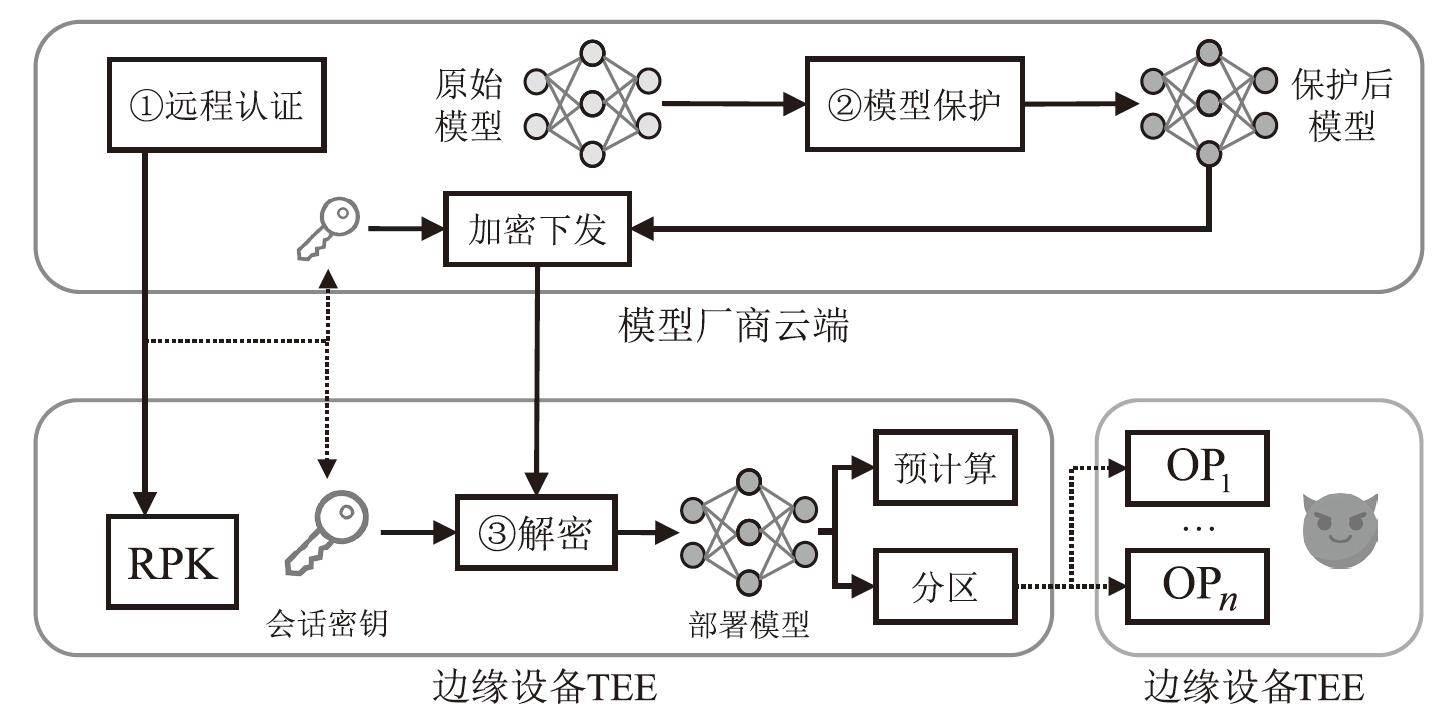

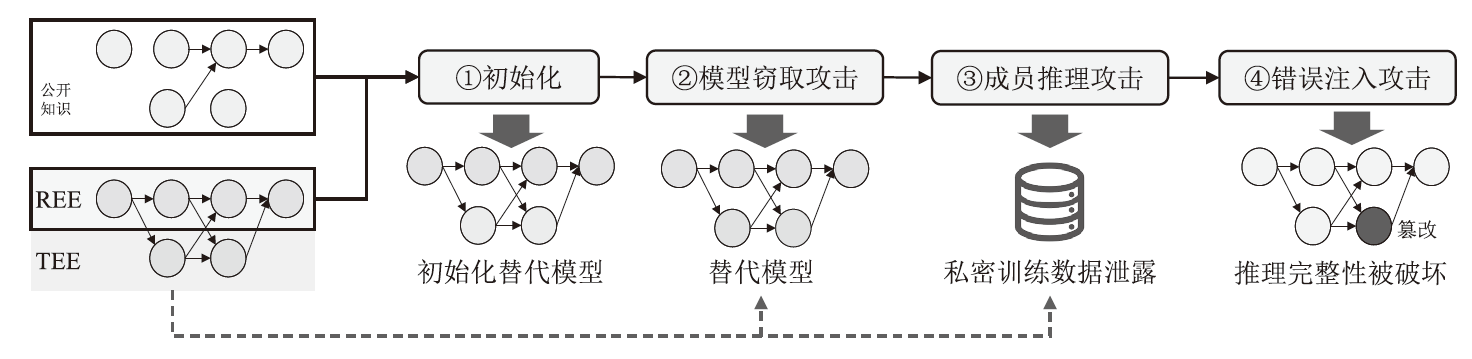

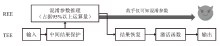

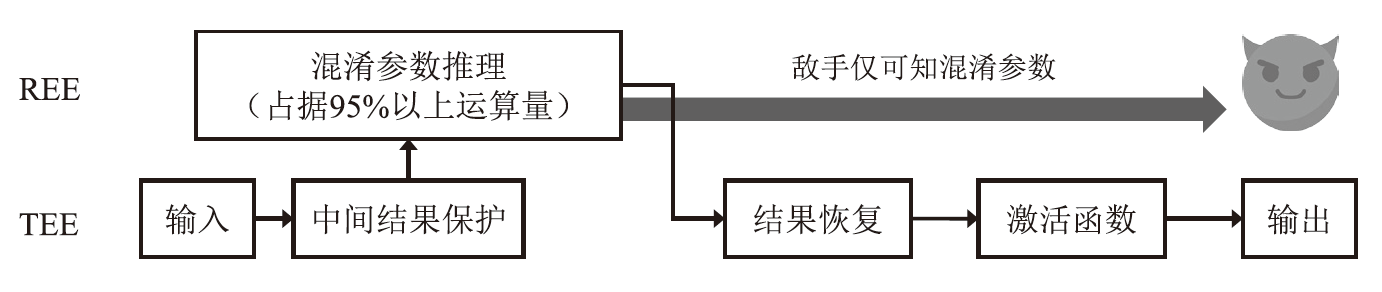

近年来,以深度神经网络为代表的机器学习技术在自动驾驶、智能家居和语音助手等领域获得了广泛应用。在上述高实时要求场景下,多数服务商将模型部署在边缘设备以规避通信带来的网络时延与通信开销。然而,边缘设备不受服务商控制,所部署模型易遭受模型窃取、错误注入和成员推理等攻击,进而导致高价值模型失窃、推理结果操纵及私密数据泄露等严重后果,使服务商市场竞争力受到致命打击。为解决上述问题,众多学者致力于研究基于可信执行环境(TEE)的安全推理,在保证模型可用性条件下保护模型的参数机密性与推理完整性。文章首先介绍相关背景知识,给出安全推理的定义,并归纳其安全模型;然后对现有TEE安全推理的模型机密性保护方案与推理完整性保护方案进行了分类介绍和比较分析;最后展望了TEE安全推理的未来研究方向。

Cite this article:

SUN Yu, XIONG Gaojian, LIU Xiao, LI Yan. A Survey on Trusted Execution Environment Based Secure Inference[J]. Netinfo Security, 2024, 24(12): 1799-1818.

Table 1 Runtime memory of common neural networks and their inference performance in a TEE

| Neural network | Parameter size (MB) | Runtime memory (MB) | CPU inference latency (s) | TEE inference latency (s) | TEE latency increase |
|---|---|---|---|---|---|
| AlexNet | 204.70 | 603.8 | 0.098 | 3.17 | 32.4× |
| VGG19 | 548.10 | 1210.6 | 0.970 | 45.40 | 46.8× |
| ResNet50 | 97.50 | 714.5 | 0.480 | 16.85 | 35.1× |
| Inception v3 | 90.92 | 742.3 | 0.670 | 23.65 | 35.3× |
| DenseNet169 | 56.60 | 845.0 | 0.930 | 35.15 | 37.8× |
| YOLO v5s | 27.60 | 1136.8 | 1.100 | 48.18 | 43.8× |
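The last column of Table 1 is the ratio of TEE inference latency to plain CPU inference latency. The short Python sketch below recomputes that ratio from the listed latencies. The dictionary layout and the function name `tee_slowdown` are illustrative choices, not taken from the paper.

```python
# Latencies (seconds) and the reported slowdown, copied from Table 1.
TABLE1 = {
    "AlexNet": (0.098, 3.17, 32.4),
    "VGG19": (0.970, 45.40, 46.8),
    "ResNet50": (0.480, 16.85, 35.1),
    "Inception v3": (0.670, 23.65, 35.3),
    "DenseNet169": (0.930, 35.15, 37.8),
    "YOLO v5s": (1.100, 48.18, 43.8),
}


def tee_slowdown(cpu_s: float, tee_s: float) -> float:
    """Factor by which running inside the TEE multiplies inference latency."""
    return tee_s / cpu_s


if __name__ == "__main__":
    for model, (cpu_s, tee_s, reported) in TABLE1.items():
        computed = tee_slowdown(cpu_s, tee_s)
        print(f"{model:<13} computed {computed:5.1f}x  reported {reported}x")
```

The latencies shown in the table are rounded, so the recomputed ratios match the reported ones only to within about 0.06. For example, AlexNet gives roughly 32.35 rather than 32.4.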

Table 2 Classification and summary of model confidentiality protection schemes

| Category | Secure inference method | TEE platform | Deployment platform | Security upper bound | Lossless accuracy | REE acceleration | Memory scheduling optimization | Parameter obfuscation | Intermediate result protection |
|---|---|---|---|---|---|---|---|---|---|
| Protecting the entire model | MLCapsule[ | Intel SGX | On-device | √ | √ | × | √ | × | √ |
| | Occlumency[ | Intel SGX | On-device | √ | √ | × | √ | × | √ |
| | Vessels[ | Intel SGX | On-device | √ | √ | × | √ | × | √ |
| | Lasagna[ | Intel SGX | Multi-device | √ | √ | × | √ | × | √ |
| | Penetralium[ | Intel SGX | On-device | √ | × | × | √ | × | √ |
| Protecting shallow layers | TMP[ | Intel SGX | Device-cloud | × | √ | √ | × | × | × |
| | Serdab[ | Intel SGX | Multi-device | × | √ | √ | × | × | × |
| | Origami[ | Intel SGX | Device-cloud | × | √ | √ | × | × | √ |
| Protecting deep layers | DarkneTZ[ | Arm TrustZone | On-device | × | √ | √ | × | × | × |
| | Shredder[ | N/A | Device-cloud | × | × | √ | × | × | √ |
| | eNNClave[ | Intel SGX | On-device | × | × | √ | × | × | √ |
| Protecting key parameters | AegisDNN[ | Intel SGX | On-device | × | √ | √ | × | × | × |
| | Magnitude[ | Intel SGX | On-device | × | √ | √ | × | √ | √ |
| | SOTER[ | Intel SGX | On-device | × | √ | √ | × | √ | √ |
| | ShadowNet[ | Arm TrustZone | On-device | × | √ | √ | √ | √ | √ |
| | NNSplitter[ | N/A | On-device | × | √ | √ | × | √ | × |
| | TEESlice[ | Intel SGX | On-device | × | × | √ | × | × | √ |

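Table 3 Summary of schemes that protect key model parameters

| Secure inference method | Parameter obfuscation mechanism | Intermediate result protection | Protected key parameters | Core idea |
|---|---|---|---|---|
| AegisDNN[ | None | None | Important layers identified by silent data corruption evaluation | Uses dynamic programming to protect the parameters of important layers in the TEE within a given latency budget |
| Magnitude[ | Additive obfuscation | OTP | The 1% of parameters with the largest magnitudes | Obfuscates the 1% largest-magnitude parameters and exactly recovers the results inside the TEE |
| SOTER[ | Associative multiplication | Associative multiplication | Parameters used to recover inference results | Obfuscates the parameters of 80% of the network layers and exactly recovers the results inside the TEE |
| ShadowNet[ | Additive and multiplicative obfuscation | OTP | Parameters used to recover inference results | Obfuscates all parameters and exactly recovers the results inside the TEE |
| NNSplitter[ | Reverse-optimization obfuscation | None | Key parameters found by reinforcement learning search | Uses reinforcement learning to search for key parameters and obfuscates them by reverse optimization |
| TEESlice[ | None | OTP | Important model slices | Partitions first and then trains, confining valuable information to the TEE partition for protection |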
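Several schemes in Table 3 share a common pattern. The REE runs the expensive linear layer on obfuscated weights and a one-time-padded (OTP) input, and the TEE then removes both masks to recover the exact result. The Python sketch below illustrates this pattern for a single matrix-vector product.

This is not the implementation of Magnitude, ShadowNet, or any other listed scheme. The prime modulus `P`, the top-magnitude selection rule, and all function names are assumptions made for illustration. Integer (quantized) arithmetic modulo `P` is assumed so that recovery is exact.

```python
import random

P = 2**31 - 1  # assumed prime modulus; all arithmetic is on integers mod P


def matvec_mod(W, x):
    """Dense matrix-vector product mod P (stands in for the untrusted REE kernel)."""
    return [sum(w * v for w, v in zip(row, x)) % P for row in W]


def obfuscate(W, frac, rng):
    """Add secret random offsets to the largest-|w| fraction of the weights.

    Returns the obfuscated weights (shipped to the REE) and the sparse
    offsets {(i, j): d} that stay inside the TEE.
    """
    ranked = sorted(
        ((abs(w), i, j) for i, row in enumerate(W) for j, w in enumerate(row)),
        reverse=True,
    )
    k = max(1, int(len(ranked) * frac))
    delta = {(i, j): rng.randrange(1, P) for _, i, j in ranked[:k]}
    W_obf = [[(w + delta.get((i, j), 0)) % P for j, w in enumerate(row)]
             for i, row in enumerate(W)]
    return W_obf, delta


def to_signed(v):
    """Map a residue mod P back to a signed integer in [-(P-1)/2, (P-1)/2]."""
    return v - P if v > P // 2 else v


def secure_linear(W_obf, delta, x, rng):
    """Compute W @ x although the REE only sees W_obf and a padded input."""
    # TEE (could be done offline): a fresh one-time pad r and the product W_obf @ r.
    r = [rng.randrange(P) for _ in x]
    pad_term = matvec_mod(W_obf, r)
    # REE: the heavy computation touches only blinded data.
    x_blind = [(xi + ri) % P for xi, ri in zip(x, r)]
    y_blind = matvec_mod(W_obf, x_blind)
    # TEE: cheap sparse correction delta @ x, then strip both masks.
    corr = [0] * len(W_obf)
    for (i, j), d in delta.items():
        corr[i] = (corr[i] + d * x[j]) % P
    return [to_signed((yb - pt - c) % P) for yb, pt, c in zip(y_blind, pad_term, corr)]


if __name__ == "__main__":
    rng = random.Random(0)
    W = [[3, -1, 7], [0, 5, -2]]
    x = [4, -3, 2]
    W_obf, delta = obfuscate(W, frac=0.2, rng=rng)
    print(secure_linear(W_obf, delta, x, rng))  # [29, -19], same as plain W @ x
```

Only the sparse correction `delta @ x` and two vector subtractions run inside the TEE, which is why such schemes keep most of the computation in the REE. The pad `r` must never be reused across queries. Multiplicative obfuscation can be sketched the same way, with the TEE dividing out a secret scale factor instead of subtracting an offset.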

表4

保护模型关键参数方案总结

| 分类 | 计算场景 | 安全上界 | 不可绕过 | 失败概率 |

|---|---|---|---|---|

| TEE保护 推理[ | TEE | √ | √ | 0 |

| 基于Freivalds 算法[ | TEE | × | √ | |

| 动态指纹比对 方案[ | REE | × | × | 0.01% |

| 分类 | 实时性 | 计算量 | 复杂度 | 核心思路 |

| TEE保护 推理[ | 弱 | TEE保护全推理过程,提供完整性 | ||

| 基于Freivalds 算法[ | 中 | 基于Freivalds算法,在TEE内以低复杂度验证REE侧推理结果完整性 | ||

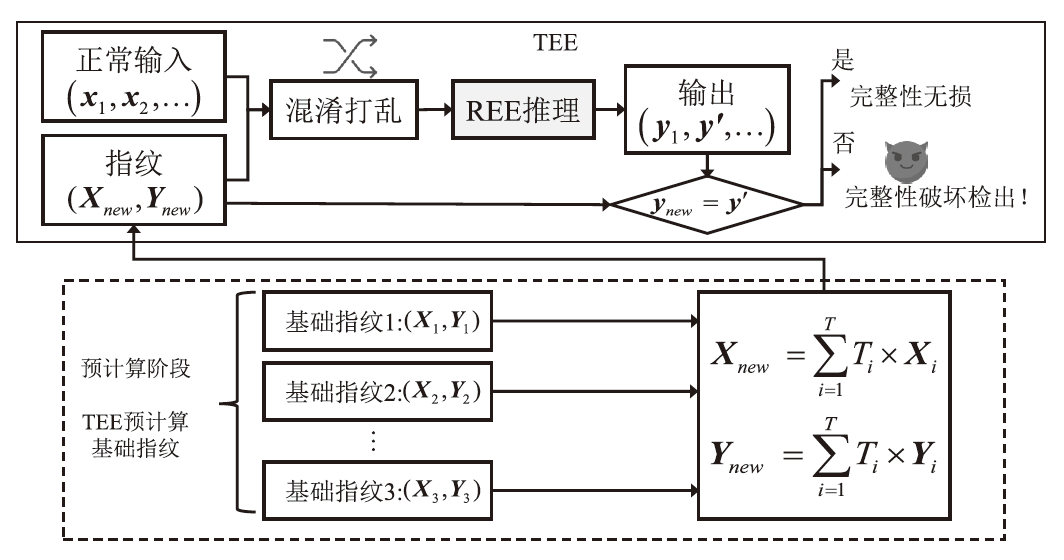

| 动态指纹比对 方案[ | 强 | 动态生成挑战值并混入批次数据,TEE校验响应值 |
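Table 4 contrasts ways of checking REE-side results from inside the TEE. The Python sketch below illustrates two of them:

- Freivalds' probabilistic check of a matrix product. Each round costs O(n²) instead of recomputing the product, which is O(n³) with the schoolbook algorithm.
- A generic challenge/response check, in which inputs with known outputs are hidden among the real batch items.

Both are illustrative assumptions rather than the exact protocols of the cited schemes. The function names, the 0/1 random vectors, and the shuffling strategy are choices made here.

```python
import random


def matvec(A, v):
    return [sum(a * b for a, b in zip(row, v)) for row in A]


def matmul(A, B):
    """Reference product, used only to build the example below."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def freivalds(A, B, C, rounds, rng):
    """Check the claim C == A @ B with `rounds` matrix-vector passes.

    Each round multiplies by a random 0/1 vector; a wrong C survives a round
    with probability at most 1/2, so at most 2**-rounds overall.
    """
    n = len(C[0])
    for _ in range(rounds):
        r = [rng.randint(0, 1) for _ in range(n)]
        if matvec(A, matvec(B, r)) != matvec(C, r):
            return False
    return True


def infer_with_challenges(untrusted_infer, batch, challenges, rng):
    """Hypothetical challenge/response check for batched REE inference.

    `challenges` holds (input, expected_output) pairs known only to the TEE.
    They are shuffled into the batch. If any response is wrong (or missing),
    the batch is rejected with None; otherwise the real outputs are returned
    in their original order.
    """
    tagged = [(i, x, None) for i, x in enumerate(batch)]
    tagged += [(None, x, y) for x, y in challenges]
    rng.shuffle(tagged)
    outputs = untrusted_infer([x for _, x, _ in tagged])
    if len(outputs) != len(tagged):
        return None
    results = [None] * len(batch)
    for (idx, _, expected), out in zip(tagged, outputs):
        if idx is None:
            if out != expected:
                return None
        else:
            results[idx] = out
    return results


def double_all(xs):
    """Toy stand-in for an honest REE model."""
    return [2 * x for x in xs]


if __name__ == "__main__":
    rng = random.Random(1)
    A, B = [[1, 2], [3, 4]], [[5, 6], [7, 8]]
    C = matmul(A, B)  # [[19, 22], [43, 50]]
    print(freivalds(A, B, C, 20, rng))                   # True
    print(freivalds(A, B, [[20, 22], [43, 50]], 20, rng))  # False except w.p. <= 2**-20
    print(infer_with_challenges(double_all, [1, 2, 3], [(10, 20), (7, 14)], rng))  # [2, 4, 6]
```

The challenge variant is only as strong as the REE's inability to tell challenge inputs from real ones. The sketch simply assumes that property.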

| [1] | RIBEIRO M, GROLINGER K, CAPRETZ M A M. MLaaS: Machine Learning as a Service[C]// IEEE. The 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA). New York: IEEE, 2015: 896-902. |

| [2] | HUSSAIN R, ZEADALLY S. Autonomous Cars: Research Results, Issues, and Future Challenges[J]. IEEE Communications Surveys & Tutorials, 2018, 21(2): 1275-1313. |

| [3] | CHEN Jiena, ZHANG Mingzhuo, DU Dehui, et al. Autonomous Driving Behavior Decision-Making with RoboSim Model Based on Bayesian Network[J]. Journal of Software, 2023, 34(8): 3836-3852. |

| [4] | DENG Hexuan, DING Liang, LIU Xuebo, et al. Improving Simultaneous Machine Translation with Monolingual Data[C]// AAAI. The Thirty-Seventh AAAI Conference on Artificial Intelligence and Thirty-Fifth Conference on Innovative Applications of Artificial Intelligence and Thirteenth Symposium on Educational Advances in Artificial Intelligence. Menlo Park: AAAI, 2023: 12728-12736. |

| [5] | ZHAO Yuran, MENG Kui. Research on English-Chinese Machine Translation Based on Sentence Grouping[J]. Netinfo Security, 2021, 21(7): 63-71. |

| [6] | STOJKOSKA B L R, TRIVODALIEV K V. A Review of Internet of Things for Smart Home: Challenges and Solutions[J]. Journal of Cleaner Production, 2017, 140: 1454-1464. |

| [7] | MAO Bo, XU Ke, JIN Yuehui, et al. DeepHome: A Control Model of Smart Home Based on Deep Learning[J]. Chinese Journal of Computers, 2018, 41(12): 2689-2701. |

| [8] | BUCZAK A L, GUVEN E. A Survey of Data Mining and Machine Learning Methods for Cyber Security Intrusion Detection[J]. IEEE Communications Surveys & Tutorials, 2016, 18(2): 1153-1176. |

| [9] | CHENG Tianheng, SONG Lin, GE Yixiao, et al. YOLO-World: Real-Time Open-Vocabulary Object Detection[EB/OL]. (2024-02-22)[2024-10-10]. https://doi.org/10.48550/arXiv.2401.17270. |

| [10] | SUN Zhichuang, SUN Ruimin, LU Long. Mind Your Weight(s): A Large-Scale Study on Insufficient Machine Learning Model Protection in Mobile Apps[C]// USENIX. The 30th USENIX Security Symposium (USENIX Security 21). Berkeley: USENIX, 2021: 1955-1972. |

| [11] | Guosheng Securities Co., Ltd. How Much Power does ChatGPT Need?[EB/OL]. (2023-02-12)[2024-10-10]. https://www.fxbaogao.com/detail/3565665. |

| [12] | RAKIN A S, HE Zhezhi, FAN Deliang. Bit-Flip Attack: Crushing Neural Network with Progressive Bit Search[C]// IEEE. The 2019 IEEE/CVF International Conference on Computer Vision (ICCV). New York: IEEE, 2019: 1211-1220. |

| [13] | YAO Fan, RAKIN A S, FAN Deliang, et al. DeepHammer: Depleting the Intelligence of Deep Neural Networks through Targeted Chain of Bit Flips[C]// USENIX. 29th USENIX Security Symposium (USENIX Security 20). Berkeley: USENIX, 2020: 1463-1480. |

| [14] | RADFORD A, KIM J W, HALLACY C, et al. Learning Transferable Visual Models from Natural Language Supervision[C]// PMLR. International Conference on Machine Learning. New York: PMLR, 2021: 8748-8763. |

| [15] | ACHIAM J, ADLER S, AGARWAL S, et al. GPT-4 Technical Report[EB/OL]. (2023-03-15)[2024-10-10]. https://arxiv.org/abs/2303.08774. |

| [16] | Baidu Online Network Technology (Beijing) Co., Ltd. AgentBuilder[EB/OL]. (2023-11-15)[2024-10-10]. https://agents.baidu.com/docs/model/ERNIE_bot_introduce/. |

| [17] | BAI Jinze, BAI Shuai, CHU Yunfei, et al. Qwen Technical Report[EB/OL]. (2023-09-28)[2024-10-10]. https://arxiv.org/abs/2309.16609. |

| [18] | ZHOU Chunyi, CHEN Dawei, WANG Shang, et al. Research and Challenge of Distributed Deep Learning Privacy and Security Attack[J]. Journal of Computer Research and Development, 2021, 58(5): 927-943. |

| [19] | HE Xinlei, LI Zheng, XU Weilin, et al. Membership-Doctor: Comprehensive Assessment of Membership Inference against Machine Learning Models[EB/OL]. (2022-08-22)[2024-10-10]. https://arxiv.org/abs/2208.10445. |

| [20] | SHOKRI R, STRONATI M, SONG Congzheng, et al. Membership Inference Attacks against Machine Learning Models[C]// IEEE. The 2017 IEEE Symposium on Security and Privacy (SP). New York: IEEE, 2017: 3-18. |

| [21] | CHOQUETTE-CHOO C A, TRAMER F, CARLINI N, et al. Label-Only Membership Inference Attacks[C]// PMLR. The 38th International Conference on Machine Learning. New York: PMLR, 2021: 1964-1974. |

| [22] | GILAD-BACHRACH R, DOWLIN N, LAINE K, et al. CryptoNets: Applying Neural Networks to Encrypted Data with High Throughput and Accuracy[C]// PMLR. The 33rd International Conference on Machine Learning. New York: PMLR, 2016: 201-210. |

| [23] | MISHRA P, LEHMKUHL R, SRINIVASAN A, et al. Delphi: A Cryptographic Inference System for Neural Networks[C]// USENIX. The 29th USENIX Security Symposium (USENIX Security 20). Berkeley: USENIX, 2020: 2505-2522. |

| [24] | JUVEKAR C, VAIKUNTANATHAN V, CHANDRAKASAN A. Gazelle: A Low Latency Framework for Secure Neural Network Inference[EB/OL]. (2018-01-16)[2024-10-10]. https://arxiv.org/abs/1801.05507. |

| [25] | RATHEE D, RATHEE M, KUMAR N, et al. CrypTFlow2: Practical 2-Party Secure Inference[C]// ACM. The 2020 ACM SIGSAC Conference on Computer and Communications Security. New York: ACM, 2020: 325-342. |

| [26] | AL B A, JIN Chao, LIN Jie, et al. Towards the AlexNet Moment for Homomorphic Encryption: HCNN, the First Homomorphic CNN on Encrypted Data with GPUs[J]. IEEE Transactions on Emerging Topics in Computing, 2021, 9(3): 1330-1343. |

| [27] | NG L K L, CHOW S S M. SoK: Cryptographic Neural-Network Computation[C]// IEEE. The 2023 IEEE Symposium on Security and Privacy (SP). New York: IEEE, 2023: 497-514. |

| [28] | WAGH S, TOPLE S, BENHAMOUDA F, et al. Falcon: Honest-Majority Maliciously Secure Framework for Private Deep Learning[J]. Proceedings on Privacy Enhancing Technologies, 2021, 2021(1): 188-208. |

| [29] | LIU Zhijuan, GAO Jun, DING Qifeng, et al. Research on Development of Trusted Execution Environment Technology on Mobile Platform[J]. Netinfo Security, 2018, 18(2): 84-91. |

| [30] | Standardization Administration of the People’s Republic of China. Information Security Technology-Trusted Execution Environment-Basic Security Specification[EB/OL]. (2022-04-15)[2024-10-10]. https://openstd.samr.gov.cn/bzgk/gb/newGbInfo?hcno=B5B601094A265390BF353980D90E534D. |

| [31] | ZHANG Fengwei, ZHOU Lei, ZHANG Yiming, et al. Trusted Execution Environment: State-of-the-Art and Future Directions[J]. Journal of Computer Research and Development, 2024, 61(1): 243-260. |

| [32] | TANG Yu, ZHANG Chi. A Privacy Protection Scheme for Information-Centric Networking Based on Intel SGX[J]. Netinfo Security, 2023, 23(6): 55-65. |

| [33] | FAN Guannan, DONG Pan. Research on Trusted Execution Environment Building Technology Based on TrustZone[J]. Netinfo Security, 2016, 16(3): 21-27. |

| [34] | AMD. SEV-SNP: Strengthening VM Isolation with Integrity Protection and More[EB/OL]. [2024-10-10]. https://www.amd.com/system/files/TechDocs/SEV-SNP-strengthening-vmisolation-with-integrity-protection-and-more.pdf. |

| [35] | Phytium Information Technology Co., Ltd. Phytium Trusted Execution Environment Technology White Paper[EB/OL]. (2023-05-31)[2024-10-10]. https://www.phytium.com.cn/homepage/download/. |

| [36] | Phytium Information Technology Co., Ltd. Phytium-Optee[EB/OL]. (2024-05-30)[2024-10-10]. https://gitee.com/phytium_embedded/phytium-optee. |

| [37] | SHEN Youren, TIAN Hongliang, CHEN Yu, et al. Occlum: Secure and Efficient Multitasking Inside a Single Enclave of Intel SGX[C]// ACM. The Twenty-Fifth International Conference on Architectural Support for Programming Languages and Operating Systems. New York: ACM, 2020: 955-970. |

| [38] | TSAI C, PORTER D E, VIJ M. Graphene-SGX: A Practical Library OS for Unmodified Applications on SGX[C]// USENIX. The 2017 USENIX Conference on Usenix Annual Technical Conference. Berkeley: USENIX, 2017: 645-658. |

| [39] | KELEKET G C J Y, YI Wenzhe, WANG Juan. A Lightweight Trusted Execution Environment Construction Method for Fabric Chaincode Based on SGX[J]. Netinfo Security, 2022, 22(7): 73-83. |

| [40] | LEE T, LIN Zhiqi, PUSHP S, et al. Occlumency: Privacy-Preserving Remote Deep-Learning Inference Using SGX[C]// ACM. The 25th Annual International Conference on Mobile Computing and Networking. New York: ACM, 2019: 1-17. |

| [41] | YANG Mengda, YI Wenzhe, WANG Juan, et al. Penetralium: Privacy-Preserving and Memory-Efficient Neural Network Inference at the Edge[J]. Future Generation Computer Systems, 2024, 156: 30-41. |

| [42] | Ultralytics. Yolov5 of Ultralytics HUB[EB/OL]. (2024-10-05)[2024-10-10]. https://github.com/ultralytics/yolov5. |

| [43] | SANYAL S, ADDEPALLI S, BABU R V. Towards Data-Free Model Stealing in a Hard Label Setting[C]// IEEE. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New York: IEEE, 2022: 15263-15272. |

| [44] | OREKONDY T, SCHIELE B, FRITZ M. Knockoff Nets: Stealing Functionality of Black-Box Models[C]// IEEE. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New York: IEEE, 2019: 4949-4958. |

| [45] | YU Honggang, YANG Kaichen, ZHANG Teng, et al. CloudLeak: Large-Scale Deep Learning Models Stealing through Adversarial Examples[EB/OL]. (2020-02-23)[2024-10-10]. https://www.ndss-symposium.org/wp-content/uploads/2020/02/24178-paper.pdf. |

| [46] | LIU Yannan, WEI Lingxiao, LUO Bo, et al. Fault Injection Attack on Deep Neural Network[C]// IEEE. 2017 IEEE/ACM International Conference on Computer-Aided Design (ICCAD). New York: IEEE, 2017: 131-138. |

| [47] | VAN D V V, FRATANTONIO Y, LINDORFER M, et al. Drammer: Deterministic Rowhammer Attacks on Mobile Platforms[C]// ACM. The 2016 ACM SIGSAC Conference on Computer and Communications Security. New York: ACM, 2016: 1675-1689. |

| [48] | SASY S, GORBUNOV S, FLETCHER C W. ZeroTrace: Oblivious Memory Primitives from Intel SGX[EB/OL]. (2018-02-18)[2024-10-10]. https://www.semanticscholar.org/paper/ZeroTrace-:-Oblivious-Memory-Primitives-from-Intel-Sasy-Gorbunov/7fd257b33847ac8f07da472a7f8c10311d9061dc. |

| [49] | DESSOUKY G, FRASSETTO T, SADEGHI A R. HYBCACHE: Hybrid Side-Channel-Resilient Caches for Trusted Execution Environments[C]// USENIX. The 29th USENIX Conference on Security Symposium. Berkeley: USENIX, 2020: 451-468. |

| [50] | YAROM Y, FALKNER K. FLUSH+RELOAD: A High Resolution, Low Noise, L3 Cache Side-Channel Attack[C]//USENIX. The 23rd USENIX Security Symposium. Berkeley: USENIX, 2014: 719-732. |

| [51] | EVTYUSHKIN D, RILEY R, ABU-GHAZALEH N C A E, et al. BranchScope: A New Side-Channel Attack on Directional Branch Predictor[C]// ACM. The Twenty-Third International Conference on Architectural Support for Programming Languages and Operating Systems. New York: ACM, 2018, 53: 693-707. |

| [52] | WANG Wenhao, CHEN Guoxing, PAN Xiaorui, et al. Leaky Cauldron on the Dark Land: Understanding Memory Side-Channel Hazards in SGX[C]// ACM. The 2017 ACM SIGSAC Conference on Computer and Communications Security. New York: ACM, 2017: 2421-2434. |

| [53] | CHEN Guoxing, CHEN Sanchuan, XIAO Yuan, et al. SgxPectre: Stealing Intel Secrets from SGX Enclaves via Speculative Execution[J]. IEEE Security & Privacy, 2020, 18(3): 28-37. |

| [54] | ZHANG Ziqi, GONG Chen, CAI Yifeng, et al. No Privacy Left Outside: On the (in-)Security of TEE-Shielded DNN Partition for on-Device ML[C]// IEEE. 2024 IEEE Symposium on Security and Privacy (SP). New York: IEEE, 2024: 3327-3345. |

| [55] | JOHNSON S, SCARLATA V, ROZAS C, et al. Intel Software Guard Extensions: EPID Provisioning and Attestation Services[EB/OL]. (2016-12-30)[2024-10-10]. https://community.intel.com/legacyfs/online/drupal_files/managed/57/0e/ww10-2016-sgx-provisioning-and-attestation-final.pdf. |

| [56] | HUA Weizhe, ZHANG Zhiru, SUH G E. Reverse Engineering Convolutional Neural Networks through Side-Channel Information Leaks[C]// IEEE. 2018 55th ACM/ESDA/IEEE Design Automation Conference (DAC). New York: IEEE, 2018: 1-6. |

| [57] | RAKIN A S, CHOWDHURYY M H I, YAO Fan, et al. DeepSteal: Advanced Model Extractions Leveraging Efficient Weight Stealing in Memories[C]// IEEE. 2022 IEEE Symposium on Security and Privacy (SP). New York: IEEE, 2022: 1157-1174. |

| [58] | YAN Mengjia, FLETCHER C, TORRELLAS J. Cache Telepathy: Leveraging Shared Resource Attacks to Learn DNN Architectures[C]// USENIX. The 29th USENIX Conference on Security Symposium. Berkeley: USENIX, 2020: 2003-2020. |

| [59] | HANZLIK L, ZHANG Yang, GROSSE K, et al. MLCapsule: Guarded Offline Deployment of Machine Learning as a Service[C]// IEEE. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). New York: IEEE, 2021: 3295-3304. |

| [60] | KIM K, KIM C H, RHEE J J, et al. Vessels: Efficient and Scalable Deep Learning Prediction on Trusted Processors[C]// ACM. The 11th ACM Symposium on Cloud Computing. New York: ACM, 2020: 462-476. |

| [61] | LI Yuepeng, ZENG Deze, GU Lin, et al. Efficient and Secure Deep Learning Inference in Trusted Processor Enabled Edge Clouds[J]. IEEE Transactions on Parallel and Distributed Systems, 2022, 33(12): 4311-4325. |

| [62] | GU Zhongshu, HUANG Heqing, ZHANG Jialong, et al. Confidential Inference via Ternary Model Partitioning[EB/OL]. (2018-07-03)[2024-10-10]. https://api.semanticscholar.org/CorpusID:235946760. |

| [63] | ELGAMAL T, NAHRSTEDT K. Serdab: An IoT Framework for Partitioning Neural Networks Computation Across Multiple Enclaves[C]// IEEE. 2020 20th IEEE/ACM International Symposium on Cluster, Cloud and Internet Computing (CCGRID). New York: IEEE, 2020: 519-528. |

| [64] | NARRA K G, LIN Zhifeng, WANG Yongqin, et al. Origami Inference: Private Inference Using Hardware Enclaves[C]// IEEE. 2021 IEEE 14th International Conference on Cloud Computing (CLOUD). New York: IEEE, 2021: 78-84. |

| [65] | MO Fan, SHAMSABADI A S, KATEVAS K, et al. DarkneTZ: Towards Model Privacy at the Edge Using Trusted Execution Environments[C]// ACM. Proceedings of the 18th International Conference on Mobile Systems, Applications, and Services. New York: ACM, 2020: 161-174. |

| [66] | MIRESHGHALLAH F, TARAM M, RAMRAKHYANI P, et al. Shredder: Learning Noise Distributions to Protect Inference Privacy[C]// ACM. Proceedings of the Twenty-Fifth International Conference on Architectural Support for Programming Languages and Operating Systems. New York: ACM, 2020: 3-18. |

| [67] | SCHLÖGL A, BÖHME R. ENNclave: Offline Inference with Model Confidentiality[C]// ACM. Proceedings of the 13th ACM Workshop on Artificial Intelligence and Security. New York: ACM, 2020: 93-104. |

| [68] | XIANG Yecheng, WANG Yidi, CHOI H, et al. AegisDNN: Dependable and Timely Execution of DNN Tasks with SGX[C]// IEEE. 2021 IEEE Real-Time Systems Symposium (RTSS). New York: IEEE, 2021: 68-81. |

| [69] | HOU Jiahui, LIU Huiqi, LIU Yunxin, et al. Model Protection: Real-Time Privacy-Preserving Inference Service for Model Privacy at the Edge[J]. IEEE Transactions on Dependable and Secure Computing, 2022, 19(6): 4270-4284. |

| [70] | SHEN Tianxiang, QI Ji, JIANG Jianyu, et al. SOTER: Guarding Black-Box Inference for General Neural Networks at the Edge[C]// USENIX. 2022 USENIX Annual Technical Conference (USENIX ATC 22). Berkeley: USENIX, 2022: 723-738. |

| [71] | SUN Zhichuang, SUN Ruimin, LIU Changming, et al. ShadowNet: A Secure and Efficient On-Device Model Inference System for Convolutional Neural Networks[C]// IEEE. 2023 IEEE Symposium on Security and Privacy (SP). New York: IEEE, 2023: 1596-1612. |

| [72] | ZHOU Tong, LUO Yukui, REN Shaolei, et al. NNSplitter: An Active Defense Solution to DNN Model via Automated Weight Obfuscation[C]// PMLR. The 40th International Conference on Machine Learning. New York: PMLR, 2023: 42614-42624. |

| [73] | BREIER J, JAP D, HOU Xiaolu, et al. SNIFF: Reverse Engineering of Neural Networks with Fault Attacks[J]. IEEE Transactions on Reliability, 2022, 71(4): 1527-1539. |

| [74] | ROLNICK D, KORDING K. Reverse-Engineering Deep ReLU Networks[C]// PMLR. The 37th International Conference on Machine Learning. New York: PMLR, 2020: 8178-8187. |

| [75] | LIU Zhibo, YUAN Yuanyuan, WANG Shuai, et al. Decompiling x86 Deep Neural Network Executables[C]// USENIX. The Proceedings of the 32nd USENIX Security Symposium. Berkeley: USENIX, 2023: 7357-7374. |

| [76] | HU Jian, GONG Ke, MAO Yimin, et al. Parallel Deep Convolution Neural Network Optimization Based on Im2col[J]. Application Research of Computers, 2022, 39(10): 2950-2956. |

| [77] | YANAI K, TANNO R, OKAMOTO K. Efficient Mobile Implementation of a CNN-Based Object Recognition System[C]// ACM. Proceedings of the 24th ACM international conference on Multimedia. New York: ACM, 2016: 362-366. |

| [78] | YE Hanchen, ZHANG Xiaofan, HUANG Zhize, et al. HybridDNN: A Framework for High-Performance Hybrid DNN Accelerator Design and Implementation[C]// IEEE. 2020 57th ACM/IEEE Design Automation Conference (DAC). New York: IEEE, 2020: 1-6. |

| [79] | TIAN Hongliang, ZHANG Qiong, YAN Shoumeng, et al. Switchless Calls Made Practical in Intel SGX[C]// ACM. Proceedings of the 3rd Workshop on System Software for Trusted Execution. New York: ACM, 2018: 22-27. |

| [80] | SWAMI Y. SGX Remote Attestation Is Not Sufficient[EB/OL]. (2017-08-01)[2024-10-10]. https://api.semanticscholar.org/CorpusID:41067899. |

| [81] | TRAMER F, BONEH D. Slalom: Fast, Verifiable and Private Execution of Neural Networks in Trusted Hardware[EB/OL]. (2018-06-08)[2024-10-10]. https://api.semanticscholar.org/CorpusID:47012356. |

| [82] | ZHANG Yuheng, JIA Ruoxi, PEI Hengzhi, et al. The Secret Revealer: Generative Model-Inversion Attacks against Deep Neural Networks[C]// IEEE. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New York: IEEE, 2020: 250-258. |

| [83] | FREDRIKSON M, JHA S, RISTENPART T. Model Inversion Attacks that Exploit Confidence Information and Basic Countermeasures[C]// ACM. The 22nd ACM SIGSAC Conference on Computer and Communications Security. New York: ACM, 2015: 1322-1333. |

| [84] | WU Xi, FREDRIKSON M, JHA S, et al. A Methodology for Formalizing Model-Inversion Attacks[C]// IEEE. 2016 IEEE 29th Computer Security Foundations Symposium (CSF). New York: IEEE, 2016: 355-370. |

| [85] | XU Sheng, LI Yanjing, LIN Mingbao, et al. Q-DETR: An Efficient Low-Bit Quantized Detection Transformer[C]// IEEE. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New York: IEEE, 2023: 3842-3851. |

| [86] | NEYSHABUR B, SEDGHI H, ZHANG Chiyuan. What is Being Transferred in Transfer Learning?[J]. Advances in Neural Information Processing Systems, 2020, 33: 512-523. |

| [87] | WEISS K, KHOSHGOFTAAR T M, WANG Dingding. A Survey of Transfer Learning[J]. Journal of Big Data, 2016, 3: 1-40. |

| [88] | HU E J, SHEN Yelong, WALLIS P, et al. LoRA: Low-Rank Adaptation of Large Language Models[EB/OL]. (2021-06-17)[2024-10-10]. https://api.semanticscholar.org/CorpusID:235458009. |

| [89] | PAN Rui, LIU Xiang, DIAO Shizhe, et al. LISA: Layerwise Importance Sampling for Memory-Efficient Large Language Model Fine-Tuning[EB/OL]. (2024-03-26)[2024-10-10]. https://arxiv.org/abs/2403.17919. |

| [90] | AGHAJANYAN A, ZETTLEMOYER L, GUPTA S. Intrinsic Dimensionality Explains the Effectiveness of Language Model Fine-Tuning[EB/OL]. (2020-12-22)[2024-10-10]. https://doi.org/10.48550/arxiv.2012.13255. |

| [91] | WANG Ji, BAO Weidong, SUN Lichao, et al. Private Model Compression via Knowledge Distillation[J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2019, 33(1): 1190-1197. |

| [92] | POLINO A, PASCANU R, ALISTARH D. Model Compression via Distillation and Quantization[EB/OL]. (2018-02-15)[2024-10-10]. https://api.semanticscholar.org/CorpusID:3323727. |

| [93] | HWANG R, KANG M, LEE J, et al. GROW: A Row-Stationary Sparse-Dense GEMM Accelerator for Memory-Efficient Graph Convolutional Neural Networks[C]// IEEE. 2023 IEEE International Symposium on High-Performance Computer Architecture (HPCA). New York: IEEE, 2023: 42-55. |

| [94] | MISHRA A, LATORRE J A, POOL J, et al. Accelerating Sparse Deep Neural Networks[EB/OL]. (2021-04-16)[2024-10-10]. https://arxiv.org/abs/2104.08378. |

| [95] | AGARAP A F. Deep Learning Using Rectified Linear Units (ReLU)[EB/OL]. (2018-03-22)[2024-10-10]. https://arxiv.org/abs/1803.08375. |

| [96] | ZHANG Chao, WOODLAND P C. Parameterised sigmoid and ReLU Hidden Activation Functions for DNN Acoustic Modelling[C]// ISCA. 16th Annual Conference of the International Speech Communication Association. Dresden: ISCA, 2015: 3224-3228. |

| [97] | NEHAL A, AHLAWAT P. Securing IoT Applications with OP-TEE from Hardware Level OS[C]// IEEE. 2019 3rd International Conference on Electronics, Communication and Aerospace Technology (ICECA). New York: IEEE, 2019: 1441-1444. |

| [98] | Linaro. Open Portable Trusted Execution Environment[EB/OL]. (2024-07-12)[2024-10-10]. https://www.op-tee.org. |

| [99] | ZHANG Ziqi, NG L K L, LIU Bingyan, et al. TEESlice: Slicing DNN Models for Secure and Efficient Deployment[C]// ACM. The 2nd ACM International Workshop on AI and Software Testing/Analysis. New York: ACM, 2022: 1-8. |

| [100] | MORGADO P, VASCONCELOS N. NetTailor:Tuning the Architecture, Not Just the Weights[C]// IEEE. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New York: IEEE, 2019: 3039-3049. |

| [101] | SUN Yu, XIONG Gaojian, LIU Jianhua, et al. TSQP: Safeguarding Real-Time Inference for Quantization Neural Networks on Edge Devices[EB/OL]. [2024-10-10]. https://www.computer.org/csdl/proceedings-article/sp/2025/223600a001/21B7PUpAEnu. |

| [102] | FREIVALDS R. Fast Probabilistic Algorithms[C]// Springer. The International Symposium on Mathematical Foundations of Computer Science. Heidelberg: Springer, 2005: 57-69. |

| [103] | HAN Kai, WANG Yunhe, CHEN Hanting, et al. A Survey on Vision Transformer[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, 45(1): 87-110. |

| [104] | CARION N, MASSA F, SYNNAEVE G, et al. End-to-End Object Detection with Transformers[M]. Heidelberg: Springer, 2020. |

| [105] | TOUVRON H, LAVRIL T, IZACARD G, et al. LLaMA: Open and Efficient Foundation Language Models[EB/OL]. (2023-02-27)[2024-10-10]. https://api.semanticscholar.org/CorpusID:257219404. |

| [106] | Hangzhou Linkway Technology Co., Ltd. Om Vision Large Model[EB/OL]. (2024-04-06)[2024-10-10]. https://om.linker.cc/. |

| [107] | CHENG P, OZGA W, VALDEZ E, et al. Intel TDX Demystified: A Top-Down Approach[J]. ACM Computing Surveys, 2024, 56(9): 1-33. |

| [108] | LI Xupeng, LI Xuheng, DALL C, et al. Design and Verification of the Arm Confidential Compute Architecture[C]// USENIX. The 16th USENIX Symposium on Operating Systems Design and Implementation (OSDI 22). Berkeley: USENIX, 2022: 465-484. |

| [109] | ROMBACH R, BLATTMANN A, LORENZ D, et al. High-Resolution Image Synthesis with Latent Diffusion Models[C]// IEEE. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New York: IEEE, 2022: 10674-10685. |

| [110] | LIU Yixin, ZHANG Kai, LI Yuan, et al. Sora: A Review on Background, Technology, Limitations, and Opportunities of Large Vision Models[EB/OL]. (2024-02-27)[2024-10-10]. https://api.semanticscholar.org/CorpusID:268032569. |

| [111] | DUAN Sijing, WANG Dan, REN Ju, et al. Distributed Artificial Intelligence Empowered by End-Edge-Cloud Computing: A Survey[J]. IEEE Communications Surveys & Tutorials, 2023, 25(1): 591-624. |

| [112] | HU Shijing, DENG Ruijun, DU Xin, et al. LAECIPS: Large Vision Model Assisted Adaptive Edge-Cloud Collaboration for IoT-Based Perception System[EB/OL]. (2024-04-16)[2024-10-10]. https://api.semanticscholar.org/CorpusID:269157260. |

| [113] | CHEN Ning, YANG Jie, CHENG Zhipeng, et al. GainNet: Coordinates the Odd Couple of Generative AI and 6G Networks[J]. IEEE Network, 2024, 38(5): 56-65. |
