Netinfo Security ›› 2025, Vol. 25 ›› Issue (1): 63-77. doi: 10.3969/j.issn.1671-1122.2025.01.006

Research on Federated Learning Adaptive Differential Privacy Method Based on Heterogeneous Data

XU Ruzhi, TONG Yumeng, DAI Lipeng

- School of Control and Computer Engineering, North China Electric Power University, Beijing 102206, China

- Received: 2024-09-28 Online: 2025-01-10 Published: 2025-02-14

- Contact: TONG Yumeng E-mail: tongym02@163.com

- About the authors: XU Ruzhi (born 1966), female, from Jiangxi, professor, Ph.D., main research interests: AI security and smart grids | TONG Yumeng (born 2002), female, from Hebei, master's student, main research interest: federated learning | DAI Lipeng (born 1999), male, from Anhui, master's student, main research interests: federated learning and differential privacy

- Funding: National Key R&D Program of China (62372173)

Abstract:

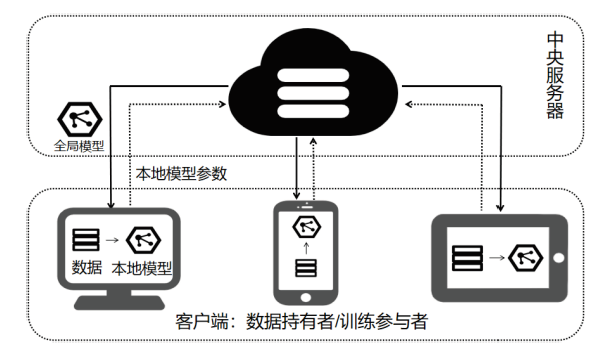

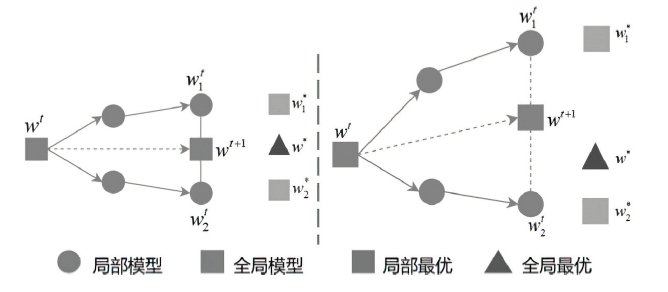

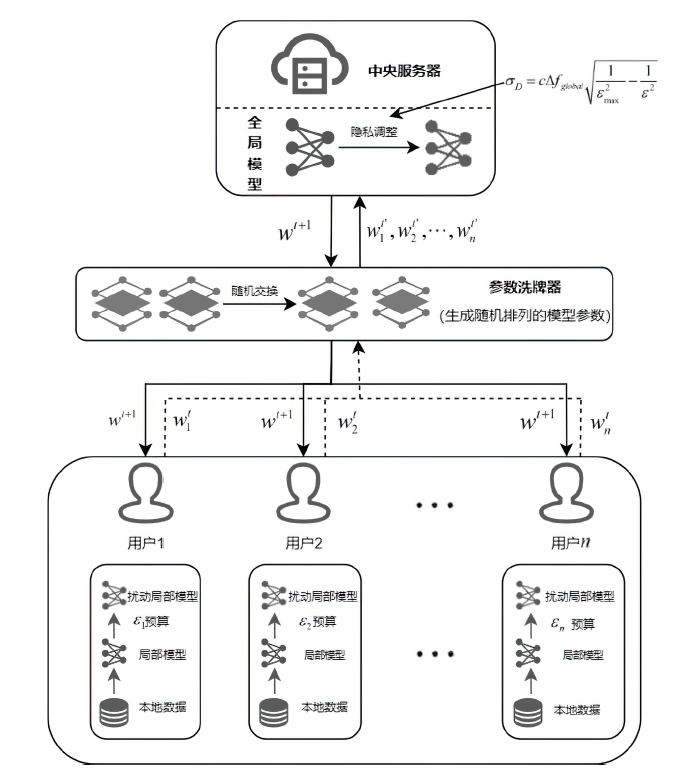

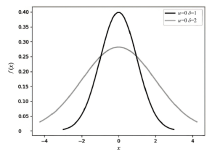

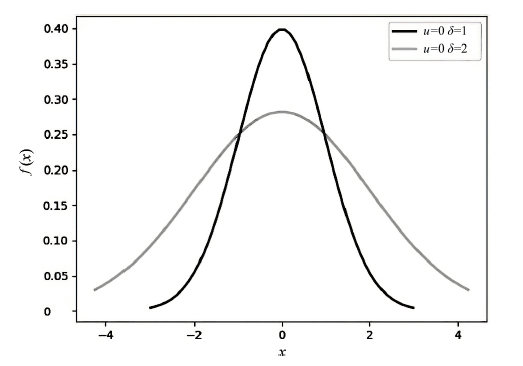

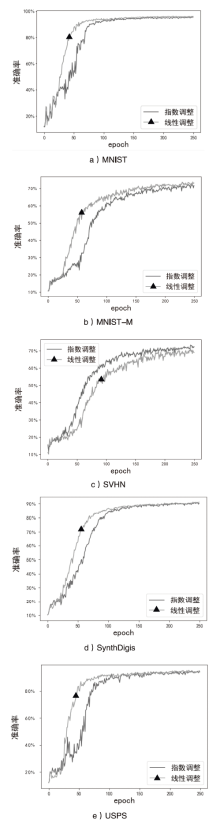

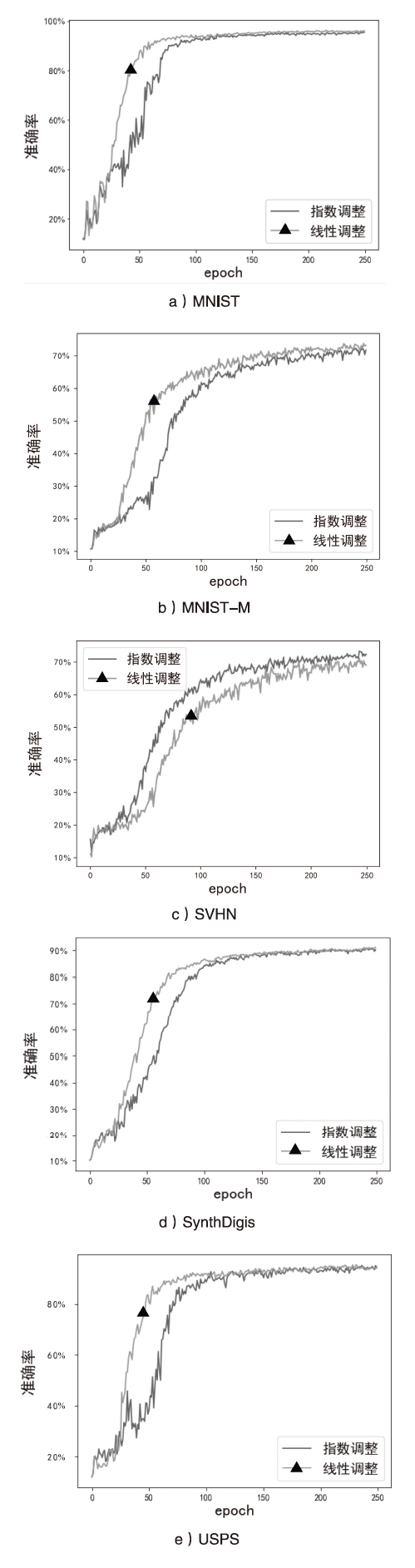

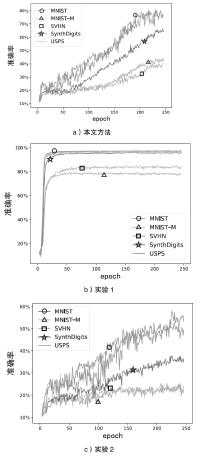

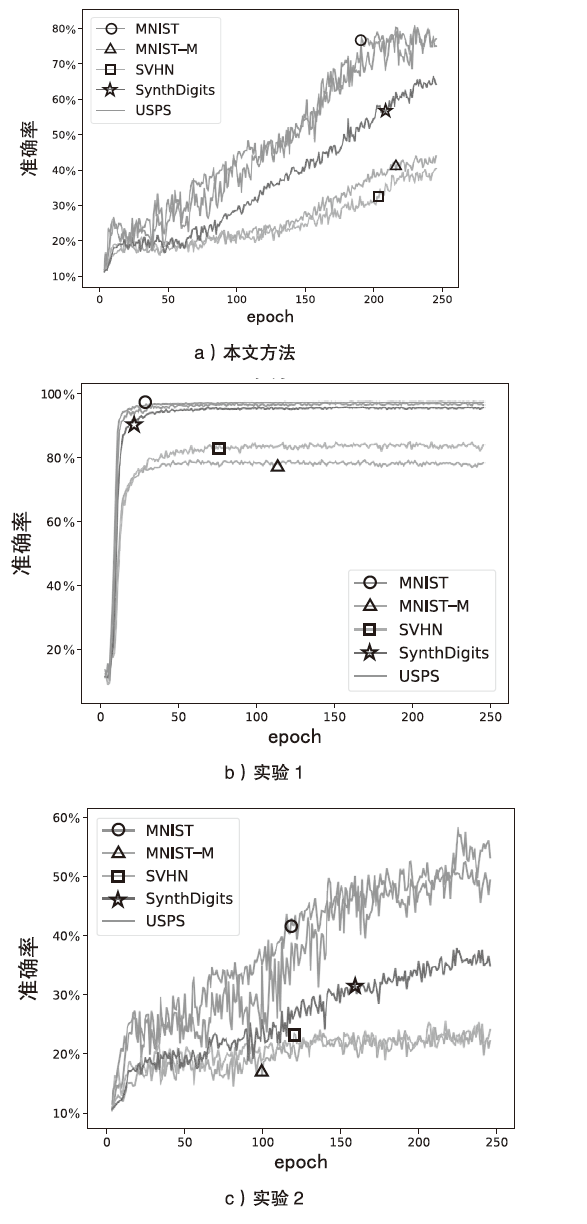

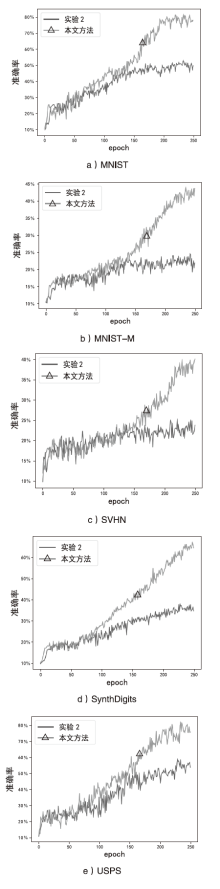

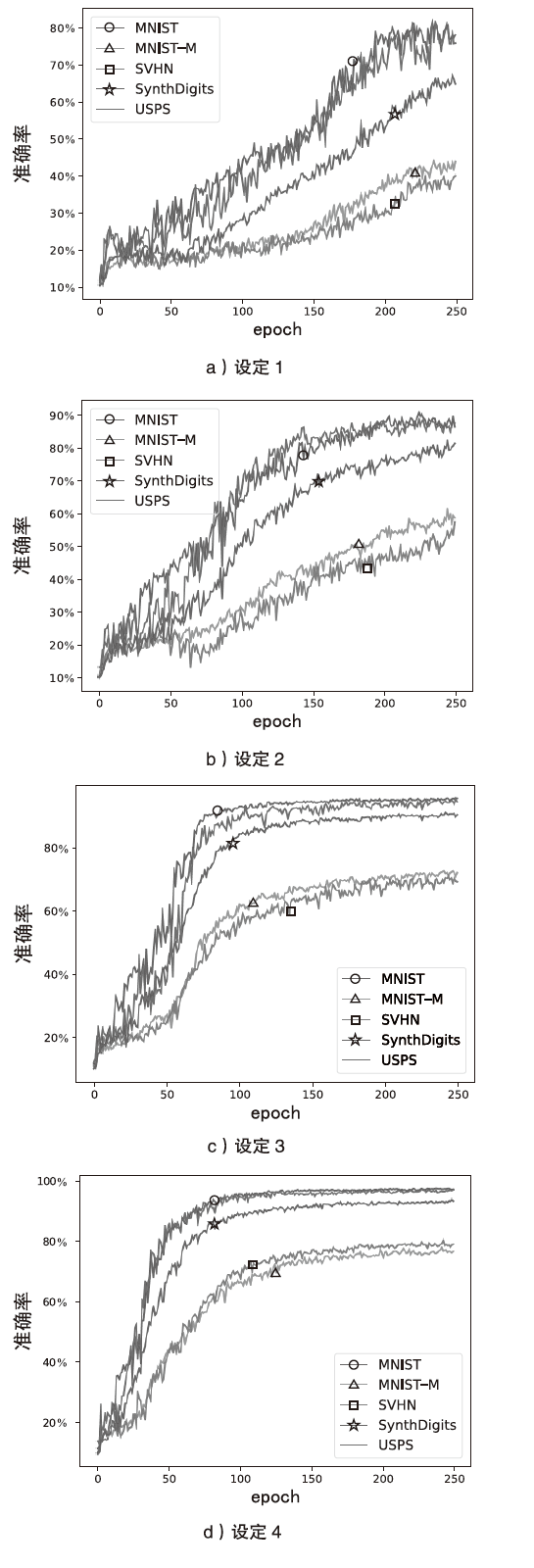

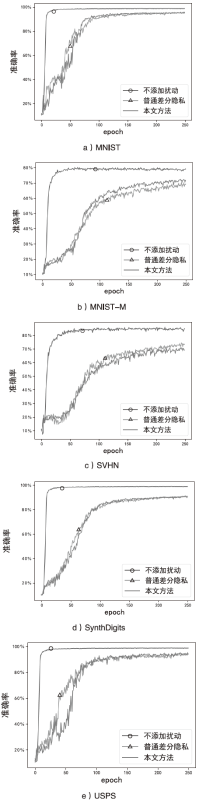

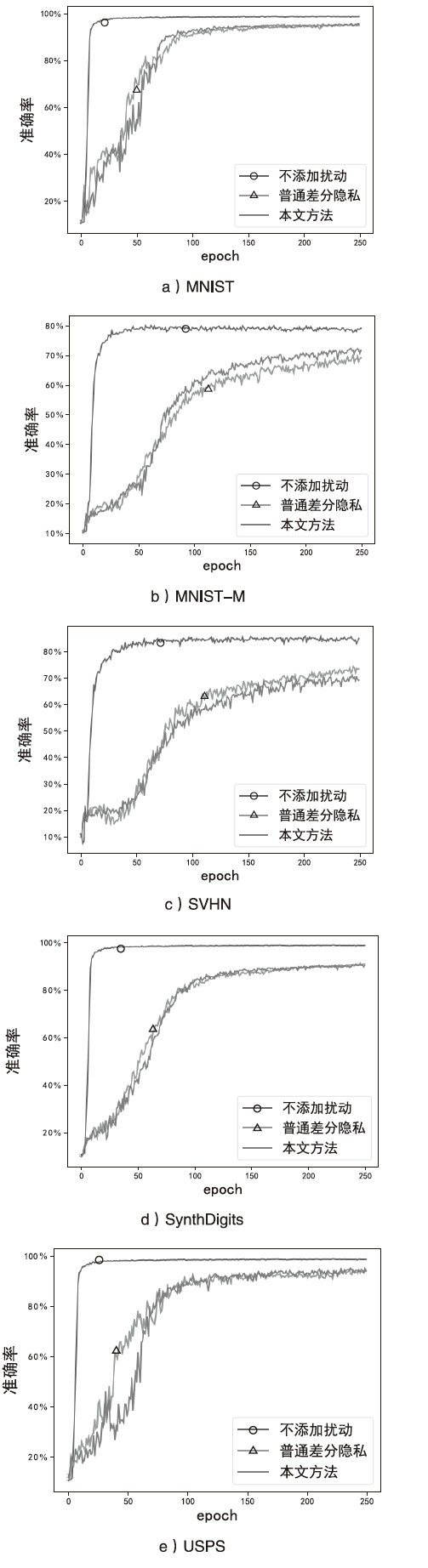

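In federated learning, the large volume of parameter exchange can expose training to security threats from untrusted participating devices, so effective privacy protection is needed for both the training data and the model parameters. Because heterogeneous data are inherently imbalanced, this article proposes an adaptive differential privacy method to secure federated learning on heterogeneous data. First, a different initial privacy budget is set for each client, and Gaussian noise is added to the gradient parameters of the local model. Second, during training, each client's privacy budget is adjusted dynamically according to the loss function value of each iteration round, which speeds up convergence. Third, a trusted central node is set up to randomly swap each layer's parameters among the local models of different clients, and the shuffled local model parameters are then uploaded to the central server for aggregation. Finally, the central server aggregates the shuffled parameters uploaded by the trusted central node and, according to a preset global privacy budget threshold, adds appropriate noise to the global model as a privacy correction, achieving privacy protection at the server level. Experimental results show that, under the same heterogeneous data conditions, the method converges faster and achieves better model performance than ordinary differential privacy methods.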
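The four steps in the abstract can be pictured with a minimal pure-Python sketch. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not give the budget-update rule, the clipping bound, or the server-side noise calibration, so `adapt_epsilon`, `max_norm`, the `delta` value, and every function name below are hypothetical. The noise scale uses the classic Gaussian-mechanism formula, which is stated for a privacy budget between 0 and 1.

```python
import math
import random


def gaussian_sigma(epsilon, delta, sensitivity):
    """Noise scale of the classic Gaussian mechanism (stated for 0 < epsilon < 1)."""
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def clip(vec, max_norm):
    """Rescale vec so that its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(v * v for v in vec))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in vec]


def privatize(layers, epsilon, delta, max_norm, rng):
    """Client side: clip each layer's gradient, then add Gaussian noise.

    Assumption: max_norm is used as the per-layer sensitivity.
    """
    sigma = gaussian_sigma(epsilon, delta, max_norm)
    return [[v + rng.gauss(0.0, sigma) for v in clip(layer, max_norm)]
            for layer in layers]


def adapt_epsilon(epsilon, prev_loss, loss, rate=0.5, eps_min=0.1, eps_max=0.9):
    """Placeholder rule (not from the paper): scale the budget by the relative loss drop."""
    rel_drop = (prev_loss - loss) / prev_loss if prev_loss > 0 else 0.0
    return min(eps_max, max(eps_min, epsilon * (1.0 + rate * rel_drop)))


def shuffle_layers(client_models, rng):
    """Trusted node: for every layer index, permute that layer across clients."""
    n_layers = len(client_models[0])
    mixed = [[None] * n_layers for _ in client_models]
    for j in range(n_layers):
        column = [model[j] for model in client_models]
        rng.shuffle(column)
        for i, layer in enumerate(column):
            mixed[i][j] = layer
    return mixed


def average_layers(models):
    """Server: unweighted layer-wise mean of the uploaded models."""
    n = len(models)
    return [[sum(m[j][k] for m in models) / n for k in range(len(models[0][j]))]
            for j in range(len(models[0]))]


def add_server_noise(global_model, epsilon_global, delta, max_norm, n_clients, rng):
    """Server: add Gaussian noise sized from the global budget.

    Assumption: the mean of n_clients clipped updates has sensitivity max_norm / n_clients.
    """
    sigma = gaussian_sigma(epsilon_global, delta, max_norm / n_clients)
    return [[v + rng.gauss(0.0, sigma) for v in layer] for layer in global_model]


if __name__ == "__main__":
    rng = random.Random(0)
    # Three clients, each holding a two-layer gradient (toy values).
    grads = [[[0.3, -0.4], [1.2]], [[2.0, 1.0], [-0.5]], [[0.1, 0.1], [0.7]]]
    budgets = [0.3, 0.5, 0.8]  # a different initial budget per client
    noisy = [privatize(g, e, 1e-5, 1.0, rng) for g, e in zip(grads, budgets)]
    mixed = shuffle_layers(noisy, rng)
    global_update = add_server_noise(average_layers(mixed), 0.5, 1e-5, 1.0, len(mixed), rng)
    print(global_update)
    # Budgets for the next round after the loss fell from 2.0 to 1.5.
    print([adapt_epsilon(e, 2.0, 1.5) for e in budgets])
```

One property worth noting in this sketch: because the shuffle only permutes whole layers across clients, the unweighted layer-wise average is unchanged, so the server sees the same aggregate while no uploaded model corresponds to a single client.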

Cite this article

XU Ruzhi, TONG Yumeng, DAI Lipeng. Research on Federated Learning Adaptive Differential Privacy Method Based on Heterogeneous Data[J]. Netinfo Security, 2025, 25(1): 63-77.


