Netinfo Security, 2025, Vol. 25, Issue (10): 1570-1578. doi: 10.3969/j.issn.1671-1122.2025.10.008


Research on the Application of Large Language Model in False Positive Handling for Managed Security Services

HU Longhui1, SONG Hong1, WANG Weiping1, YI Jia2, ZHANG Zhixiong2

1. School of Computer Science and Engineering, Central South University, Changsha 410083, China

2. Sangfor Technologies Co., Ltd., Shenzhen 518052, China

Received: 2025-03-03 Online: 2025-10-10 Published: 2025-11-07

Contact: SONG Hong E-mail: songhong@csu.edu.cn


Cite this article

HU Longhui, SONG Hong, WANG Weiping, YI Jia, ZHANG Zhixiong. Research on the Application of Large Language Model in False Positive Handling for Managed Security Services[J]. Netinfo Security, 2025, 25(10): 1570-1578.


URL: http://netinfo-security.org/EN/10.3969/j.issn.1671-1122.2025.10.008

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| preprocessing_num_workers | 16 | learning_rate | 5×10⁻⁵ |
| lr_scheduler_type | cosine | cutoff_len | 2048 |
| finetuning_type | LoRA | save_steps | 100 |
| per_device_train_batch_size | 2 | max_samples | 1×10⁵ |
| gradient_accumulation_steps | 8 | fp16 | True |
| max_grad_norm | 1.0 | logging_steps | 5 |
| num_train_epochs | 3.0 | lora_rank | 8 |
| optim | adamw_torch | lora_alpha | 16 |
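Several of the fine-tuning parameters above interact with each other, and a small calculation makes that concrete. The following is a minimal sketch, not the authors' training code: it reads the learning rate as 5e-5, assumes a single device unless told otherwise, assumes the cosine schedule has no warmup phase (none is listed in the table), and uses the standard LoRA scaling of alpha divided by rank. The function names are hypothetical.

```python
import math

# Values copied from the hyperparameter table.
PER_DEVICE_TRAIN_BATCH_SIZE = 2
GRADIENT_ACCUMULATION_STEPS = 8
LEARNING_RATE = 5e-5
LORA_RANK = 8
LORA_ALPHA = 16


def effective_batch_size(num_devices: int = 1) -> int:
    """Samples contributing to one optimizer step.

    num_devices is an assumption; the table does not state the device count.
    """
    return PER_DEVICE_TRAIN_BATCH_SIZE * GRADIENT_ACCUMULATION_STEPS * num_devices


def lora_scaling() -> float:
    """Scaling factor applied to the low-rank update in standard LoRA (alpha / rank)."""
    return LORA_ALPHA / LORA_RANK


def cosine_lr(step: int, total_steps: int) -> float:
    """Cosine decay from LEARNING_RATE down to 0, assuming no warmup phase."""
    progress = min(max(step / total_steps, 0.0), 1.0)
    return LEARNING_RATE * 0.5 * (1.0 + math.cos(math.pi * progress))
```

Under these assumptions, each optimizer step on one device accumulates 2 × 8 = 16 samples, and the LoRA update is scaled by 16 / 8 = 2.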

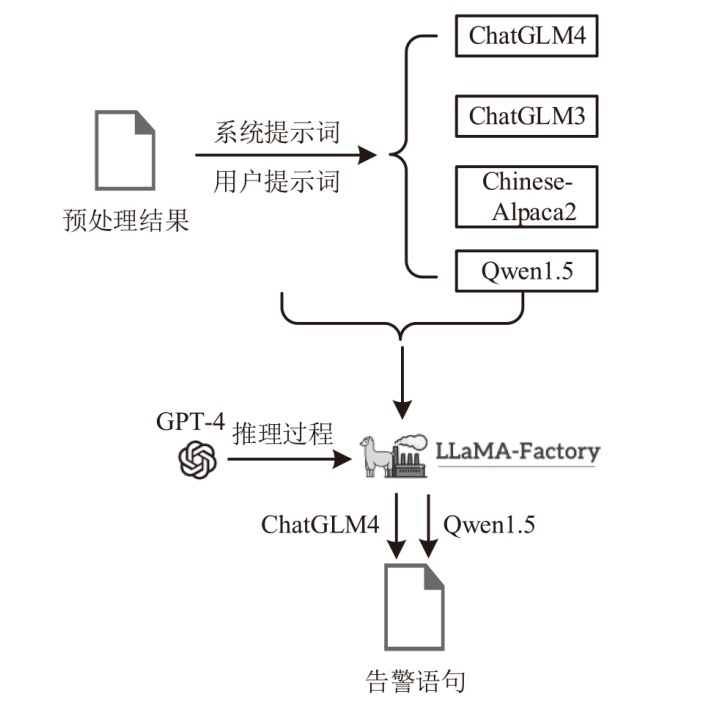

| Large language model | Prompt engineering technique | ROUGE-L |
|---|---|---|
| ChatGLM4 | CoT + Few Shot | 86.322% |
| ChatGLM4 | CoT | 80.415% |
| ChatGLM4 | Few Shot | 84.243% |
| ChatGLM4 | Zero Shot | 79.558% |
| ChatGLM3 | CoT + Few Shot | 79.088% |
| ChatGLM3 | CoT | 51.188% |
| ChatGLM3 | Few Shot | 83.725% |
| ChatGLM3 | Zero Shot | 58.590% |
| Chinese-Alpaca2 | CoT + Few Shot | 66.785% |
| Chinese-Alpaca2 | CoT | 54.743% |
| Chinese-Alpaca2 | Few Shot | 69.468% |
| Chinese-Alpaca2 | Zero Shot | 21.675% |
| Qwen1.5 | CoT + Few Shot | 85.664% |
| Qwen1.5 | CoT | 80.099% |
| Qwen1.5 | Few Shot | 81.235% |
| Qwen1.5 | Zero Shot | 80.495% |
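The scores in this table are ROUGE-L values, a metric based on the longest common subsequence (LCS) between a generated text and a reference text. The sketch below shows one common way to compute it and is not the authors' evaluation script: it assumes the F1 variant (equal weight on precision and recall) and pre-tokenized input, since the tokenization and weighting used for the table are not stated here. The function names are hypothetical.

```python
from typing import Sequence


def lcs_length(a: Sequence[str], b: Sequence[str]) -> int:
    """Length of the longest common subsequence, via row-by-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            # Extend the diagonal on a match, otherwise carry the best neighbour.
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: Sequence[str], reference: Sequence[str]) -> float:
    """ROUGE-L F1 between two token sequences (F1 weighting is an assumption)."""
    if not candidate or not reference:
        return 0.0
    lcs = lcs_length(candidate, reference)
    if lcs == 0:
        return 0.0
    precision = lcs / len(candidate)
    recall = lcs / len(reference)
    return 2 * precision * recall / (precision + recall)
```

For example, scoring the candidate `"alert is false positive".split()` against the reference `"the alert is a false positive".split()` gives an LCS of 4 tokens, a precision of 1, a recall of 4/6, and therefore an F1 of 0.8.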


