Netinfo Security, 2025, Vol. 25, Issue (6): 859-871. doi: 10.3969/j.issn.1671-1122.2025.06.002


Lightweight Malicious Traffic Detection Method Based on Knowledge Distillation

SUN Jianwen¹, ZHANG Bin¹, SI Nianwen², FAN Ying³


1. Department of Cryptogram Engineering, Information Engineering University, Zhengzhou 450001, China
2. Information System Engineering Institute, Information Engineering University, Zhengzhou 450001, China
3. College of Equipment Management and Support, Engineering University of PAP, Xi’an 710038, China

Received: 2025-02-20; Online: 2025-06-10; Published: 2025-07-11


Cite this article

SUN Jianwen, ZHANG Bin, SI Nianwen, FAN Ying. Lightweight Malicious Traffic Detection Method Based on Knowledge Distillation[J]. Netinfo Security, 2025, 25(6): 859-871.


URL: http://netinfo-security.org/EN/10.3969/j.issn.1671-1122.2025.06.002
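
The title's core technique, knowledge distillation [18], trains a compact student to imitate a larger teacher. The tables below compare a 12-layer Model-T with Model-S variants of 1 to 6 Transformer layers, which in that setting would play the teacher and student roles. The paper's exact distillation objective is not given in this excerpt, so the following is only a minimal sketch of the classic soft-target loss from [18]: a temperature-softened KL divergence between teacher and student outputs, scaled by T², blended with ordinary cross-entropy on the true label. The function names, the temperature of 4 and the weight `alpha = 0.5` are illustrative assumptions, not values from the paper.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax of logits / temperature."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    label: int,
    temperature: float = 4.0,  # illustrative assumption, not taken from the paper
    alpha: float = 0.5,        # illustrative weight of the soft (teacher) term
) -> float:
    """Soft-target distillation loss in the style of Hinton et al., for one sample.

    soft = T^2 * KL(teacher_T || student_T), both softened with temperature T
    hard = cross-entropy of the student at T = 1 against the true label
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * (math.log(pt) - math.log(max(ps, 1e-12)))  # guard against underflow to 0
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0.0  # terms with pt = 0 contribute 0 (0 * log 0 := 0)
    )
    hard = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * kl + (1.0 - alpha) * hard


# Usage: a 3-class toy example with invented logits (e.g., benign / malware A / malware B).
teacher = [6.0, 2.5, 1.0]
student = [3.0, 1.0, 0.5]
print(round(kd_loss(student, teacher, label=0), 4))
```

Raising the temperature flattens the teacher's distribution, so the student also learns how the teacher ranks the wrong classes; [18] multiplies the soft term by T² so its gradient magnitude stays roughly constant as the temperature changes.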

| Test dataset | Model (Transformer layers) | AC | PR | RC | F1 | Inference speed (samples/s) |
|---|---|---|---|---|---|---|
| USTC-TFC2016 | Model-T(12) | 99.60% | 98.80% | 99.12% | 98.95% | 1033.58 |
| | Model-S(1) | 99.38% | 97.82% | 98.62% | 98.17% | 10917.74 |
| ISCX-VPN2016-Service | Model-T(12) | 100% | 100% | 100% | 100% | 1315.90 |
| | Model-S(1) | 100% | 100% | 100% | 100% | 13620.15 |
| CSE-CIC-IDS2018 | Model-T(12) | 99.94% | 97.57% | 99.99% | 98.54% | 1051.96 |
| | Model-S(1) | 99.86% | 96.17% | 98.95% | 97.21% | 10789.78 |
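
Dividing the student's throughput by the teacher's gives the speedup of the one-layer student over the 12-layer teacher on each test set. The minimal sketch below copies the inference-speed column of the table above; the speedup is a derived quantity, not a number stated in the source, and because the excerpt gives neither the measurement hardware nor the batch size, it is only meaningful as a within-table comparison. The names `THROUGHPUT` and `speedup` are hypothetical.

```python
# Inference throughput in samples/s, copied from the table above:
# dataset -> (Model-T(12), Model-S(1))
THROUGHPUT = {
    "USTC-TFC2016": (1033.58, 10917.74),
    "ISCX-VPN2016-Service": (1315.90, 13620.15),
    "CSE-CIC-IDS2018": (1051.96, 10789.78),
}


def speedup(teacher_per_s: float, student_per_s: float) -> float:
    """Ratio of student to teacher throughput (how many times faster the student is)."""
    return student_per_s / teacher_per_s


for name, (teacher_rate, student_rate) in THROUGHPUT.items():
    print(f"{name}: {speedup(teacher_rate, student_rate):.2f}x")
```

This yields roughly 10.3x to 10.6x across the three test sets, while F1 drops by at most 1.33 percentage points (CSE-CIC-IDS2018: 98.54% to 97.21%).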

| Method | Model size (MB) | USTC-TFC2016 AC | USTC-TFC2016 F1 | ISCX-VPN2016-Service AC | ISCX-VPN2016-Service F1 |
|---|---|---|---|---|---|
| ET-BERT | 504 | 98.97% | 99.02% | 99.92% | 99.95% |
| TrafficFormer | 504 | 98.16% | 98.30% | 95.89% | 95.80% |
| Proposed (Model-T) | 286 | 99.60% | 98.95% | 100% | 100% |
| TSCRNN | 11.7 | 96.90% | 96.85% | 88.04% | 89.26% |
| DeepPacket | 38.5 | 95.36% | 93.88% | 90.66% | 89.83% |
| FS-Net | 25.7 | 97.60% | 96.90% | — | — |
| MLSTM-FCN | 29.1 | 97.00% | 96.30% | — | — |
| Proposed method | 26 | 99.38% | 98.17% | 100% | 100% |
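
The tables report accuracy (AC), precision (PR), recall (RC) and F1, but the excerpt does not state how per-class scores are averaged. The reported figures suggest averaging over classes rather than computing F1 from the averaged PR and RC: for Model-T on CSE-CIC-IDS2018, the harmonic mean 2·97.57·99.99/(97.57+99.99) ≈ 98.77%, whereas the reported F1 is 98.54%. The sketch below assumes macro averaging (an unweighted mean over classes); the function name `macro_metrics` and the toy confusion matrix are invented for illustration.

```python
from typing import Dict, Sequence


def macro_metrics(cm: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall and F1 from a confusion matrix.

    cm[i][j] = number of samples whose true class is i and predicted class is j.
    Macro averaging gives every class equal weight regardless of its size
    (an assumption here; the source does not name its averaging scheme).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "AC": correct / total,
        "PR": sum(precisions) / n,
        "RC": sum(recalls) / n,
        "F1": sum(f1s) / n,  # mean of per-class F1, not F1 of the mean PR and RC
    }


# Toy imbalanced example (invented numbers): 98 benign flows, all classified
# correctly; 2 malicious flows, of which only 1 is detected.
m = macro_metrics([[98, 0], [1, 1]])
print({k: round(v, 4) for k, v in m.items()})
```

For the toy matrix, AC is 0.99 while macro RC is 0.75 and macro F1 is about 0.83: one missed malicious flow barely moves accuracy but halves that class's recall. This is one way a near-perfect AC can sit next to a visibly lower PR or F1 (e.g., AC 99.94% but PR 97.57% for Model-T on CSE-CIC-IDS2018).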

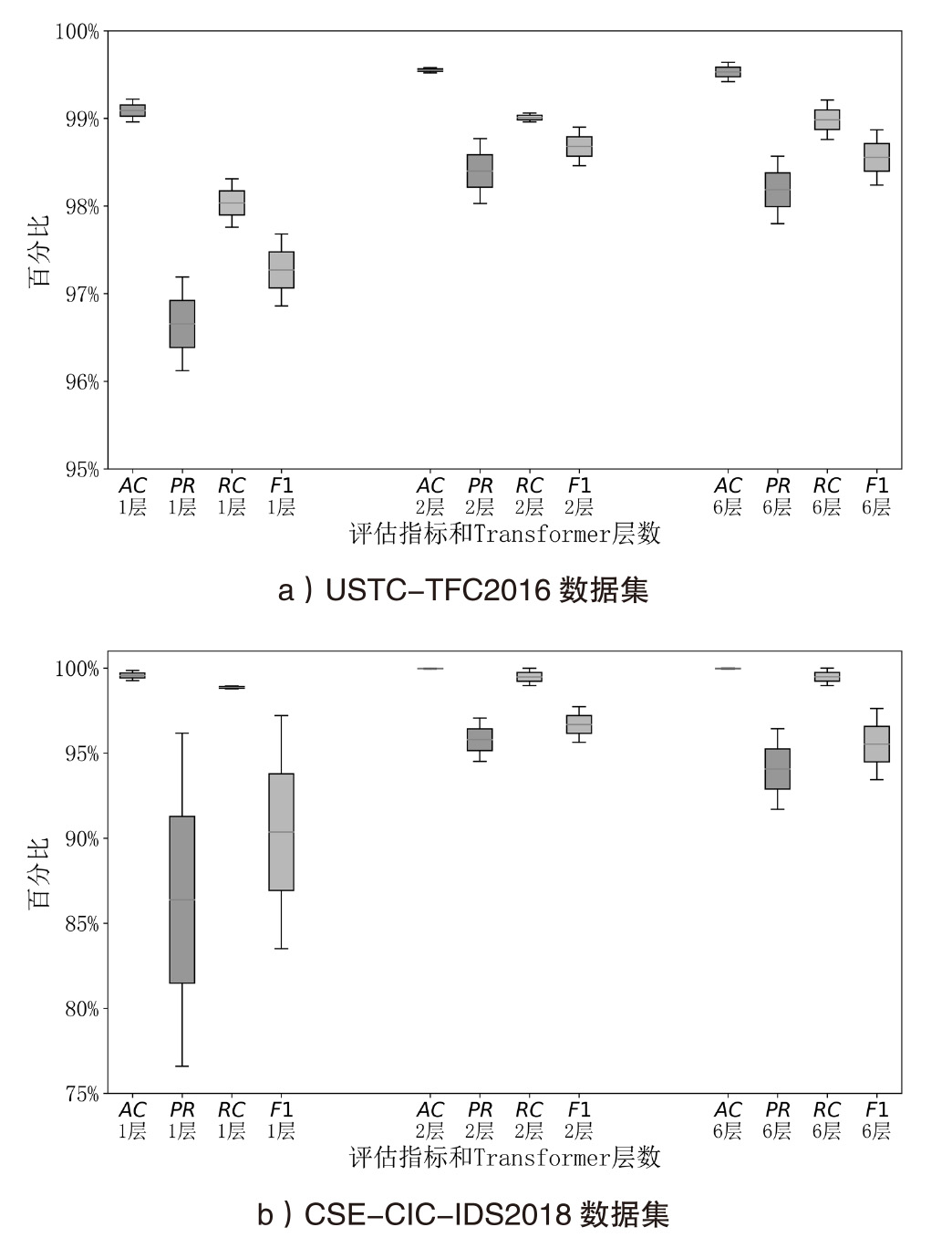

| Dataset | Model (Transformer layers) | AC | PR | RC | F1 | Model size (MB) |
|---|---|---|---|---|---|---|
| USTC-TFC2016 | Model-T(12) | 99.60% | 98.80% | 99.12% | 98.95% | 286.0 |
| | Model-S(6) | 99.64% | 98.57% | 99.21% | 98.87% | 144.2 |
| | Model-S(2) | 99.58% | 98.77% | 99.06% | 98.90% | 49.7 |
| | Model-S(1) | 99.22% | 97.19% | 98.31% | 97.68% | 26.0 |
| CSE-CIC-IDS2018 | Model-T(12) | 99.94% | 97.57% | 99.99% | 98.54% | 286.0 |
| | Model-S(6) | 100% | 96.43% | 100% | 97.62% | 144.2 |
| | Model-S(2) | 99.98% | 94.51% | 99.99% | 95.64% | 49.7 |
| | Model-S(1) | 99.86% | 96.17% | 98.95% | 97.21% | 26.0 |
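
The size column makes the cost-accuracy trade-off of shallower students easy to quantify. The sketch below copies the sizes and F1 scores from the table above and derives each student's compression ratio relative to Model-T(12) and its F1 change in percentage points; the ratios and differences are computed here and are not figures reported in the source. The names `TEACHER`, `STUDENTS`, `compression_ratio` and `f1_change` are hypothetical.

```python
from typing import Dict

# (model, size in MB, F1 in % per dataset), copied from the table above
TEACHER = ("Model-T(12)", 286.0, {"USTC-TFC2016": 98.95, "CSE-CIC-IDS2018": 98.54})
STUDENTS = [
    ("Model-S(6)", 144.2, {"USTC-TFC2016": 98.87, "CSE-CIC-IDS2018": 97.62}),
    ("Model-S(2)", 49.7, {"USTC-TFC2016": 98.90, "CSE-CIC-IDS2018": 95.64}),
    ("Model-S(1)", 26.0, {"USTC-TFC2016": 97.68, "CSE-CIC-IDS2018": 97.21}),
]


def compression_ratio(student_mb: float, teacher_mb: float) -> float:
    """How many times smaller the student is than the teacher."""
    return teacher_mb / student_mb


def f1_change(student_f1: Dict[str, float], teacher_f1: Dict[str, float]) -> Dict[str, float]:
    """Per-dataset F1 difference (student minus teacher), in percentage points."""
    return {ds: round(student_f1[ds] - teacher_f1[ds], 2) for ds in teacher_f1}


_, teacher_mb, teacher_f1 = TEACHER
for name, size_mb, f1 in STUDENTS:
    print(f"{name}: {compression_ratio(size_mb, teacher_mb):.1f}x smaller, "
          f"F1 change (pp) {f1_change(f1, teacher_f1)}")
```

Model-S(6) is about 2.0x smaller with almost no F1 loss on USTC-TFC2016 (-0.08 pp), and Model-S(1) is 11x smaller. On CSE-CIC-IDS2018, however, the 2-layer student loses more F1 (-2.90 pp) than the 1-layer student (-1.33 pp), so F1 does not decrease monotonically with depth on that dataset.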

| [1] | ANDERSON B, MCGREW D. Machine Learning for Encrypted Malware Traffic Classification: Accounting for Noisy Labels and Non-Stationarity[C]// ACM. 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. New York: ACM, 2017: 1723-1732. |

| [2] | AHMED A M, ABDULLAH A, SUNIL K S, et al. Zero-Day Exploits Detection with Adaptive WavePCA-Autoencoder (AWPA) Adaptive Hybrid Exploit Detection Network (Ahednet)[EB/OL]. (2025-02-03)[2025-02-14]. https://www.nature.com/articles/s41598-025-87615-2. |

| [3] | ZHOU Jiajun, FU Wentao, SONG Hao, et al. Multi-View Correlation-Aware Network Traffic Detection on Flow Hypergraph[EB/OL]. (2025-01-15)[2025-02-14]. https://arxiv.org/abs/2501.08610. |

| [4] | MARÍN G, CASAS P, CAPDEHOURAT G. Deep in the Dark-Deep Learning-Based Malware Traffic Detection without Expert Knowledge[C]// IEEE. 2019 IEEE Security and Privacy Workshops (SPW). New York: IEEE, 2019: 36-42. |

| [5] | YANG Hongyu, ZHANG Haohao, HU Ze, et al. Survey on Network Abnormal Traffic Detection Based on Deep Learning[J]. Journal of Wuhan University(Natural Science Edition), 2025, 71(2): 159-172. |


| [6] | LIN Xinjie, XIONG Gang, GOU Gaopeng, et al. ET-BERT: A Contextualized Datagram Representation with Pre-training Transformers for Encrypted Traffic Classification[C]// ACM. 31st Proceedings of the ACM Web Conference 2022. New York: ACM, 2022: 633-642. |

| [7] | ZHOU Guangmeng, GUO Xiongwen, LIU Zhuotao, et al. TrafficFormer: An Efficient Pre-trained Model for Traffic Data[C]// IEEE. 2025 IEEE Symposium on Security and Privacy (SP). New York: IEEE, 2024: 1844-1860. |

| [8] | ZHAO Ruijie, ZHAN Mingwei, DENG Xianwen, et al. A Novel Self-Supervised Framework Based on Masked Autoencoder for Traffic Classification[J]. IEEE/ACM Transactions on Networking, 2024, 32(3): 2012-2025. |

| [9] | XU Ke, ZHANG Xixi, WANG Yu, et al. Self-Supervised Learning Malware Traffic Classification Based on Masked Auto-Encoder[J]. IEEE Internet of Things Journal, 2024, 11(10): 17330-17340. |

| [10] | CHENG Yu, WANG Duo, ZHOU Pan, et al. A Survey of Model Compression and Acceleration for Deep Neural Networks[EB/OL]. (2020-06-14)[2025-02-14]. https://arxiv.org/abs/1710.09282. |

| [11] | YOU Haoran, LI Chaojian, XU Pengfei, et al. Drawing Early-Bird Tickets: Towards More Efficient Training of Deep Networks[EB/OL]. (2022-02-16)[2025-02-14]. https://arxiv.org/abs/1909.11957v5. |

| [12] | HOWARD A G, ZHU Menglong, CHEN Bo, et al. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications[EB/OL]. (2017-04-17)[2025-02-14]. https://arxiv.org/abs/1704.04861. |

| [13] | DONG Peijie, LI Lujun, WEI Zimian. DisWOT: Student Architecture Search for Distillation without Training[C]// IEEE. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New York: IEEE, 2023: 11898-11908. |

| [14] | WANG Boyao, PAN Rui, DIAO Shizhe, et al. Adapt-Pruner: Adaptive Structural Pruning for Efficient Small Language Model Training[EB/OL]. (2025-02-05)[2025-02-14]. https://arxiv.org/abs/2502.03460. |

| [15] | ZHU Kehan, HU Fuyi, DING Yuanbing, et al. A Comprehensive Review of Network Pruning Based on Pruning Granularity and Pruning Time Perspectives[EB/OL]. (2025-02-11)[2025-02-14]. https://doi.org/10.1016/j.neucom.2025.129382. |

| [16] | GONG Yunchao, LIU Liu, YANG Ming, et al. Compressing Deep Convolutional Networks Using Vector Quantization[EB/OL]. (2014-12-18)[2025-02-14]. https://arxiv.org/abs/1412.6115. |

| [17] | XU Yuwei, CAO Jie, SONG Kehui, et al. FastTraffic: A Lightweight Method for Encrypted Traffic Fast Classification[EB/OL]. (2023-08-16)[2025-02-14]. https://doi.org/10.1016/j.comnet.2023.109965. |

| [18] | HINTON G, VINYALS O, DEAN J. Distilling the Knowledge in a Neural Network[EB/OL]. (2015-03-09)[2025-02-14]. https://arxiv.org/abs/1503.02531. |

| [19] | GUO Daya, YANG Dejian, ZHANG Haowei, et al. DeepSeek-R1: Incentivizing Reasoning Capability in Large Language Models via Reinforcement Learning[EB/OL]. (2025-01-22)[2025-02-14]. https://arxiv.org/abs/2501.12948. |

| [20] | ZHU Shizhou, XU Xiaolong, ZHAO Juan, et al. LKD-STNN: A Lightweight Malicious Traffic Detection Method for Internet of Things Based on Knowledge Distillation[J]. IEEE Internet of Things Journal, 2023, 11(4): 6438-6453. |

| [21] | WEN Yuecheng, HAN Xiaohui, ZUO Wenbo, et al. A Knowledge Distillation-Driven Lightweight CNN Model for Detecting Malicious Encrypted Network Traffic[C]// IEEE. 2024 International Joint Conference on Neural Networks (IJCNN 2024). New York: IEEE, 2024: 1-8. |

| [22] | ACETO G, BOVENZI G, CIUONZO D, et al. Characterization and Prediction of Mobile-App Traffic Using Markov Modeling[J]. IEEE Transactions on Network and Service Management, 2021, 18(1): 907-925. |

| [23] | FU Chuangpu, LI Qi, SHEN Meng, et al. Realtime Robust Malicious Traffic Detection via Frequency Domain Analysis[C]// ACM. Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security. New York: ACM, 2021: 3431-3446. |

| [24] | ZOU Zhuang, GE Jingguo, ZHENG Hongbo, et al. Encrypted Traffic Classification with a Convolutional Long Short-Term Memory Neural Network[C]// IEEE. 2018 IEEE 20th International Conference on High Performance Computing and Communications. New York: IEEE, 2018: 329-334. |

| [25] | HE Hongye, YANG Zhiguo, CHEN Xiangning. PERT: Payload Encoding Representation from Transformer for Encrypted Traffic Classification[C]// IEEE. 2020 ITU Kaleidoscope: Industry-Driven Digital Transformation (ITU K). New York: IEEE, 2020: 1-8. |

| [26] | ROMERO A, BALLAS N, KAHOUI S E, et al. FitNets: Hints for Thin Deep Nets[EB/OL]. (2015-03-27)[2025-02-14]. https://arxiv.org/abs/1412.6550. |

| [27] | TIAN Yonglong, KRISHNAN D, ISOLA P. Contrastive Representation Distillation[EB/OL]. (2022-01-24)[2025-02-14]. https://arxiv.org/abs/1910.10699. |

| [28] | PARK W, KIM D, LU Yuan, et al. Relational Knowledge Distillation[C]// IEEE. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). New York: IEEE, 2019: 3962-3971. |

| [29] | ZHANG Duzhen, YU Yahan, CHEN Feilong, et al. Decomposing Logits Distillation for Incremental Named Entity Recognition[C]// ACM. 46th International ACM SIGIR Conference on Research and Development in Information Retrieval. New York: ACM, 2023: 1919-1923. |

| [30] | XIE Luofeng, CEN Xuexiang, LU Houhong, et al. A Hierarchical Feature-Logit-Based Knowledge Distillation Scheme for Internal Defect Detection of Magnetic Tiles[EB/OL]. (2024-08-01)[2025-02-14]. https://dl.acm.org/doi/10.1016/j.aei.2024.102526. |

| [31] | SUN Jianwen, ZHANG Bin, LI Hongyu, et al. T-Sanitation: Contrastive Masked Auto-Encoder-Based Few-Shot Learning for Malicious Traffic Detection[EB/OL]. (2025-02-10)[2025-02-14]. https://doi.org/10.1007/s11227-025-07104-1. |

| [32] | LIN T Y, GOYAL P, GIRSHICK R, et al. Focal Loss for Dense Object Detection[EB/OL]. (2017-08-07)[2025-02-14]. https://openaccess.thecvf.com/content_ICCV_2017/papers/Lin_Focal_Loss_for_ICCV_2017_paper.pdf. |

| [33] | SHARAFALDIN I, LASHKARI A H, GHORBANI A A. Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization[C]// SciTePress. The 4th International Conference on Information Systems Security and Privacy. Setubal: SciTePress, 2018: 108-116. |

| [34] | WANG Wei, ZHU Ming, ZENG Xuewen, et al. Malware Traffic Classification Using Convolutional Neural Network for Representation Learning[C]// IEEE. 2017 International Conference on Information Networking (ICOIN). New York: IEEE, 2017: 712-717. |

| [35] | GIL G D, LASHKARI A H, MAMUN M, et al. Characterization of Encrypted and VPN Traffic Using Time-Related Features[C]// SciTePress. The 2nd International Conference on Information Systems Security and Privacy. Setubal: SciTePress, 2016: 407-414. |

| [36] | LIN Kunda, XU Xiaolong, GAO Honghao. TSCRNN: A Novel Classification Scheme of Encrypted Traffic Based on Flow Spatiotemporal Features for Efficient Management of IIoT[EB/OL]. (2021-03-16)[2025-02-14]. https://doi.org/10.1016/j.comnet.2021.107974. |

| [37] | KARIM F, MAJUMDAR S, DARABI H, et al. Multivariate LSTM-FCNs for Time Series Classification[J]. Neural Networks, 2019, 116: 237-245. |

| [38] | LIU Chang, HE Longtao, XIONG Gang, et al. FS-Net: A Flow Sequence Network for Encrypted Traffic Classification[C]// IEEE. IEEE INFOCOM 2019-IEEE Conference on Computer Communications. New York: IEEE, 2019: 1171-1179. |

| [39] | LOTFOLLAHI M, JAFARI-SIAVOSHANI M, SHIRALI-HOSSEIN-ZADE R, et al. Deep Packet: A Novel Approach for Encrypted Traffic Classification Using Deep Learning[J]. Soft Computing, 2020, 24(3): 1999-2012. |

